After our community highlighted some flaws and limitations in our latency tests and their coverage, we developed a reliable and consistent way of measuring latency. Following several explorations, our solution measures the relative delay between two controlled loops. This let us measure the latency of multiple connections and Bluetooth codecs across 27 headphones.

From the data gathered by testing these initial models, we identified clear differences in latency between connection types and codecs. Examples include the advantage of aptX Adaptive (Low Latency) over SBC and the added latency of Bluetooth compared to a wireless receiver. We were able to match measurements published by a manufacturer (Audeze) and observe the impact of added audio processing, even over a wired connection. There are still some limitations in codec coverage and in optimization for specific source devices. Even so, the results provide clear data that help us anticipate potential latency issues in uses that require low latency, like gaming.

The Problem

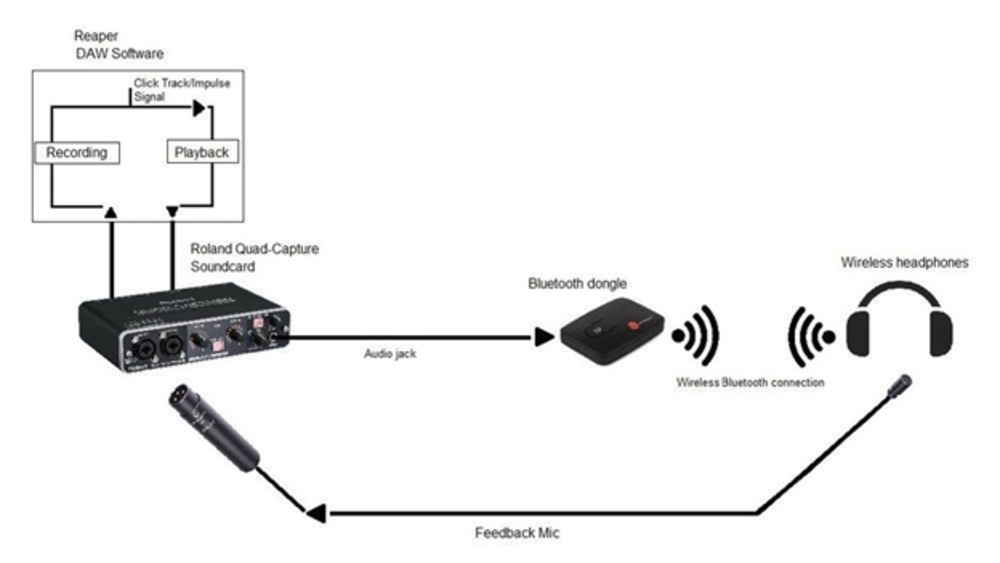

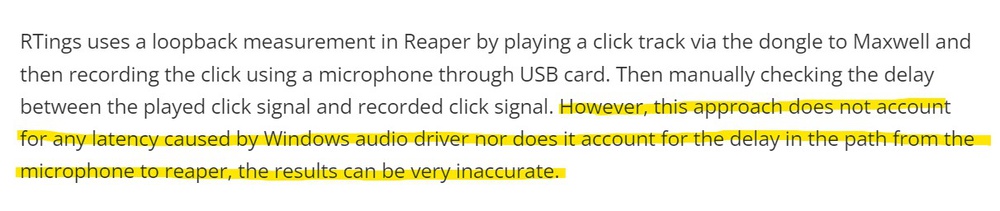

Our previous latency methodology used a microphone connected to a Roland QUAD-CAPTURE sound card, used in conjunction with a Windows PC. Bluetooth headphones connected to the PC through a Bluetooth 5.0 transmitter, while wired headphones connected directly to the sound card. We sent a signal to the headphones and recorded it with the microphone, then compared the recorded signal to the source signal in a digital audio workstation (DAW).

However, our lack of control over the delay added by each device in the chain could lead to inaccurate and inconsistent measurements. In some cases, it also forced us to step outside our methodology to get consistent and accurate results.

Furthermore, our iOS and Android measurements weren't comparable with our other latency measurements. These iOS- and Android-specific tests used an audio-sync test in the YouTube mobile app to measure the offset between video and audio. This methodology helped identify potential issues when using headphones connected to a phone. However, it wasn't clear to users that it differed from our other tests and that its results weren't comparable.

To raise the quality of our tests and provide more accurate and reliable results, we needed to address these inconsistencies, which could cause confusion when comparing different values. Users have also written to us about our results and provided valuable feedback, which you'll see below.

Evidence

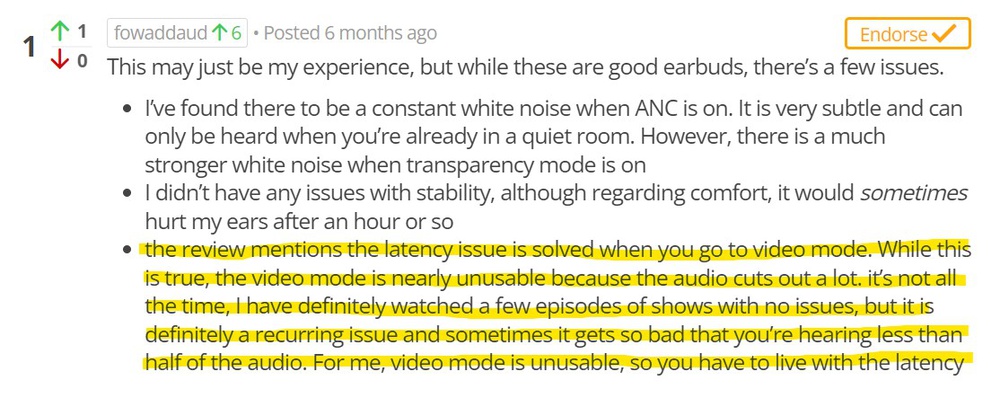

Here are some of the comments, feedback, and criticism we've received that highlighted the need to address the limitations and issues with our headphone latency tests:

Aspects We Won't Be Addressing

We won't be addressing iOS/Android latency. The required setup is very different from our main setup, and it relies on constantly evolving third-party implementations like the YouTube app. Because of this, the results can differ greatly in scale from those obtained with the method we developed on a PC. The results would also apply only to our specific phone, OS version, and app version, with no guarantee that they're valid for other mobile devices.

Proof Of Success

To validate that we fixed the issue, we had two objectives:

- If we subtract system latency, our results must be close to the delay measured by an oscilloscope (jack to mic).

- Our results should align with Audeze's measurements of their product, the Maxwell Wireless.

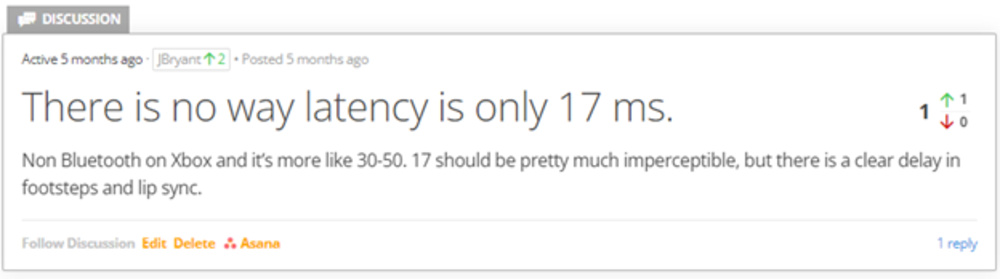

Using LatencyMon To Ensure Stability During Audio Production

LatencyMon is software that monitors drivers and other system exceptions to check whether a system is stable enough for real-time audio production. A clean computer is more likely to be flagged as suitable for real-time audio than a powerful but bloated PC. Our current latency PC passed LatencyMon's checks, but the tool highlights how important it is to maintain the system carefully. For this reason, we're considering a snapshot/backup system (like Acronis Cyber Protect) to restore a clean system between each pair of headphones we test, since we may need to install their software.

Audio Precision Hardware

The non-Bluetooth results from our previous methodology were compared to results obtained with an APx500 analyzer from Audio Precision (measuring equipment combined with an audio interface). This device has a specialized latency test for DUT Delay (Device Under Test Delay) and can consistently report the true latency of the device under test, which makes it an interesting solution.

After contacting Audio Precision directly for more details, we learned that anything using ASIO or ASIO4All can't accurately measure absolute true latency, since Windows controls the clock. (ASIO is a low-latency, high-quality sound card driver protocol, and ASIO4All is a free low-latency audio driver.) This limitation matters most when measuring non-Bluetooth wireless latency. As a result, this hardware wouldn't be a perfect plug-and-play solution for all the connection methods and devices we want to test.

AVLatency.com Toolbox

In June 2022, Allen from AVLatency.com reached out to us. He had created a tool to measure the audio latency of soundbars, and it was worth looking into to learn from it and possibly adapt some of his ideas to our headphone testing.

After reading his explanation of how his HDMI latency test works, we found the concept simple and very clever. It splits the HDMI output between two loops, only one of which includes the device under test, and compares the difference between them. However, since it requires splitting audio with cables on the source side, adding Bluetooth measurements with this method is an immediate challenge. Nonetheless, the idea is potentially scalable if we apply the offset approach AVLatency.com uses.

Round Trip Latency (RTL) Compensation

Inspired by the work of Allen (AVLatency.com), we saw promise in comparing two identical signal paths where the only difference is the device under test, since this lets us compensate for every other device in the chain. Our setup's added latency can be huge. However, both paths follow the same route except for the headphones under test, so the difference between them should give us the latency we want.
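The cancellation argument can be illustrated with a few lines of Python. This is a minimal sketch, not our measurement tooling. The stage names and millisecond values are hypothetical placeholders, and the model assumes every shared stage adds exactly the same delay to both loops.

```python
# Minimal sketch: shared-chain delays cancel when subtracting two loops.
# Stage names and values are hypothetical placeholders, not measured data.


def path_latency_ms(stages: dict[str, float]) -> float:
    """Total delay of a signal path, modeled as the sum of its stage delays."""
    return sum(stages.values())


def differential_latency_ms(shared_ms: dict[str, float], dut_ms: float) -> float:
    """Delay of the loop through the device under test minus the reference loop.

    Assumes both loops contain identical shared stages; only the DUT differs.
    """
    reference_loop = path_latency_ms(shared_ms)
    dut_loop = path_latency_ms({**shared_ms, "headphones_under_test": dut_ms})
    return dut_loop - reference_loop


if __name__ == "__main__":
    shared = {
        "windows_driver_and_buffers": 20.0,  # hypothetical
        "interface_playback": 5.0,  # hypothetical
        "interface_recording": 5.0,  # hypothetical
    }
    print(differential_latency_ms(shared, dut_ms=13.0))  # 13.0
```

In this toy model, doubling every shared value still leaves the result at 13.0 ms. That invariance is the property the approach relies on. If the shared stages differ between the two loops, for example through mismatched buffers, that difference leaks into the result.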

Early results were positive, so we moved in this direction. Keep in mind that this approach isn't "measuring" latency in the strict sense. It involves recording two audio paths playing the same audio track and comparing the time delay between them. This is common in audio production, since most digital audio workstations (DAWs) can estimate latency and automatically shift tracks to align them. The slate serves the same purpose in the movie industry: it's used to sync video with audio, including audio recorded by different devices. Our initial Roland QUAD-CAPTURE sound card made this hard to do, since it doesn't have a loopback interface and has limited outputs. While it should be able to do it, we struggled to get it running smoothly. A sound card with more inputs and a loopback interface would be a great benefit moving forward.

The Need For A New Interface

Given the issues we faced with the Roland sound card, we sought a second opinion from another modern interface: the Focusrite Scarlett 18i20 (3rd gen), whose additional inputs should make things easier. Its driver and configuration software are much more powerful and easier to configure. What was a challenge with the Roland suddenly felt simple, and we could focus on testing headphones rather than configuring the setup.

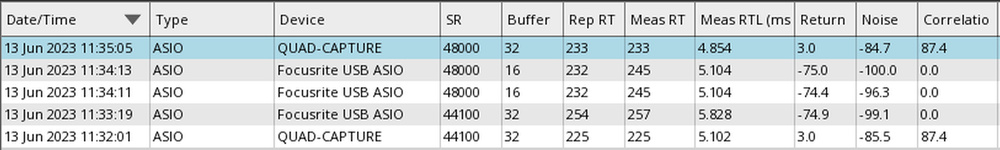

For additional peace of mind, we used a tool made by Oblique Audio that tests interface latency with a physical loopback. Round trip latency (RTL) is how the whole audio production industry evaluates interface performance. It's a relative measure, but since everyone runs the same test, the results are still comparable. In our case, the Roland is slightly faster, and its driver estimates its actual latency perfectly (Reported RT vs Measured RT, in samples), which our Scarlett can't do. However, since we use differential latency (the difference in signal delay between two loops), this shouldn't affect our results. The Scarlett's simplicity and flexibility outweigh its added interface latency.

Voicemeeter Software

Voicemeeter is audio software designed to route audio signals and add effects, giving you simple access to many powerful features on Windows. You can select which driver your sink device will use, send a source to multiple devices simultaneously, and request a specific audio buffer size. However, Voicemeeter adds complexity to our setup, and many settings need to be monitored and set appropriately.

After exploring what this software could add to our solution, we found that its benefits warranted the added complexity. The main benefit is with wireless headphones. We need to send the source to both the interface and the dongle, which use two different drivers, and Windows doesn't allow streaming audio to two audio devices simultaneously. Voicemeeter also shows which settings the Windows Driver Model (WDM) audio driver has been configured with, so we can request the KS and MME audio driver buffer sizes. We can also use it to duplicate the audio to multiple channels on the audio interface if needed. However, the absolute latency Voicemeeter introduces is large. It does allow the direct use of an ASIO interface, but the internal buffers it needs to run smoothly are at least 728 samples. As a result, the total latency is often similar to that of the default Windows driver.

The Need For ASIO4ALL

While enthusiasts often recommend ASIO4All to reduce latency, it's not easy to use. ASIO4All aims to replicate the benefits of a true ASIO driver by acting as a middleman. It shows up as your audio output device and gives you a GUI to select your actual physical interface or device. You can even select multiple devices and combine them into a bigger one. The real goal behind ASIO4All is to let non-ASIO devices work with software that supports ASIO, such as DAWs like Reaper. This exposes all the channels and lets ASIO4All bypass the Windows audio driver, offering much lower latency.

ASIO4All reduces latency significantly, so without it, the reported latency could be higher than what the headphones are truly capable of. Since ASIO4All also causes issues with Bluetooth dongles and devices, we have to use WDM for the Bluetooth devices we test either way. ASIO4All can also act as a variable buffer, giving us fine control to keep our relative latency well-balanced between the two loops.

Buffer Size, Sampling Rate, And Bit Depth

There are a few important metrics that we need to consider in our test, as they could impact latency:

- Buffer size: the number of samples the computer collects before processing incoming audio signals.

- Sampling rate: the number of samples per second that are taken of a waveform to create a discrete digital signal.

- Bit depth: determines the number of possible amplitude values for each audio sample.
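These definitions translate directly into arithmetic. The minimal sketch below treats a buffer's delay as exactly its own length, which is a simplification, since real driver stacks may hold more than one buffer. The function names are illustrative only.

```python
def buffer_duration_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Time needed to fill one buffer of `buffer_samples` at `sample_rate_hz`."""
    return buffer_samples * 1000.0 / sample_rate_hz


def amplitude_levels(bit_depth: int) -> int:
    """Number of distinct amplitude values a sample of `bit_depth` bits can hold."""
    return 2 ** bit_depth


if __name__ == "__main__":
    for rate in (44_100, 48_000):
        # 728 samples: the minimum Voicemeeter internal buffer cited in this article.
        print(rate, round(buffer_duration_ms(728, rate), 1))
    print(amplitude_levels(16), amplitude_levels(24))
```

At 48 kHz, a single 728-sample buffer already represents about 15.2 ms, and about 16.5 ms at 44.1 kHz. This is why small buffers matter when keeping the test's own overhead low.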

We want to keep buffer sizes small to minimize the latency our test adds artificially. However, a buffer that's too small significantly degrades audio clarity. Go too low, and you might get audio dropouts or robotic sounds.

Choosing the buffer size is fairly straightforward. Lowering it until there's a noticeable quality drop gives us the lowest possible latency while still letting us compare audio tracks in our DAW. Matching the ASIO4All and WDM buffer sizes when testing wireless headphones (Bluetooth or dongle) is also important.
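A toy model shows why matching buffers matters. This sketch assumes each buffer stage adds exactly its own length in delay. `buffers_in_path` is a hypothetical parameter counting how many such buffers each loop passes through. Any mismatch between the loops appears as a constant bias that gets wrongly attributed to the headphones.

```python
def mismatch_bias_ms(
    reference_buffer: int,
    dut_buffer: int,
    sample_rate_hz: int,
    buffers_in_path: int = 1,  # hypothetical: buffer stages per loop
) -> float:
    """Extra delay wrongly attributed to the headphones when the DUT loop's
    buffer is larger than the reference loop's (negative if it is smaller)."""
    extra_samples = (dut_buffer - reference_buffer) * buffers_in_path
    return extra_samples * 1000.0 / sample_rate_hz


if __name__ == "__main__":
    # Hypothetical mismatch: 512 samples on the headphone loop, 256 on the reference.
    print(round(mismatch_bias_ms(256, 512, 48_000), 1))  # 5.3
```

A mismatch of this size would add roughly 5.3 ms that has nothing to do with the headphones. That is far larger than the sub-millisecond precision the test otherwise achieves.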

As for sampling rate and bit depth, matching them avoids introducing conversion steps. For bit depth, Voicemeeter Potato always uses 24-bit, so we have no control over it. Our control over the sampling rate was very inconsistent. However, based on our testing so far, the impact should be negligible, so we don't currently plan to force any settings. The only directive is to make sure all loops share the same settings.

Apple AAC Latency

Advanced Audio Coding (AAC), the highest-quality codec that Apple products support, is often regarded as a better alternative to SBC and is expected to have latency at least as low as SBC's. Apple devices are known for supporting AAC, but other devices can support the codec as well.

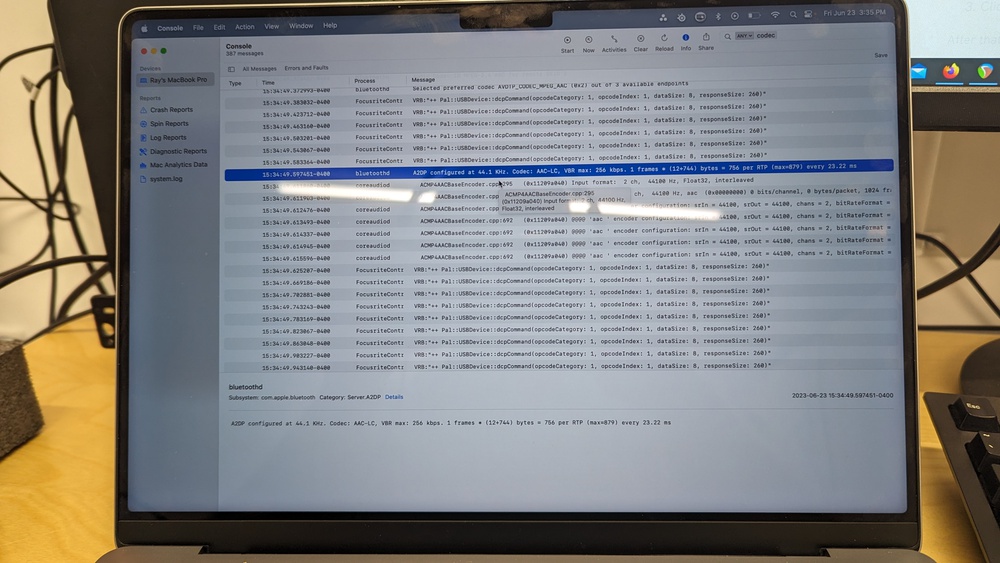

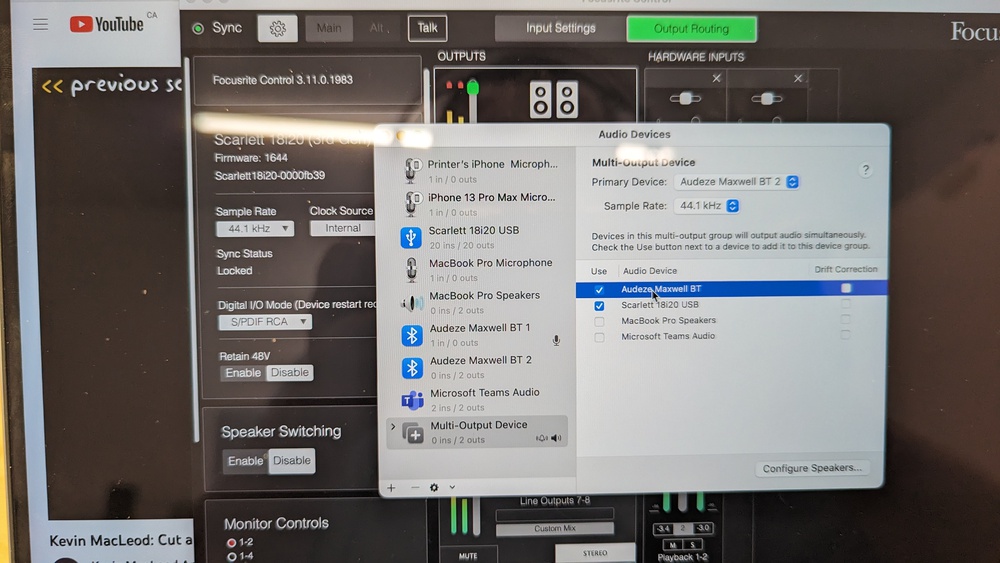

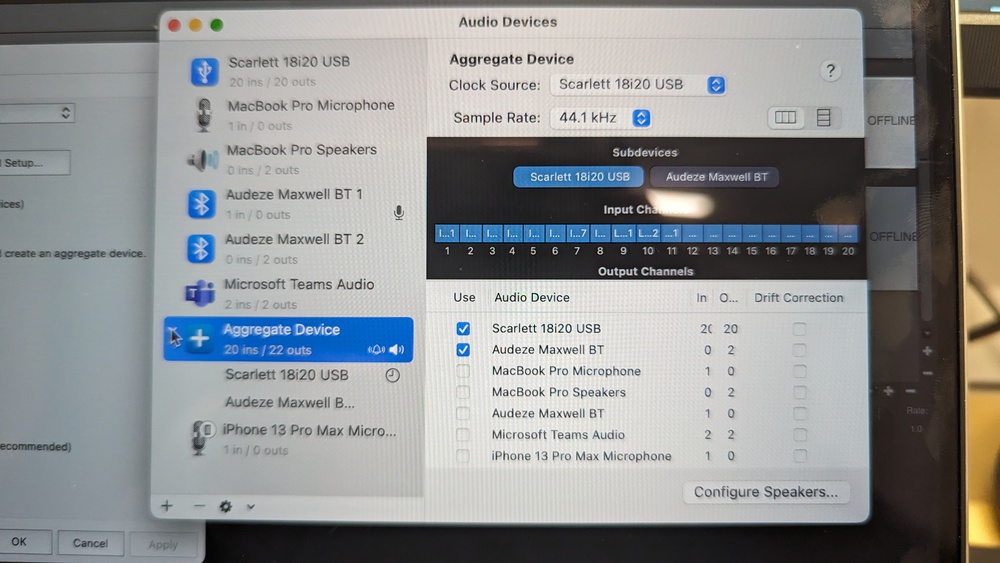

While Windows 11 introduced support for AAC, it uses xHE-AAC, and we had reservations about how it would compare to Apple's implementation. It's also very difficult to confirm that Windows 11 is actually using AAC. We bought an M1 Mac mini to replicate our setup on macOS, where a multi-output device can natively handle the task Voicemeeter performs on Windows. We took measurements with both GarageBand and Reaper on macOS.

Our results for the AirPods Pro (2nd gen) Truly Wireless were around 45 ms with AAC on macOS, compared to 160 ms with SBC on Windows 11. This difference is so large that we suspect audio compensation (sync) is happening in the multi-output device. We measured the same behavior with the Audeze Maxwell Wireless, which further reinforced our doubts about the results obtained on macOS.

This is enough for us to exclude AAC from our final solution. Showing AAC values that low on macOS would likely mislead readers into believing that AAC has far lower latency than any other available Bluetooth codec. For now, our AAC testing is limited. We'd need a deep dive into the macOS audio pipeline, its audio buffers, and its delay compensation to properly cover and understand AAC.

Solution

Our setup's consistency was a big focus. Previously, it wasn't uncommon for us to have to use an alternative setup to measure latency. For example, our methodology prescribed the Roland QUAD-CAPTURE (audio interface) and Reaper (audio workstation software). However, for some headphones, we took Bluetooth measurements by filming a YouTube video playing on the display. This was similar to how we measured Android and iOS latency, and it caused inconsistencies when comparing headphones across connection methods.

In addition, we're moving away from total latency and towards relative latency. It lets us better isolate the impact of our system. It's also for this reason that we're dropping Android and iOS latency testing.

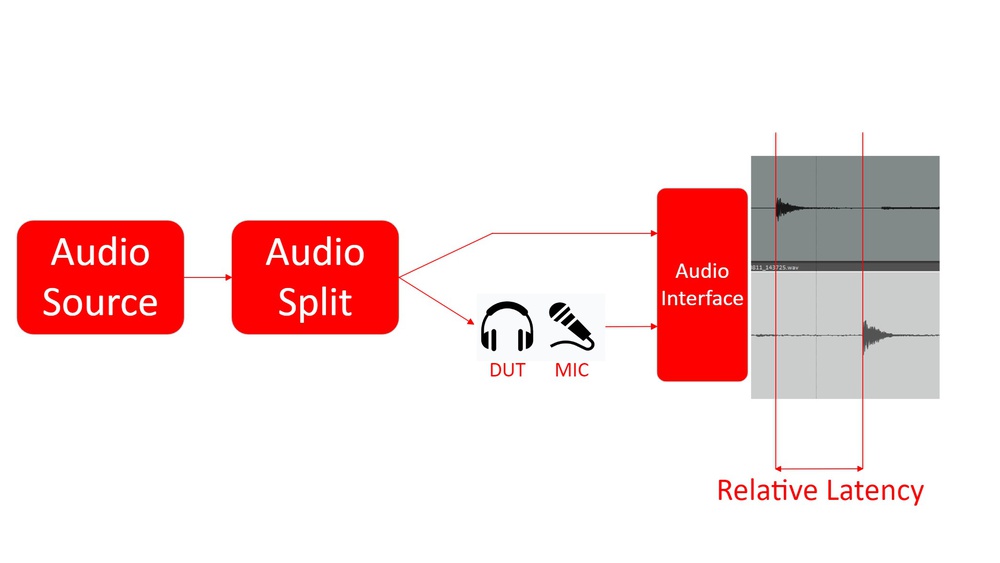

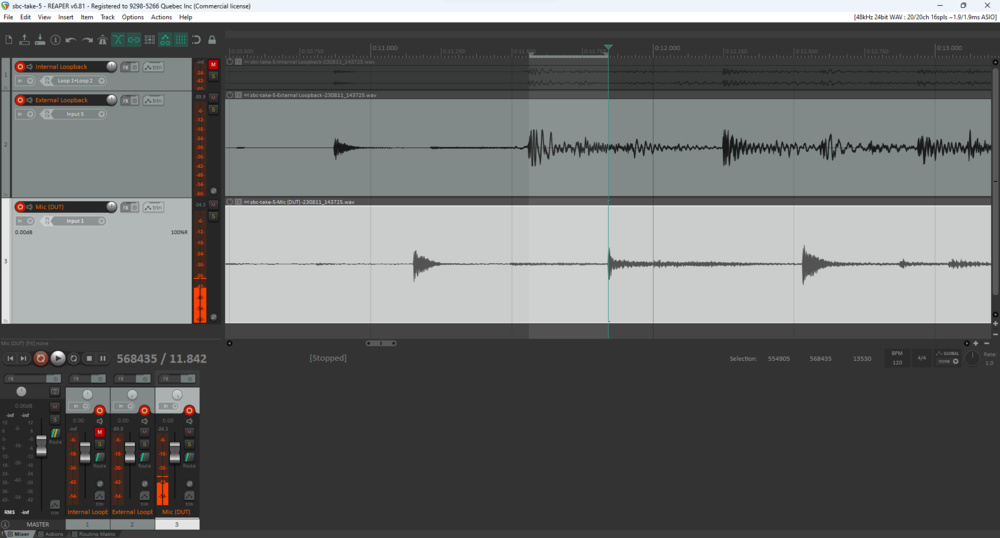

The general idea is to record two round trip latency (RTL) tracks: one goes through the headphones, and the other bypasses them. We can then compare their recorded waveforms and calculate the offset, which we attribute to the additional delay introduced by the tested headphones.
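The offset calculation could also be automated. The sketch below is an alternative to the manual waveform comparison in Reaper, not our actual workflow. It uses cross-correlation with NumPy to find the lag at which the two recorded tracks line up best. It assumes both tracks were captured in the same session at the same sampling rate. It also assumes a broadband test signal, since a pure periodic tone would produce ambiguous correlation peaks.

```python
import numpy as np


def estimate_offset_samples(reference: np.ndarray, dut: np.ndarray) -> int:
    """Lag of `dut` relative to `reference`, in samples.

    Positive means the signal through the device under test arrives later.
    """
    corr = np.correlate(dut, reference, mode="full")
    # In "full" mode, output index i corresponds to a lag of i - (len(reference) - 1).
    return int(np.argmax(corr)) - (len(reference) - 1)


def relative_latency_ms(reference: np.ndarray, dut: np.ndarray, sample_rate_hz: int) -> float:
    """Convert the estimated sample offset to milliseconds."""
    return estimate_offset_samples(reference, dut) * 1000.0 / sample_rate_hz


if __name__ == "__main__":
    fs = 48_000
    rng = np.random.default_rng(0)
    reference = rng.standard_normal(fs // 10)  # 100 ms of noise on the reference loop
    # Simulated DUT loop: the same noise delayed by 480 samples (10 ms at 48 kHz).
    dut = np.concatenate([np.zeros(480), reference])[: reference.size]
    print(relative_latency_ms(reference, dut, fs))  # 10.0
```

`np.correlate` works in the time domain and gets slow on long recordings. `scipy.signal.correlate(..., method="fft")` paired with `scipy.signal.correlation_lags` is a faster option. A negative result means the headphone loop arrived before the reference loop, which the fast reference path is designed to prevent.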

With this approach, the biggest challenge is splitting the audio between two 'devices', especially when they don't share the same connection type. We also need to make sure our reference path is as fast as possible. Otherwise, the signal through passive headphones could arrive first, giving us negative latency numbers.

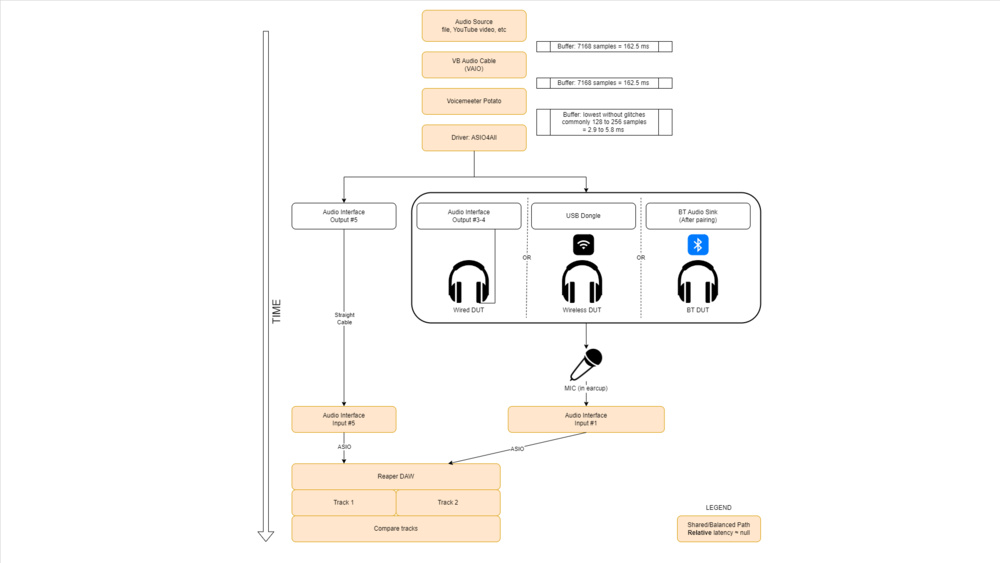

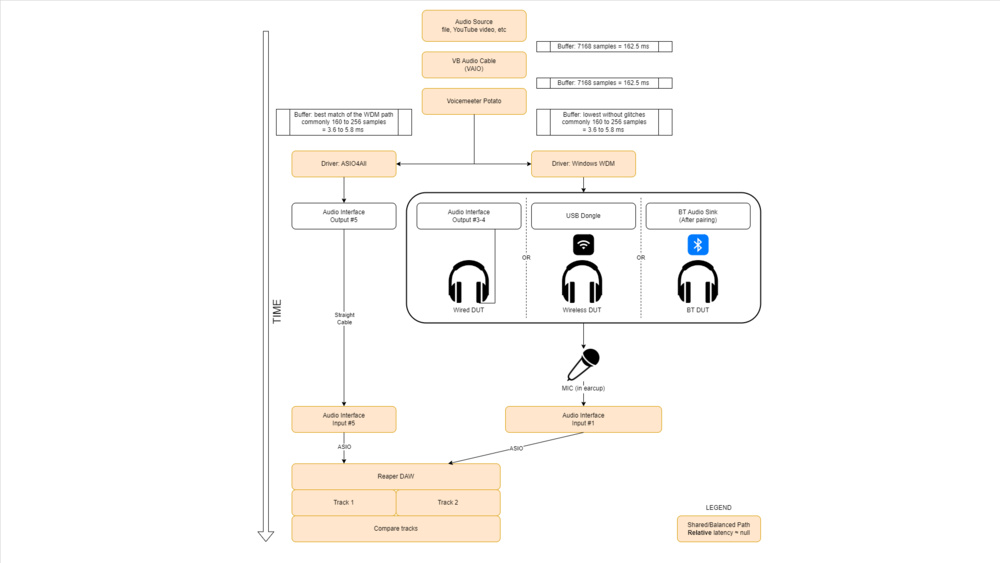

Our final setup uses a combination of Voicemeeter, ASIO4All, an ASIO Interface, and Reaper (DAW). In our case, the ASIO interface (also called Audio Interface below and in the images) is the Focusrite Scarlett 18i20 (3rd gen).

However, some devices (mostly Bluetooth) struggle to play nicely with ASIO4All. In those cases, we use a slightly modified setup.

The difference between the main and alternate setups with the same headphones was negligible in our testing (below 0.3 ms every time). Therefore, we can switch to the alternate setup if we run into issues with a product during testing.

The recordings might also differ between the loops. One path goes through a microphone input, while the other is sent directly to the sound card, which may involve less processing. If so, this would slightly inflate all our numbers. We tested this by sending a feedback loop (a signal we can monitor) through a Rode VXLR+ into the Mic 2 input. Latency variations were under 0.1 ms. We therefore concluded that the mic preamp of the Scarlett 18i20 (3rd gen) isn't slow enough to affect our results, even for passive wired headphones.

Both setups let us monitor and try to match the buffer sizes at various points in the chain, so that the relative latency reflects only the tested headphones.

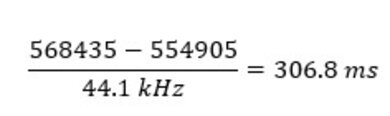

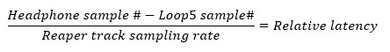

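Once both tracks are recorded, we compare the waveforms in Reaper and find the sample number of a matching point on each track. Knowing the sampling rate of the recording, we can calculate the time difference as follows:

relative latency (ms) = (sample number on the headphone track - sample number on the reference track) / sampling rate (Hz) × 1,000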

In the above example, the relative latency would be:

It's important to understand that we measure relative latency. The previous diagrams illustrate relative latency by isolating the difference between both paths, which reveals the delay of the headphones under test. This may look like the headphones' absolute latency. However, measuring true latency would require knowing every delay in the chain and being able to offset it. We don't know the exact latency of the interface (for example, how much of it comes from playback vs. recording). The biggest unknown is the driver stack. We may know the buffer size, but how efficiently the headphones consume it can vary slightly based on driver flags or other configurations.

Our results only indicate how the headphones behave under Windows in our test conditions. We're also confident that our solution is generic enough to avoid any optimizations by manufacturers that make both the headphones and the software/hardware used with them. This makes results more consistent. However, it also prevents us from showing the lower numbers many users may observe, for example with iPhones and the Apple AirPods Max Wireless, Galaxy phones and the Samsung Galaxy Buds Live Truly Wireless, or Xperia phones and the Sony WH-1000XM5 Wireless.

Not accounting for this potential optimization in our methodology doesn't mean that our results are invalid, but simply that some headphones could behave differently under different conditions. For example, Sony headphones, based on the testing of u/aborne25 (see below in the data analysis section), appear to have significantly lower latency when used with a Sony source (Xperia phone). This is what we mean by relative latency. It's relative to our setup and to our device.

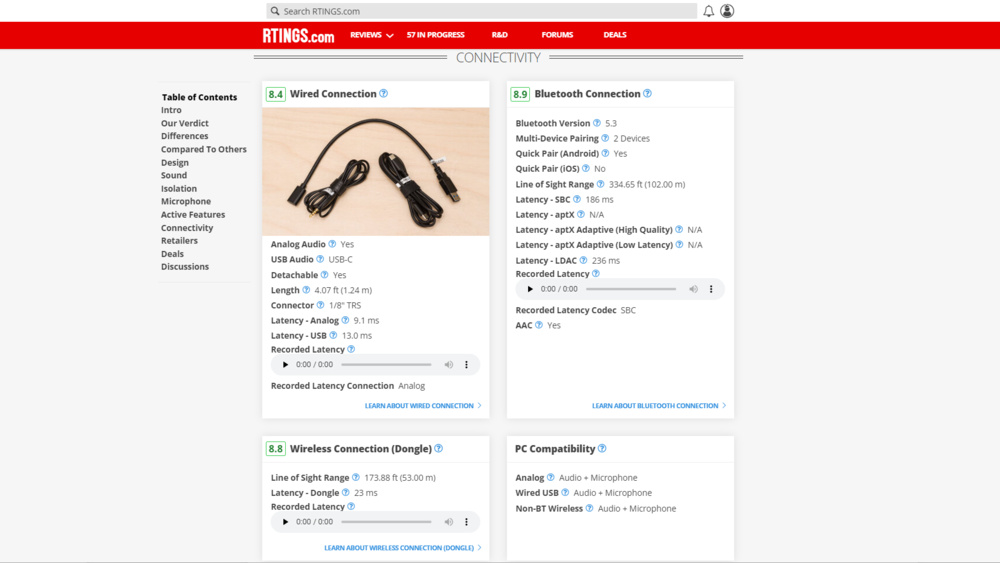

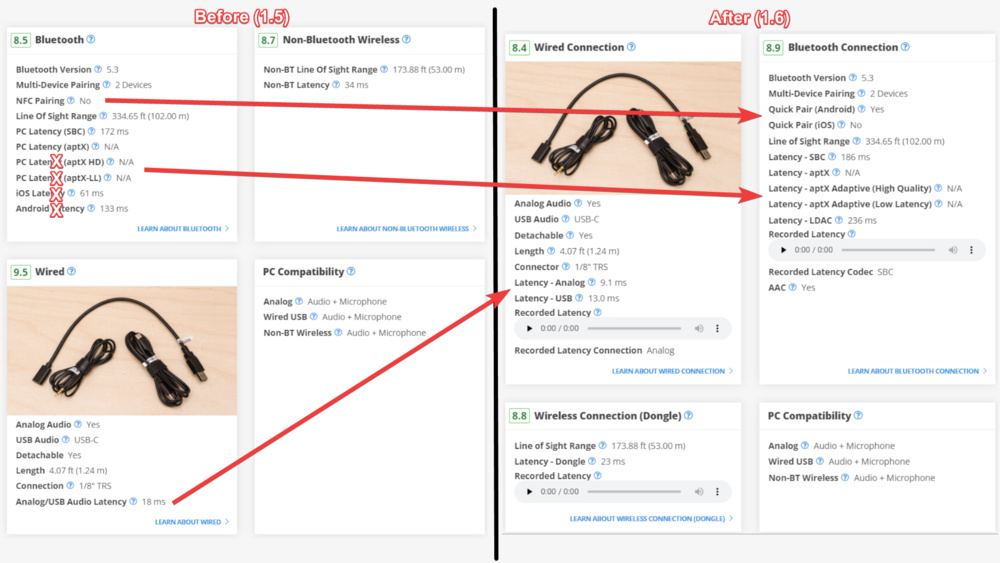

Moving forward, we've made changes in our reviews to integrate this new solution:

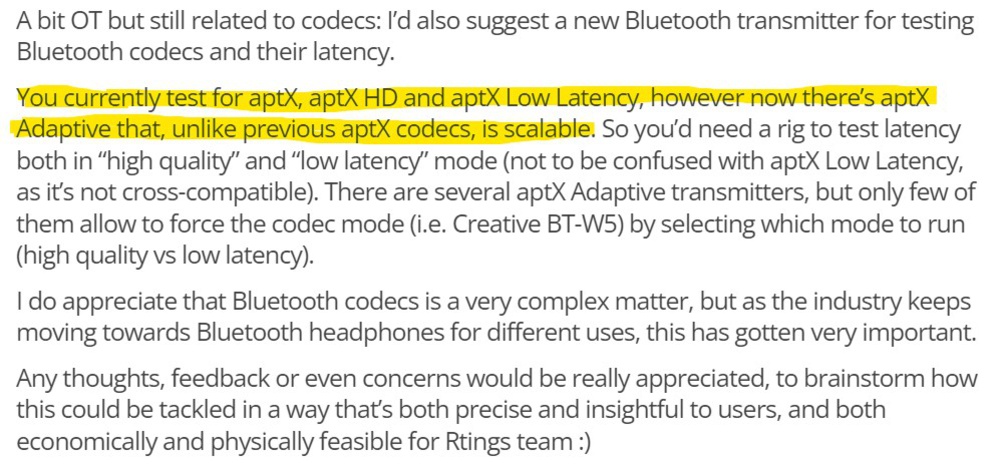

Notable changes are:

- Dropped aptX HD, replaced by aptX Adaptive (High Quality)

- Replaced aptX-LL with aptX Adaptive (Low Latency)

- Added LDAC

- Split Analog/USB into two tests

- Added audio samples of recorded latency

- Replaced NFC Pairing with Quick Pair (for both Android and iOS)

How Our Results Compare To Testing Done By Others

Although very few people measure headphone latency and share their results, the few instances where they do are worth looking at. They let us see how the trends in our measurements compare to those of others.

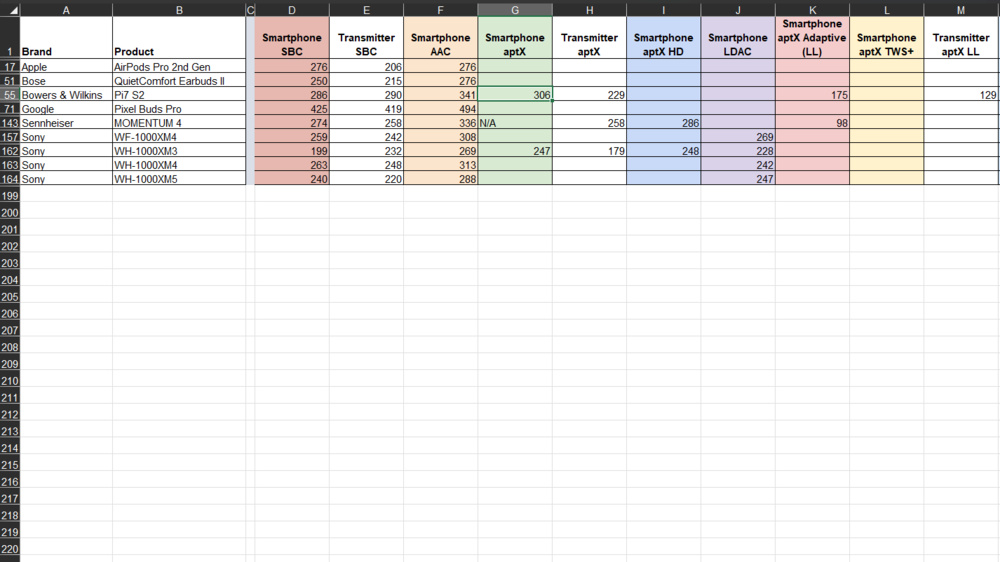

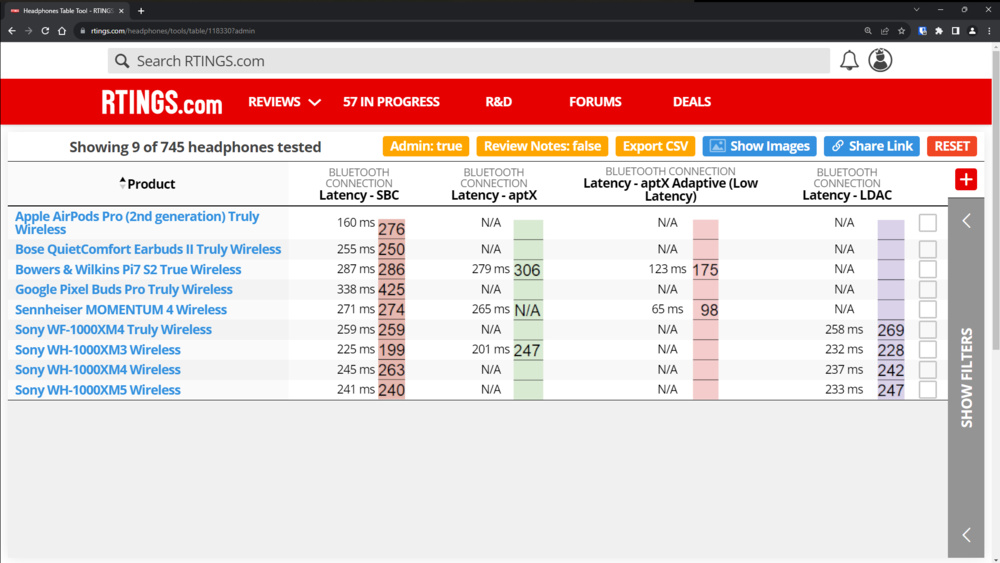

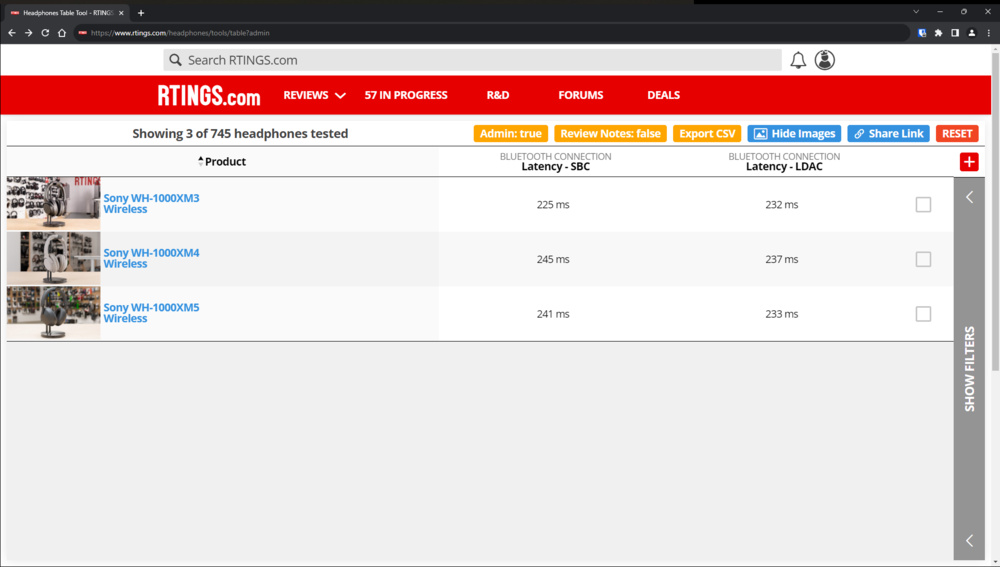

User aborne25 from Reddit detailed their methodology and clearly explained their process for testing Bluetooth headphones. From the products we initially measured, we had the following products in common:

- Apple AirPods Pro (2nd Generation) Truly Wireless

- Bose QuietComfort Earbuds II Truly Wireless

- Bowers & Wilkins Pi7 S2 True Wireless

- Google Pixel Buds Pro Truly Wireless

- Sennheiser MOMENTUM 4 Wireless

- Sony WF-1000XM4 Truly Wireless

- Sony WH-1000XM3 Wireless

- Sony WH-1000XM4 Wireless

- Sony WH-1000XM5 Wireless

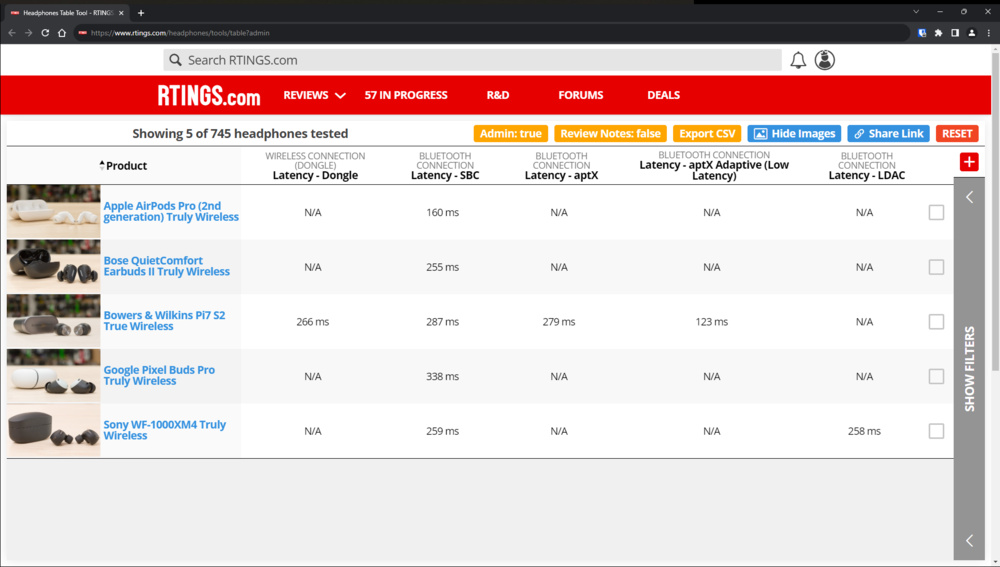

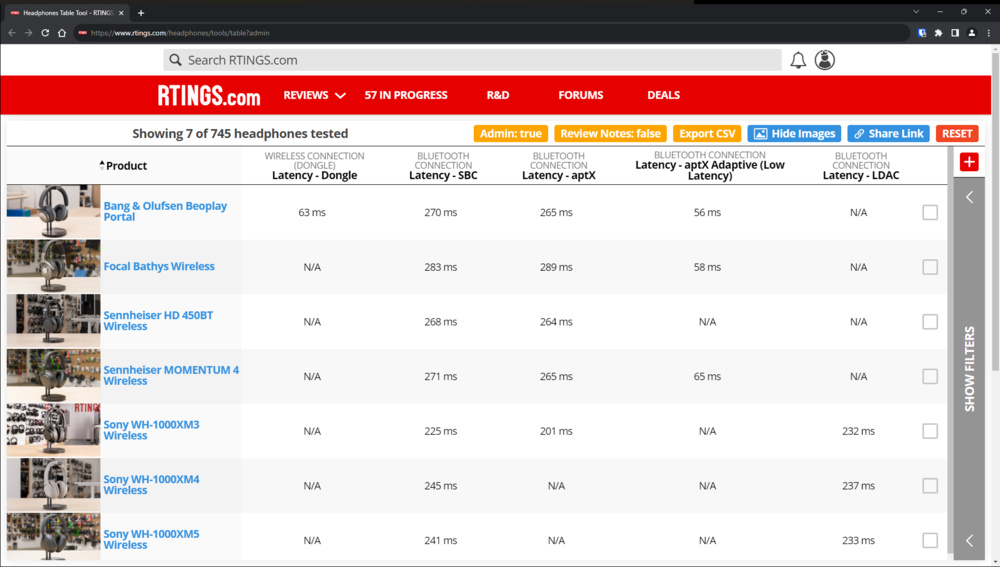

If we isolate these products, here are the results gathered by aborne25:

The methodologies aren't directly comparable, but aborne25's smartphone codec measurements can confirm whether our trends are in line with other approaches and conclusions. We use their smartphone results because they include additional measurements over a phone, such as the Sennheiser MOMENTUM 4 Wireless' aptX Adaptive (Low Latency) results, alongside those done with a transmitter. Here's an overview comparing aborne25's smartphone results to our results under our new methodology:

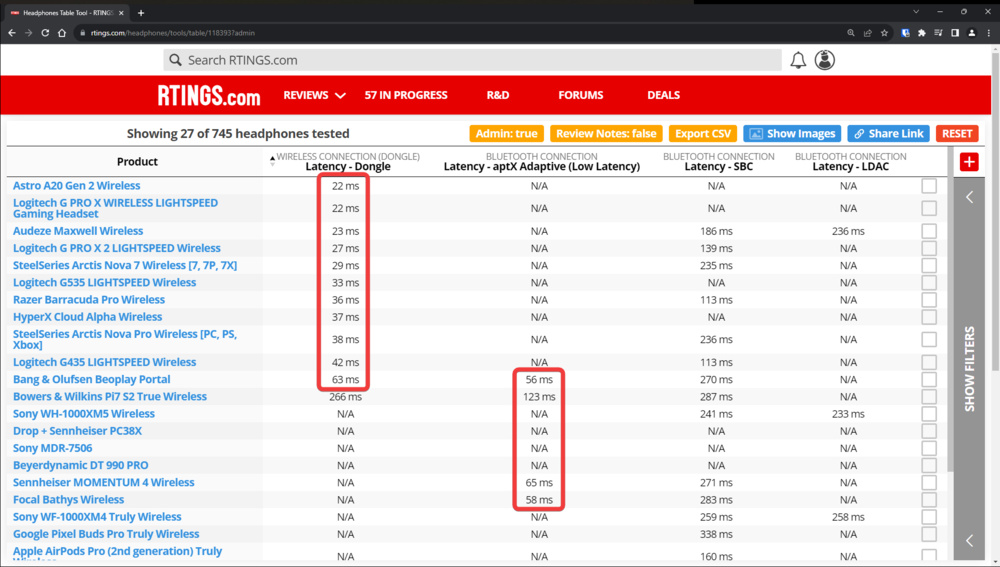

The trends are mostly comparable. There are differences in values, but the broader conclusions are the same; for example, we have the same worst offenders. For SBC, the Google Pixel Buds Pro Truly Wireless are terrible. Our aptX Adaptive (Low Latency) results appear to be lower, perhaps because the newer BT-W5 dongle we use is more efficient than the smartphone. Supporting this, our Low Latency numbers match their transmitter results for the Bowers & Wilkins Pi7 S2 True Wireless.

What's critical is that the overall order of headphones appears to be very well-matched. There are some discrepancies (especially with Sony products, since they tested with a Sony phone), which could include ecosystem optimizations. However, going back to relative latency, we emphasize that our results only apply to our setup and provide a general indication of the relative performance users can expect with their setup.
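One way to put a number on how well two orderings agree is Spearman's rank correlation. A value of 1.0 means identical order, and -1.0 means fully reversed. We didn't publish such an analysis; this is only a plain-Python sketch of the technique. The example latencies are placeholders, not our results or aborne25's.

```python
def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the two rank lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when all values in a list are equal")
    return cov / (var_x * var_y) ** 0.5


if __name__ == "__main__":
    # Placeholder latencies (ms) for the same four products from two sources.
    ours = [60.0, 75.0, 120.0, 250.0]
    theirs = [70.0, 90.0, 110.0, 300.0]
    print(spearman_rho(ours, theirs))  # 1.0: identical ordering
```

Because it only compares ranks, this measure ignores the absolute offsets between setups. That makes it a fit for the "same order, different values" pattern described above.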

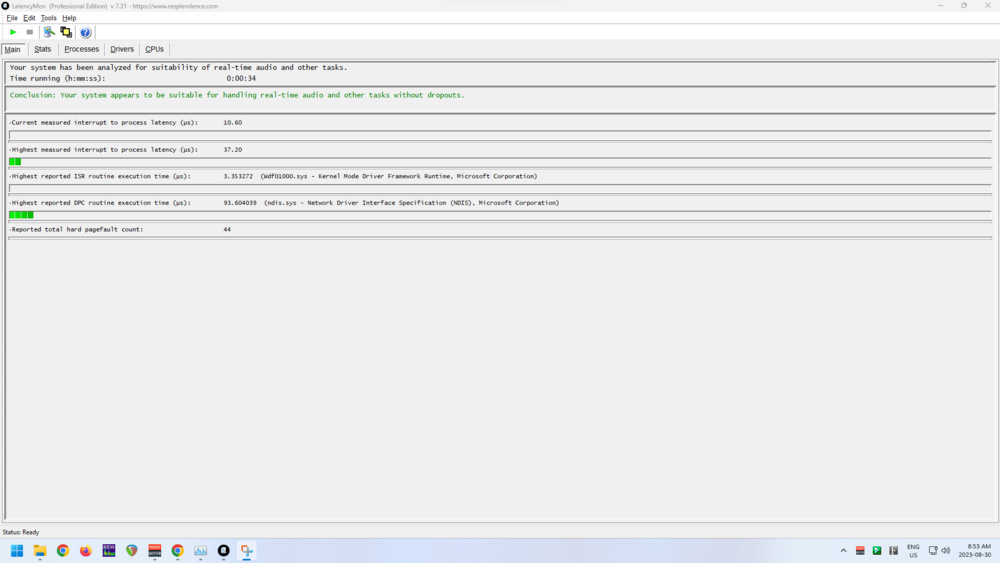

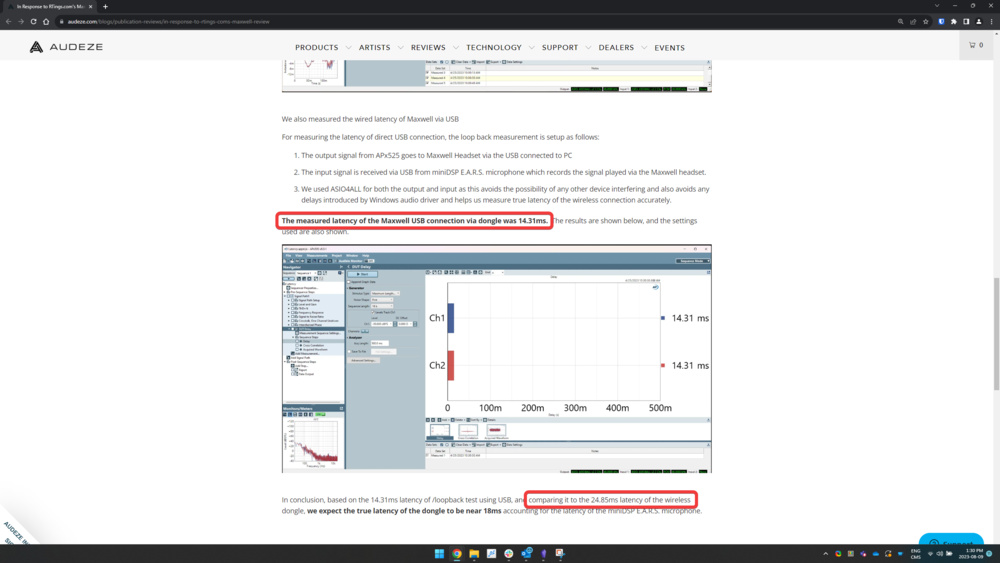

Another example of comparing our results to others is our interaction with Audeze. They published their internal results for their Maxwell headset, so we can see how our results compare to theirs. We're using a similar approach but with different hardware.

They measured 14.31 ms of latency via wired USB and 24.85 ms via wireless dongle. However, those values include recording latency, so they believe the headset's actual latency is a little lower.

We measured 13 ms via wired USB and 23 ms via wireless dongle, which aligns with their results. Because our results are relative latency, the recording latency is already subtracted from our measurements, since it's shared between the two loops.
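The comparison comes down to subtraction. This sketch reuses the published and measured figures from this section. It only computes the gaps and doesn't try to attribute them to any specific stage of either setup.

```python
AUDEZE_PUBLISHED_MS = {"wired USB": 14.31, "wireless dongle": 24.85}
OUR_RELATIVE_MS = {"wired USB": 13.0, "wireless dongle": 23.0}


def gaps_ms(published: dict[str, float], measured: dict[str, float]) -> dict[str, float]:
    """Published minus measured latency per connection, rounded to 0.01 ms."""
    return {conn: round(published[conn] - measured[conn], 2) for conn in published}


if __name__ == "__main__":
    print(gaps_ms(AUDEZE_PUBLISHED_MS, OUR_RELATIVE_MS))
    # {'wired USB': 1.31, 'wireless dongle': 1.85}
```

Both gaps are positive and under 2 ms. This is the direction you'd expect if Audeze's figures include some recording latency that our relative measurement removes.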

Is SBC The Same On All Devices?

The average SBC latency of truly wireless earbuds is 259.8 ms. However, this average is based on only five products, one of which is noticeably higher than the others.

In comparison, the average SBC latency of the seven non-gaming, non-earbud headphones we've tested so far is 257.6 ms. We wouldn't consider this any better than the truly wireless results, though. A difference of about 2 ms at latencies this high is irrelevant to the user experience. It isn't noticeable in any kind of content, whether you're gaming or watching videos.

The six gaming headsets we've retested so far have an average SBC latency of 170.3 ms, which is a noticeable improvement over the other types of headphones.
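Putting the three averages side by side makes the size of each gap explicit. This sketch only reuses the averages quoted above. Each group contains only five to seven products, and the earbuds include one outlier, so these are rough indications rather than statistically robust differences.

```python
SBC_AVERAGE_MS = {
    "truly wireless": 259.8,  # 5 products
    "non-gaming, non-earbuds": 257.6,  # 7 products
    "gaming headsets": 170.3,  # 6 products
}


def gap(baseline_ms: float, other_ms: float) -> tuple[float, float]:
    """Absolute gap (ms) and the gap as a percentage of the baseline."""
    diff = baseline_ms - other_ms
    return round(diff, 1), round(100.0 * diff / baseline_ms, 1)


if __name__ == "__main__":
    avg = SBC_AVERAGE_MS
    print(gap(avg["truly wireless"], avg["non-gaming, non-earbuds"]))  # (2.2, 0.8)
    print(gap(avg["non-gaming, non-earbuds"], avg["gaming headsets"]))  # (87.3, 33.9)
```

The earbud vs. headphone gap is under 1% of the baseline, while the gaming headsets average roughly a third lower. This is why only the latter difference is treated as meaningful.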

We can't conclude that earbuds are necessarily worse than conventional Bluetooth headphones. However, SBC latency does seem noticeably better on products from gaming brands, though we don't yet know how they improve so much over SBC. Not all gaming products perform that well over SBC, so this is one area where our reviews let you compare products. That said, if you're a gamer, SBC may not matter to you, since you're more likely to use the dongle, which generally ensures lower latency. It could be important if you're a mobile gamer, including on the Nintendo Switch.

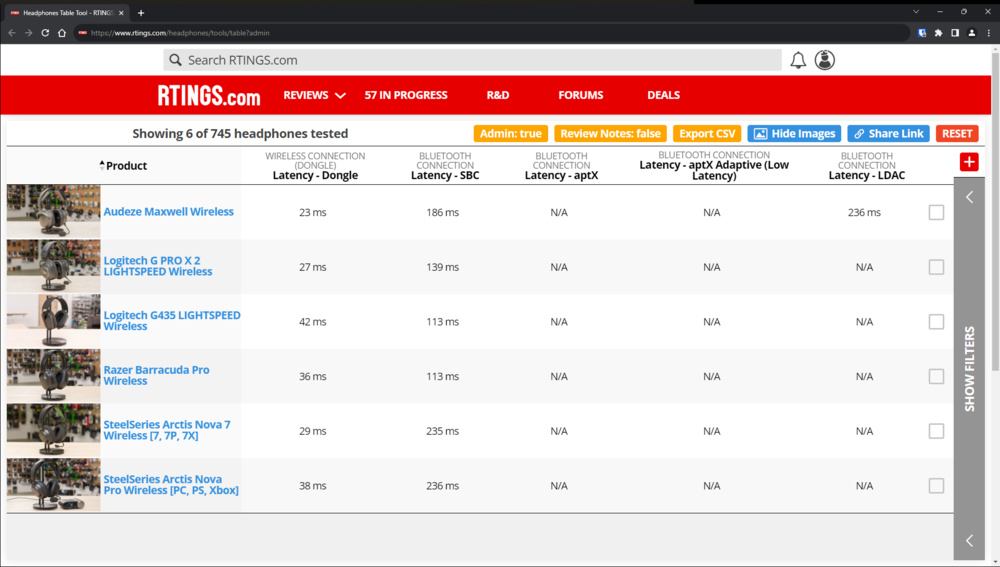

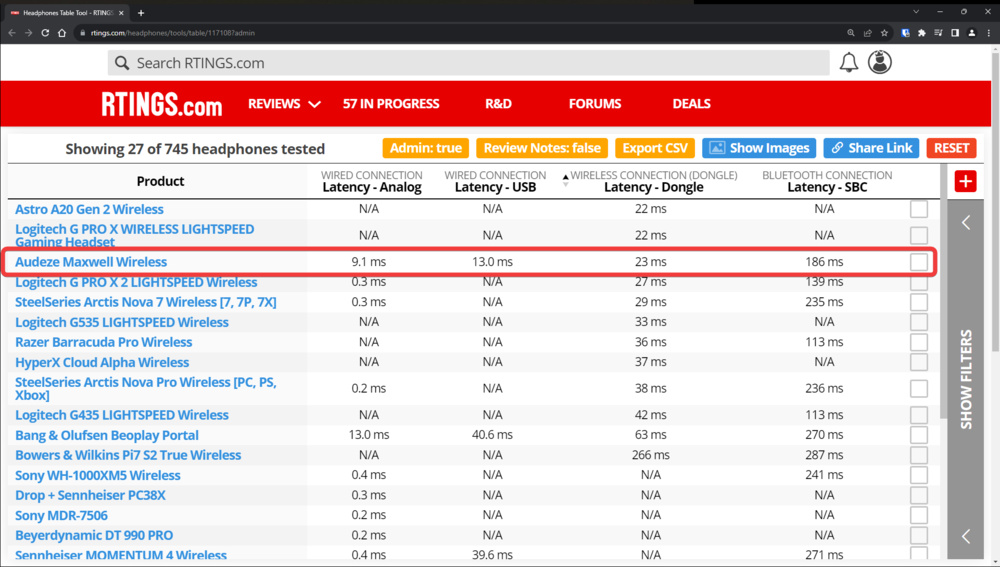

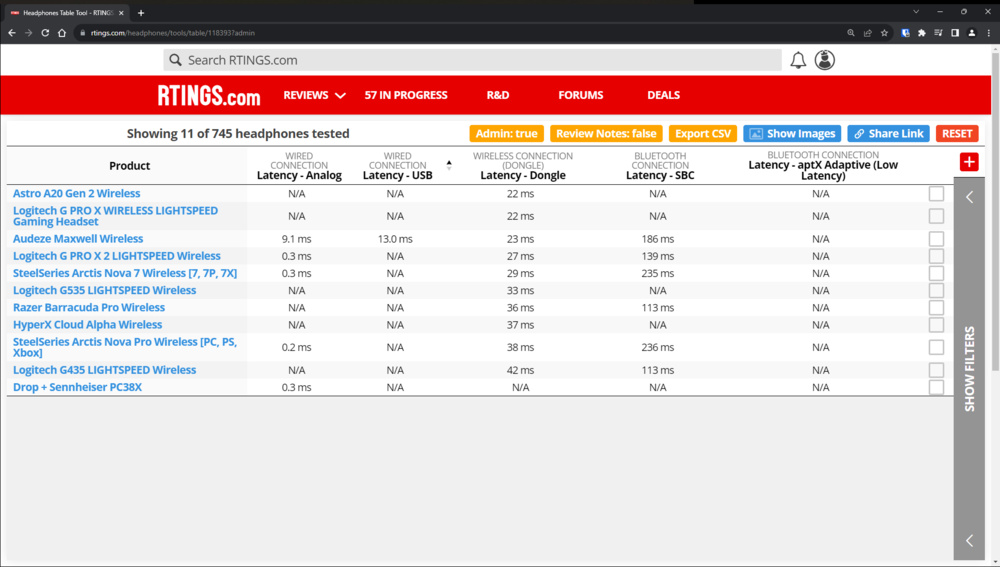

Which Gaming Headset Has The Lowest Relative Latency Over Dongle?

The affordable Astro A20 Gen 2 Wireless, the high-end but older Logitech G PRO X WIRELESS LIGHTSPEED, and the flagship Audeze Maxwell Wireless all perform about the same. All the other gaming headsets we tested so far also perform well.

We don't consider the spread from 22 ms to 42 ms significant enough to exclude any of these from a recommendation. Even if you care about low latency, all the headsets in this list are great at keeping your audio and visuals in sync. That said, a passive headset usually has minimal delay, as long as the audio interface driving it also has low latency.

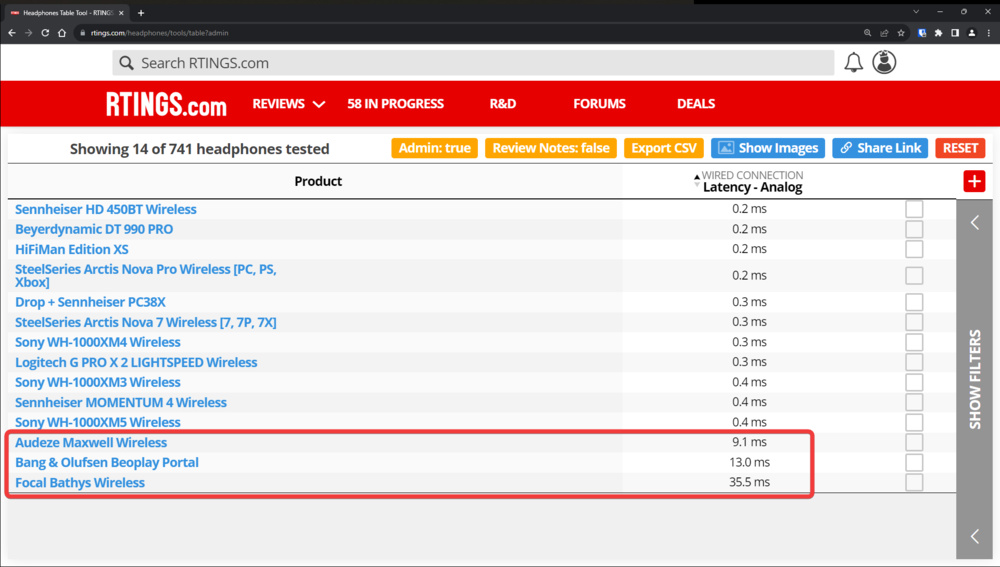

Why Are Measured Latencies With Analog Headphones So Different?

The 'Latency – Analog' measurement is an easy way to identify which headphones are truly passive over an analog connection. For those over 1 ms, we usually have to hold the power button to turn them on before audio can be heard. In active headphones, the signal makes an extra pass through an analog-to-digital converter, optional digital signal processing, and a digital-to-analog converter, which adds latency. This potential signal processing explains analog measurements above 1 ms, like the Focal Bathys Wireless, which have 35.5 ms of latency via this connection. The Bathys may be especially slow because of the complexity of its processing.

Keeping latency this low is critical for audio production, especially if you do live recording and hope to send feedback to the performer. It's also a great way for gamers to maintain low latency, as long as the interface you're using is faster than a USB or dongle connection.

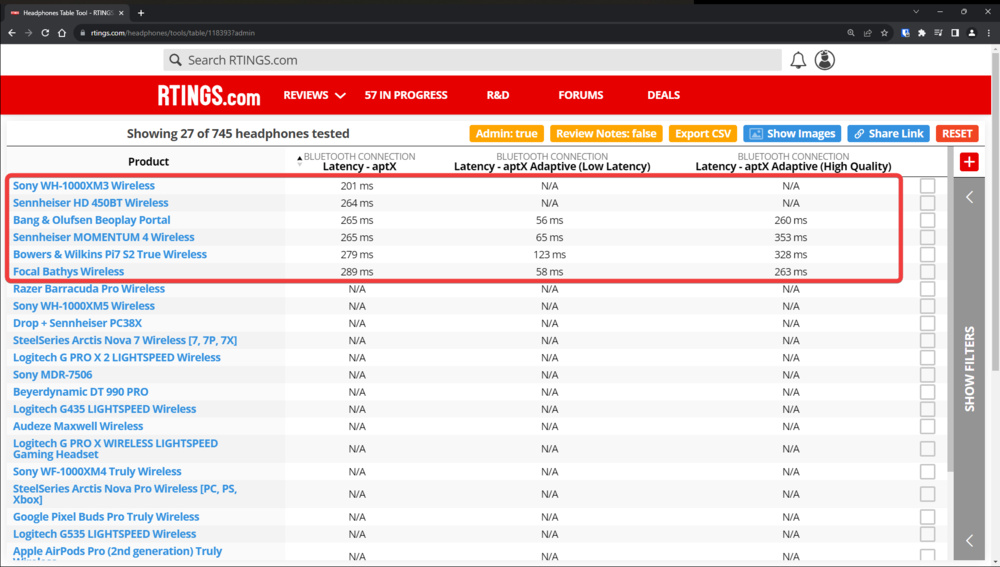

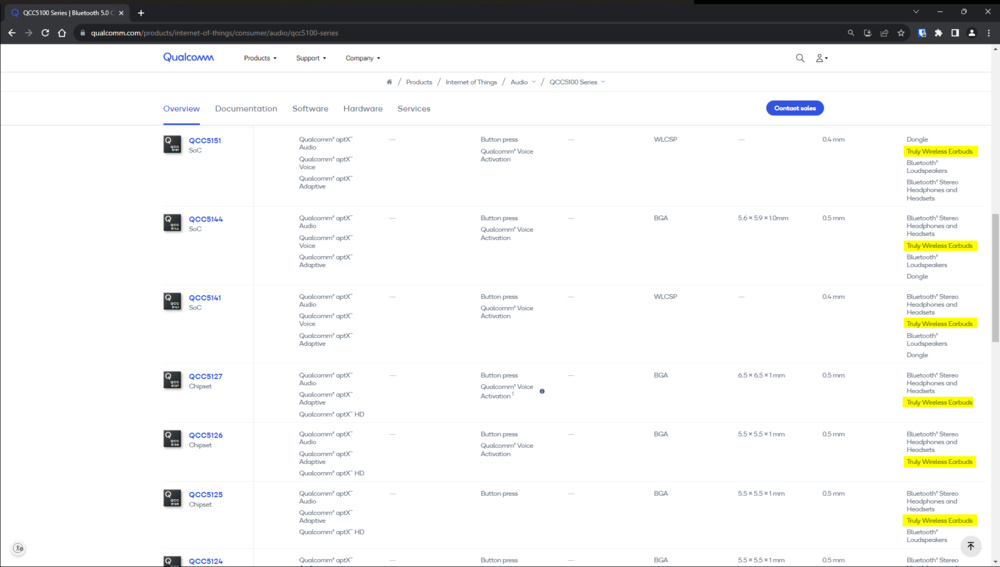

Is aptX Consistent?

Out of the 27 products we've retested as of now, six support a form of aptX. They're all conventional over-ear headphones. Almost no truly wireless (TWS) earbuds support aptX. This may be due to the current Qualcomm chip required, which is too big or power-hungry for this type of design. However, some of their newer chip designs may be integrated into TWS, so we might see more in the future.

Looking at the results, we can say that, in general, most implementations of aptX (any form) should be reasonably consistent and predictable, unless a specific manufacturer implements it poorly or if Qualcomm misses the mark with one of their chips.

Is Bluetooth Unacceptable For Low-Latency Use?

If you can enable a low-latency codec like aptX Adaptive (Low Latency), you can generally get really good performance. The exception is the Bowers & Wilkins Pi7 S2 True Wireless, which may not prioritize low latency in order to keep good audio quality. Latency may still be about twice that of a wireless dongle implementation, but under 80 ms is appreciably low.

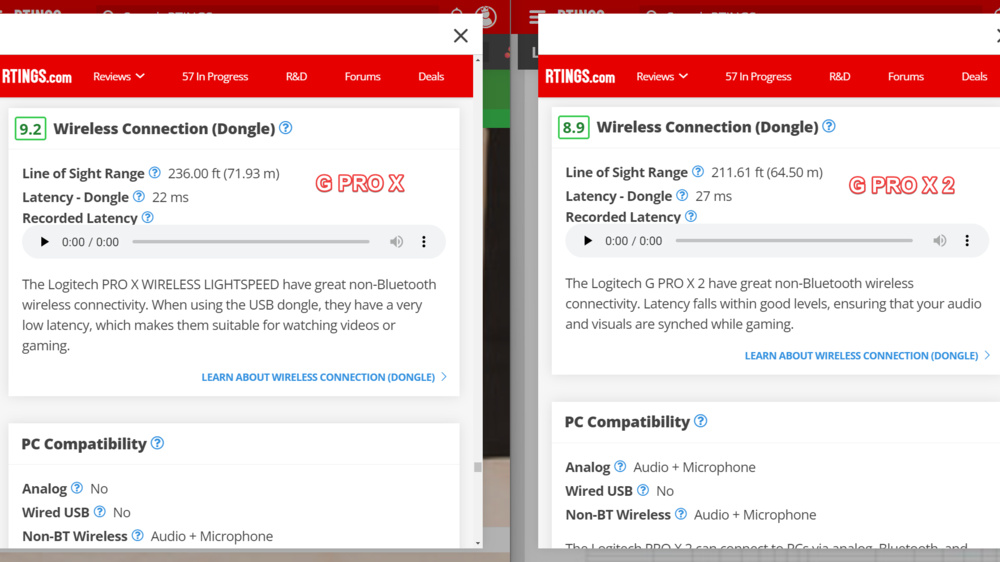

Have There Been Improvements Between The Logitech G Pro X Generations?

The G PRO X has a slightly lower latency of 22 ms, versus 27 ms for its successor, the G PRO X 2. However, the measurements are too close to consider this a regression. Both dongles appear to use 16-bit audio. The X 2 has more features (custom EQs, DTS Headphone:X 2.0), which could explain its higher latency, but the difference is so small that it's not worth investigating further. Both headsets should be considered equivalent in terms of latency.

Has There Been An Improvement Between Sony WH-1000XM(x) Generations?

There's a slight SBC latency regression from the XM3 to the XM4, and then a minor improvement from XM4 to XM5. As for LDAC, it is consistent across the three generations.

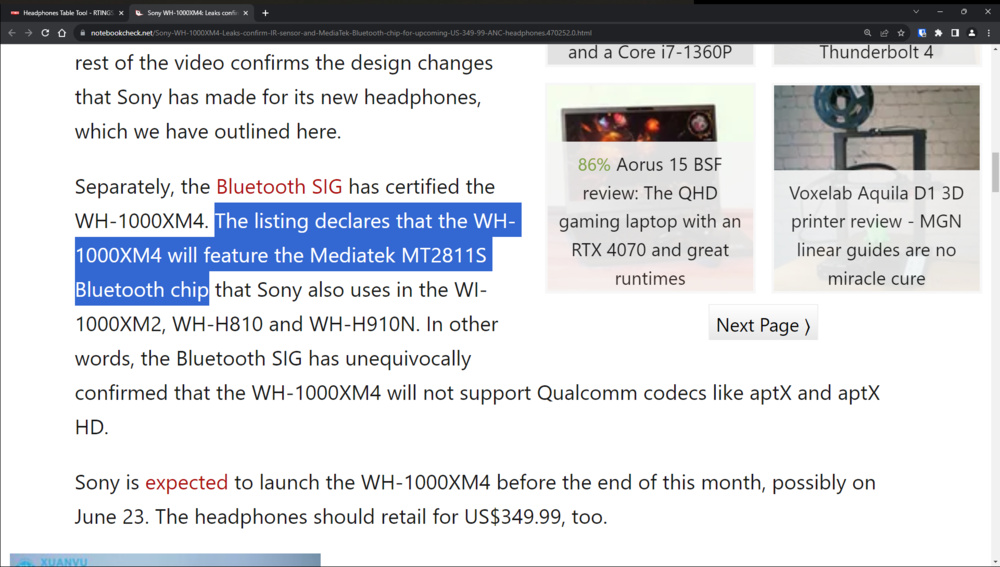

The XM4's slightly higher latency appears to coincide with aptX support being dropped on these headphones. aptX is exclusive to Qualcomm chips, and it appears that Sony changed to a different chip, based on their marketing.

Do Google Products Struggle With Latency?

The Google Pixel Buds Pro Truly Wireless' measured latency is high, but in most scenarios, this shouldn't be a problem. Music and podcasts have no video to sync with, and apps like YouTube can compensate for latency by delaying the video to keep it in sync.

However, for mobile gamers, it can be very noticeable. As a test, we tried Subway Surfers on two phones (Samsung Galaxy S10e and Google Pixel 5). In both cases, the latency was noticeable enough to be annoying while gaming.

We wish there were more products from Google to establish if it's a common issue with their products, but they currently have only two products in their lineup: the Pro and the A-Series. We haven't retested the A-Series yet, but we'll keep an eye out for any improvements on that front with future reviews of Google headphones.

Precision And Consistency Between Passes

The test is extremely precise (<1.0 ms) and consistent, as long as some important rules are followed. Resetting the audio connections (in ASIO4All and Voicemeeter) right before taking a measurement is necessary, since failing to do so can cause an error of about +50 ms. SBC appears to be the most sensitive to this, while wired and dongle results are almost unaffected.
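A forgotten reset shows up as a large, one-sided jump rather than noise, so repeated passes make it easy to catch. Below is a minimal sketch of such a check, not a tool we describe using. It assumes at least three passes per connection and a hypothetical 1 ms tolerance chosen to match the precision quoted above. The pass values are made up.

```python
from statistics import median


def flag_suspect_passes(passes_ms: list[float], tolerance_ms: float = 1.0) -> list[int]:
    """Indices of passes that deviate from the median by more than tolerance_ms."""
    if len(passes_ms) < 3:
        raise ValueError("need at least three passes for a meaningful median")
    center = median(passes_ms)
    return [i for i, value in enumerate(passes_ms) if abs(value - center) > tolerance_ms]


if __name__ == "__main__":
    # Hypothetical SBC passes; the third simulates a skipped connection reset.
    sbc_passes = [160.2, 160.5, 210.4, 160.3]
    print(flag_suspect_passes(sbc_passes))  # [2]
```

The median is used instead of the mean because a single +50 ms error would drag the mean enough to hide which pass is wrong.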

PC Use Might Limit Compatibility With Software And Apps

Because we test using a PC, we may be unable to take advantage of apps the product may enable when paired to a phone, for example. This can cause some differences between our measurements and the actual experience when using the headphones connected to an optimized source like a phone of the same brand as the headphones.

Dependency On Our Setup

Our final solution produces results that are only applicable using our hardware/drivers. This means a different setup could get different results that may not translate to all headphones; a user getting a latency of +12% on headphones A cannot assume headphones B would also be +12%. We do relative latency to try to isolate this risk as much as possible, but we can't state that our results will scale to smartphones, for example.

Conclusion

These results are more accurate than in our previous methodologies. They're also in line with what others have measured, and we've even identified headphones with problematic latency, matching community expectations and observations. Overall, our own data is more comparable, so you have a good idea of what to expect with your own unit. You can also be more confident in how the latency measurements stack up to real-life use.

Of the two key objectives identified in our proof of success, we succeeded in matching Audeze's Maxwell numbers. As for passive latency, we judged validation with an oscilloscope unnecessary once we achieved sub-1 ms measurements without any negative results.

We couldn't add comparable AAC latency measurements because they'd require a different setup. We think it's safer to omit them until we understand the codec well enough.

From the developed solution, here is the list of pros and cons of these changes:

+ Recorded audio samples allow you to hear the latency for yourself.

+ Stable results.

+ Precise enough to show analog latency.

+ Our relative latency numbers correlate well with the best-case scenario absolute latency.

+ Added LDAC latency.

+ Added aptX Adaptive (High Quality and Low Latency) latency.

+ Results are predictable. Helps identify erroneous measurements in cases of setup error.

- No AAC latency measurements.

- Removed iOS/Android latency-specific measurements. They were judged too dependent on the phone model.

- Some codecs are still not covered, either due to a lack of transmitters for PC or intentionally, because newer codec implementations are starting to replace older ones:

  - LHDC/LLAC

  - LC3/LC3plus

  - aptX HD (Adaptive implementation is now prioritized over aptX HD)

  - aptX-LL (superseded by Adaptive)

  - aptX Lossless

  - Samsung Scalable Codec

Sources

[1] Notebookcheck, "Sony WH-1000XM4: Leaks confirm IR sensor and MediaTek Bluetooth chip for upcoming US$349.99 ANC headphones", 15 May 2020. [Online] https://www.notebookcheck.net/Sony-WH-1000XM4-Leaks-confirm-IR-sensor-and-MediaTek-Bluetooth-chip-for-upcoming-US-349-99-ANC-headphones.470252.0.html

[2] Reddit, aborne25, "Let's talk about the audio latency on Bluetooth headphones". [Online] https://www.reddit.com/r/headphones/comments/ugrsea/lets_talk_about_the_audio_latency_on_bluetooth/

[3] Audioblog, "xHE-AAC Audio Codec now in Windows 11", 20 October 2022. [Online] https://www.audioblog.iis.fraunhofer.com/xhe-aac-windows11

[4] SoundGuys, "Android's Bluetooth latency needs a serious overhaul", March 2021. [Online] https://www.soundguys.com/android-bluetooth-latency-22732/

[5] Audeze, "In Response to RTings.com's Maxwell Review", 25 April 2023. [Online] https://www.audeze.com/blogs/publication-reviews/in-response-to-rtings-coms-maxwell-review

[6] RTINGS.com, Omelette, "Testing for audible wireless codec compression", 3 May 2023. [Online] https://www.rtings.com/discussions/GkuuGaPto5SntJsp/testing-for-audible-wireless-codec-compression

[7] RTINGS.com, fowaddaud, "JBL Live Pro 2 TWS True Wireless: Main Discussion", March 2023. [Online] https://www.rtings.com/discussions/B_2k11q_Aj7f3w6I/review-updates-jbl-live-pro-2-tws-true-wireless

[8] RTINGS.com, jBryant, "There is no way latency is only 17 ms.", May 2023. [Online] https://www.rtings.com/discussions/1hJYg4DgQm4nj30F/there-is-no-way-latency-is-only-17-ms