Bluetooth Connectivity Score and Tests

Headphones

Bluetooth is a wireless technology standard for exchanging data between mobile devices over short-wavelength ultra-high frequency (UHF) radio waves from 2.400 to 2.485 GHz. Headphones that support Bluetooth can connect to another Bluetooth device, usually a smartphone or tablet, and play media wirelessly. Bluetooth headsets with a microphone can take wireless calls and usually have some sort of control scheme, so you can play/pause music or accept/reject a call from your headphones, too.

All Bluetooth protocols must meet the standards of the Bluetooth Special Interest Group (Bluetooth SIG), an international standards organization that oversees the development of Bluetooth technology. As a result, Bluetooth has several advantages over unstandardized wireless radio frequency (RF) technologies, like lower power consumption, less signal interference, and greater device compatibility. However, Bluetooth devices require a pairing procedure that can be inconvenient, and they can only connect to a limited number of devices.

For our Bluetooth score, we first determine whether the headphones support Bluetooth and, if so, which version. We then look at whether they support multi-device pairing and quick pairing, which codecs they support, and their latency.

When It Matters

More and more smartphone manufacturers are eliminating the headphone jack from their products in favor of a single USB-C or Lightning connector. While you can always use an adapter to connect your favorite wired headphones, there are more reasons than ever to opt for Bluetooth headphones. Bluetooth headphones provide the wireless freedom you might expect with RF gaming or home theater headsets without bulky wireless stands or limited USB dongles.

Depending on your needs, there are a couple of features you might want to keep an eye out for. If you have the latest and greatest flagship device, you may want headphones that support the same Bluetooth version for better latency and wireless range performance. If you often switch between devices, such as your work PC and tablet, you'll want Bluetooth headphones that support multi-device pairing. If you listen to hi-res content, a codec that can stream that kind of audio with a high bit rate is crucial. Conversely, low-latency codecs are the way to go if you often stream video.

Our Tests

Our Bluetooth tests evaluate the wireless features provided by Bluetooth headphones as well as the performance of supported codecs. We first determine whether the headphones support Bluetooth. If the headphones aren't Bluetooth-enabled, they automatically score 0 in the Bluetooth category, and all values are set to N/A. If the headphones do have Bluetooth, we determine their Bluetooth version. We then verify whether they support multi-device pairing and quick pairing (Android and iOS).

However, the biggest component of this test is evaluating latency across multiple codecs. Codecs are software that encode and decode data for transmission between your device and your headphones. There are three main components to codecs:

- Bit rate (kbps): The amount of audio data transmitted per second. If a codec has a high bit rate, there will be less compression, resulting in better audio quality.

- Sampling rate (kHz): The number of samples per second taken of a waveform to create a discrete digital signal.

- Bit depth (bits): The number of possible amplitude values for each audio sample. It determines the dynamic range (the range between the quietest and loudest sounds) of your audio, so a higher bit depth can capture more detail.
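To show how these three parameters relate, here's a minimal Python sketch (the function names are illustrative) that computes the bit rate of uncompressed PCM audio from its sampling rate and bit depth, plus the theoretical dynamic range of a given bit depth, which grows by about 6 dB per bit. Comparing the uncompressed bit rate with a codec's bit rate gives a rough sense of how much the codec has to compress. It assumes two-channel (stereo) audio and an ideal quantizer, ignoring dither and real-world noise.

```python
import math

def pcm_bitrate_kbps(sampling_rate_hz: int, bit_depth: int, channels: int = 2) -> float:
    """Bit rate of uncompressed PCM audio in kbps (1 kbps = 1,000 bits per second)."""
    return sampling_rate_hz * bit_depth * channels / 1000

def dynamic_range_db(bit_depth: int) -> float:
    """Theoretical dynamic range of an ideal quantizer: about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bit_depth)

def compression_ratio(source_kbps: float, codec_kbps: float) -> float:
    """How many times smaller the encoded stream is than the uncompressed source."""
    return source_kbps / codec_kbps

cd_quality = pcm_bitrate_kbps(44_100, 16)  # 16-bit/44.1 kHz stereo
hi_res = pcm_bitrate_kbps(96_000, 24)      # 24-bit/96 kHz stereo
print(f"16-bit/44.1 kHz PCM: {cd_quality:.1f} kbps, {dynamic_range_db(16):.1f} dB")
print(f"24-bit/96 kHz PCM: {hi_res:.1f} kbps, {dynamic_range_db(24):.1f} dB")
print(f"Ratio to a 990 kbps stream: {compression_ratio(hi_res, 990):.1f}x")
```

For 16-bit/44.1 kHz stereo this gives 1411.2 kbps and roughly 96 dB of dynamic range; 24-bit/96 kHz stereo gives 4608 kbps and roughly 144 dB, so even a 990 kbps stream (LDAC's highest setting) is compressed by a factor of about 4.7.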

You'll learn about some of the most common codecs further below. Some are designed for low latency, ensuring your audio and visuals stay in sync if you're streaming video. Others prioritize sound quality at the expense of latency. Keep in mind that not every pair of headphones supports all available codecs. Additionally, the codec you're using must be supported by both your headphones and your audio device for it to work; otherwise, the headphones will default to the SBC codec.
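The fallback rule above can be sketched as a set intersection: a codec is usable only if both the source and the headphones support it, and SBC, which every Bluetooth stereo audio (A2DP) device must support, is the fallback. This is a simplified Python model; in practice the operating system's Bluetooth stack negotiates the codec, and the preference order and device codec lists below are hypothetical examples, not any specific device's behavior.

```python
# Hypothetical preference order, highest priority first. Real operating systems
# rank codecs in their own way and may let the user override the choice.
PREFERENCE = ["LDAC", "aptX Adaptive", "aptX HD", "aptX", "AAC", "SBC"]

def negotiate_codec(source_codecs: set[str], headphone_codecs: set[str]) -> str:
    """Return the most-preferred codec both devices support, falling back to SBC."""
    shared = source_codecs & headphone_codecs
    for codec in PREFERENCE:
        if codec in shared:
            return codec
    # SBC is mandatory for Bluetooth stereo audio (A2DP), so it is always available.
    return "SBC"

phone = {"SBC", "AAC"}               # e.g., an iPhone supports SBC and AAC
pc_with_aptx = {"SBC", "aptX"}       # hypothetical source
headphones = {"SBC", "AAC", "LDAC"}  # hypothetical headphones

print(negotiate_codec(phone, headphones))         # AAC
print(negotiate_codec(pc_with_aptx, headphones))  # SBC
```

Even though the PC and the headphones each support a codec better than SBC, they share none, so the connection falls back to SBC.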

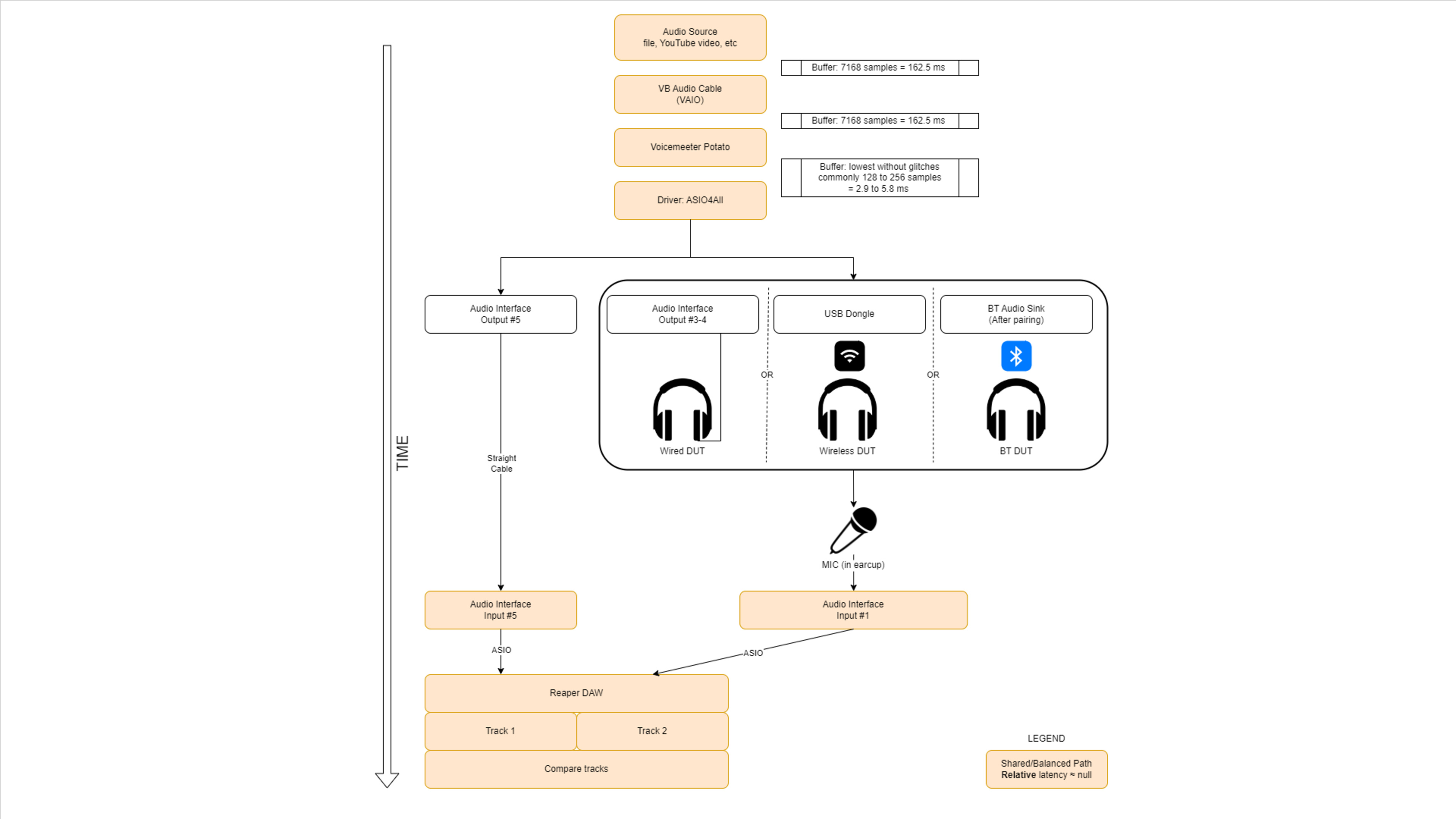

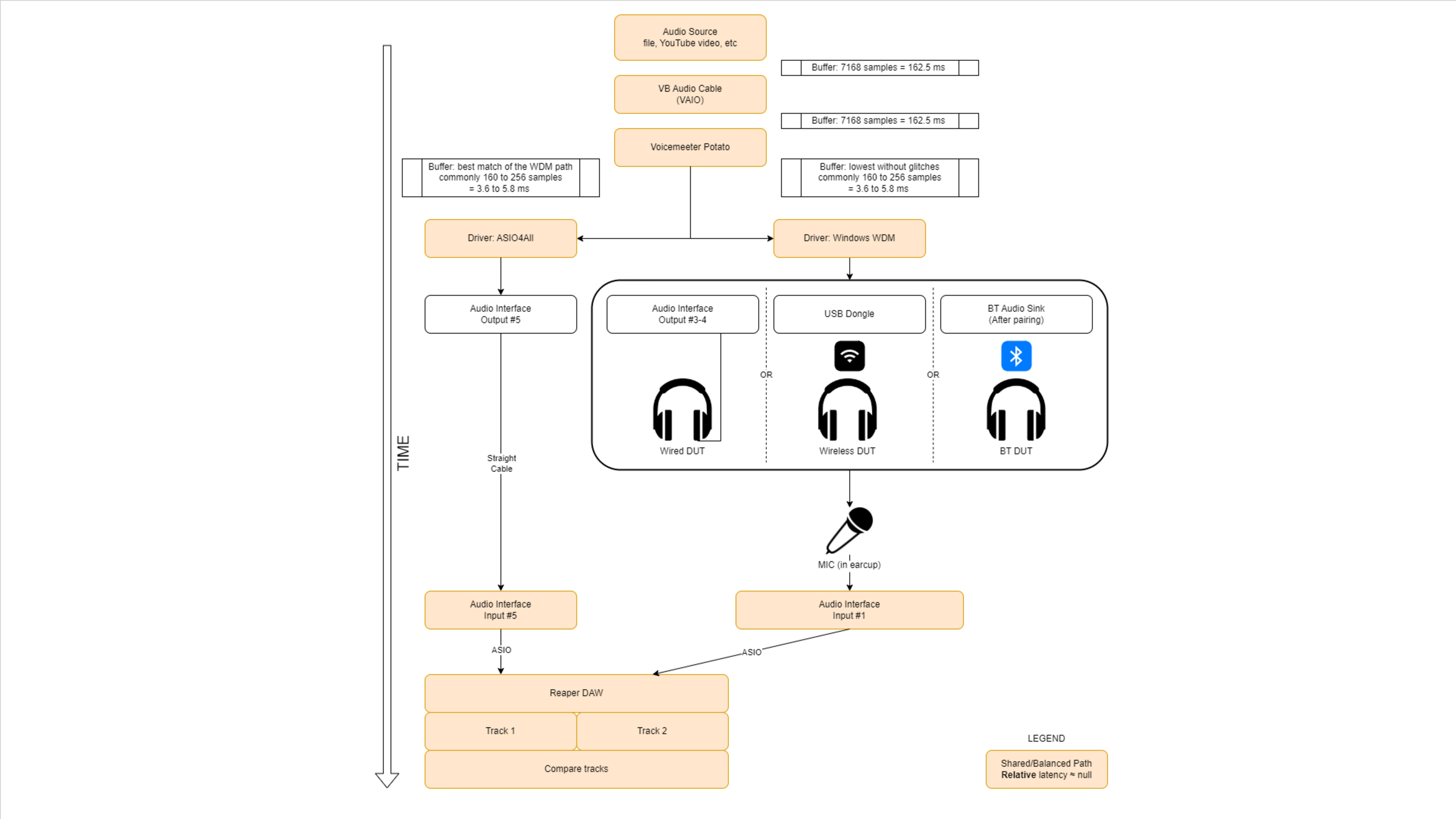

To measure the latency of codecs, we have a PC set up with the following:

- Voicemeeter: Software that routes audio signals and adds effects.

- ASIO4All: A low-latency audio driver.

- An ASIO interface: An audio interface that supports ASIO, a low-latency, high-quality soundcard driver protocol. We use the Focusrite Scarlett 18i20 (3rd gen), which lets us keep track of the buffer sizes at different points in the chain.

- Reaper (DAW): A digital audio workstation, which lets us record and mix audio.
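Keeping track of buffer sizes matters because every audio buffer adds delay: a buffer of N samples at a sampling rate of f samples per second holds N/f seconds of audio. Here's a minimal Python sketch of that arithmetic; the buffer sizes in the example are illustrative, not the settings we actually use, and summing buffers in series is a simplification of a real signal chain.

```python
def buffer_latency_ms(buffer_samples: int, sampling_rate_hz: int) -> float:
    """Delay added by one audio buffer, in milliseconds."""
    return 1000 * buffer_samples / sampling_rate_hz

def chain_latency_ms(buffer_sizes: list[int], sampling_rate_hz: int) -> float:
    """Simplified total: sum of the delays of several buffers in series."""
    return sum(buffer_latency_ms(n, sampling_rate_hz) for n in buffer_sizes)

# Illustrative buffer sizes only, not our actual settings.
print(round(buffer_latency_ms(256, 48_000), 2))        # 5.33
print(round(chain_latency_ms([256, 512], 48_000), 2))  # 16.0
```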

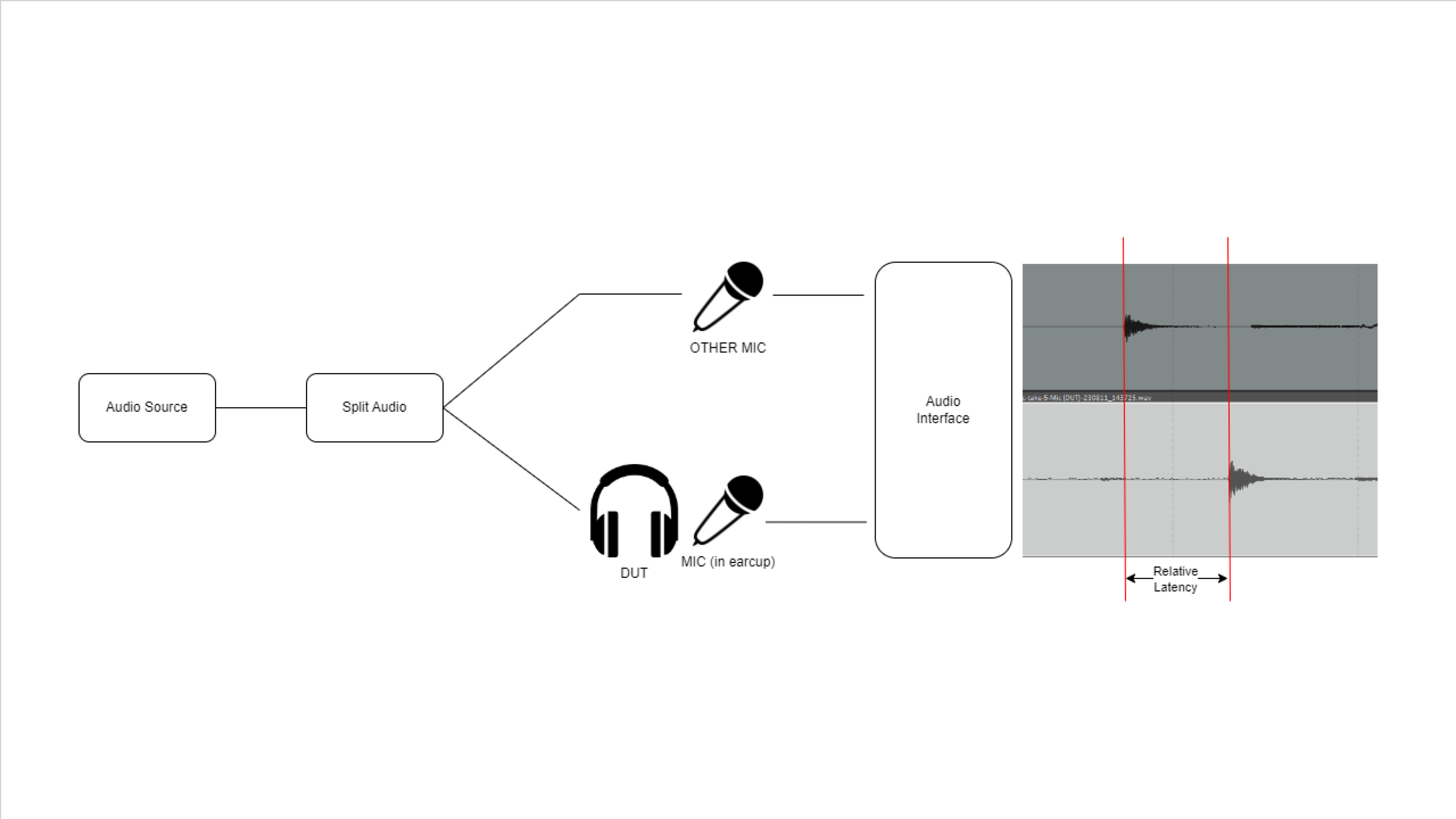

We use a Creative BT-W5 or FiiO BTA30 Pro Bluetooth transmitter, depending on which codecs the headphones support. We pair the headphones with our PC and add them to ASIO4All (in our preferred setup). We then place the headphones' left ear cup on our mic. We split our audio test file so that one copy goes to the headphones being tested (the device under test, or DUT). A second pair of headphones connects directly to our audio interface. These are the monitor headphones, which let us hear the second track that is sent to the audio interface. We record this split test track in Reaper as two round-trip latency tracks: one passes through the tested headphones, and the other bypasses them.

This allows us to compare the recorded waveforms and find the sample number for matching points. We can then find the difference, which can almost entirely be attributed to the additional delay introduced by the headphones tested.
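Finding matching points by eye works, but the same comparison can be automated. The sketch below uses cross-correlation, a common technique we're substituting here for illustration; it isn't necessarily how our own comparison is done. It slides the DUT track against the reference track, takes the offset where the two line up best as the delay in samples, and converts that to milliseconds. It assumes NumPy, mono tracks recorded at the same sampling rate, and that the DUT track lags the reference; the function names are hypothetical.

```python
import numpy as np

def estimate_delay_samples(reference: np.ndarray, delayed: np.ndarray) -> int:
    """Samples by which `delayed` lags `reference`, from the cross-correlation peak."""
    corr = np.correlate(delayed, reference, mode="full")
    # In "full" mode, index len(reference) - 1 corresponds to zero lag.
    return int(np.argmax(corr)) - (len(reference) - 1)

def samples_to_ms(samples: int, sampling_rate_hz: int) -> float:
    return 1000 * samples / sampling_rate_hz

# Synthetic check: 100 ms of noise, delayed by 9,600 samples (200 ms at 48 kHz).
fs = 48_000
rng = np.random.default_rng(0)
reference = rng.standard_normal(4_800)
dut_track = np.concatenate([np.zeros(9_600), reference])

lag = estimate_delay_samples(reference, dut_track)
print(lag, samples_to_ms(lag, fs))  # 9600 200.0
```

`np.correlate` computes the correlation directly, which gets slow for full-length recordings; an FFT-based version such as SciPy's `scipy.signal.correlate(..., method="fft")` is much faster, though its lag indexing should be checked before reusing the offset formula above.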

Our default latency setup.

Using this setup, we measure relative latency rather than total latency. Measuring true latency would require knowing every delay in the chain and offsetting each one accordingly. As a result, our results reflect how the headphones perform under Windows, in our test conditions. Some apps, like YouTube, even have their own compensation features that help reduce audio lag, so your experience may differ.

That said, this setup allows us to better isolate the impact of our system on our results. At the same time, our latency measurements become relative to our setup. They still provide a general indication of the latency performance you can expect with your own setup, though. Our results are also more consistent and comparable across reviews.

Bluetooth Version

Bluetooth versions vary between headphones, and each comes with a suite of features that can increase the data transmission rate, improve battery life, or add support for codecs that reduce latency. All Bluetooth versions are backward compatible, which means that a smartphone running Bluetooth 5.0 will still work with headphones that use Bluetooth 4.2, and vice versa.

To determine the Bluetooth version, we consult the headphones' documentation, whether that's the product manual, the specification sheet, or simply the box. If we can't confirm the Bluetooth version with the information provided with the headphones, we consult the vendor page of the retailer we purchased them from to see if there's additional information online. If we still can't determine the official Bluetooth version, we report 'Unspecified'.

Multi-Device Pairing

Multi-device pairing lets you connect your Bluetooth headphones to multiple devices with full, simultaneous functionality on each source. Bluetooth headphones with multi-device pairing can connect to a Bluetooth-enabled laptop and smartphone at the same time and play media as well as take calls/use the microphone on both devices simultaneously. Some headphones support partial multi-device pairing, which means they can play and control audio on one Bluetooth device and use the microphone to make phone calls on another.

Many gaming headphones also support multi-device pairing. However, they tend to connect to one device via Bluetooth and to a console via a wireless dongle. We indicate this kind of connection by stating 'Bluetooth + Console/Non-BT Wireless'.

To test multi-device pairing, we attempt to pair and connect the headphones to two separate Bluetooth devices (currently two Android phones). We then test both media playback and microphone control channels on each device to ensure all channels work simultaneously on both devices. For headphones with a wireless dongle, we'll additionally connect them to a console via their dongle and use Bluetooth to connect them to our Android phone. We repeat the same media playback and microphone control check on each device.
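The pass/fail logic of this check can be sketched as a simplified Python model, assuming each device's result is recorded as two booleans (media playback works, microphone works) while both devices are connected. The `ChannelCheck` name and the 'Yes'/'Partial'/'No' labels are illustrative, not our actual reporting format. Full multi-device pairing requires every channel to work on both devices, while partial support means audio works on one device and the microphone on the other.

```python
from dataclasses import dataclass

@dataclass
class ChannelCheck:
    """Hypothetical record of one device's results with both devices connected."""
    media_works: bool
    mic_works: bool

def classify_multi_device(a: ChannelCheck, b: ChannelCheck) -> str:
    # Full support: media and microphone both work on both devices.
    if a.media_works and a.mic_works and b.media_works and b.mic_works:
        return "Yes"
    # Partial support: audio on one device, microphone calls on the other.
    if (a.media_works and b.mic_works) or (b.media_works and a.mic_works):
        return "Partial"
    return "No"

print(classify_multi_device(ChannelCheck(True, True), ChannelCheck(True, True)))    # Yes
print(classify_multi_device(ChannelCheck(True, False), ChannelCheck(False, True)))  # Partial
print(classify_multi_device(ChannelCheck(True, True), ChannelCheck(False, False)))  # No
```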

Quick Pair (Android)

If you have an Android device like a Google Pixel or Samsung Galaxy S phone, you can benefit from quick pairing, which is designed to make pairing your headphones with your device easier. It also means that you don't need to open your Bluetooth settings and manually select the headphones. There are a few kinds of quick pairing, such as NFC (Near Field Communication) pairing, Google Fast Pair, and Microsoft Swift Pair. This test has only two outcomes: 'Yes' if the headphones support quick pairing on Android, or 'No' if they don't. The text tells you which method is supported.

To test for this, we check the manufacturer's documentation and confirm quick pairing implementations with our Android device.

Quick Pair (iOS)

Quick pair lets you easily pair your headphones with your iPhone or iOS device. Headphones with an H1/H2 or W1/W2 chip, like the Apple AirPods Pro (2nd generation) Truly Wireless, are designed to easily pair with your iOS device. Like Quick Pair (Android), there are only two outcomes for this test: 'Yes' or 'No'.

To test this, we check the manufacturer's documentation and confirm quick pairing with our iOS device.

Line Of Sight Range

This is the headphones' range when in direct line of sight with the Bluetooth source. This is important if you're listening to audio or watching a video and don't want to sit very close to your audio device, such as a PC or the TV in your living room.

We test for this by connecting the headphones to the same Bluetooth-enabled phone in a large open area, like a parking garage. We then measure the distance with a measuring wheel until the wireless connection is too weak to reliably transmit audio without drops or issues in quality. We take this measurement three times and average the results to obtain the line of sight range.
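The averaging step is simple arithmetic, but here's a minimal Python sketch for completeness. The function name and the distances are hypothetical example values, in whatever unit the measuring wheel reports.

```python
from statistics import mean

def line_of_sight_range(distances: list[float]) -> float:
    """Average of the three range measurements (same unit as the inputs)."""
    if len(distances) != 3:
        raise ValueError("expected exactly three measurements")
    return mean(distances)

# Hypothetical measurements, for illustration only.
print(line_of_sight_range([100.0, 110.0, 120.0]))  # 110.0
```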

PC Latency (SBC)

Base latency refers to the latency with sub-band coding (SBC), the default codec that most wireless headphones use when connecting via Bluetooth. Headphones use SBC if they don't support any additional codecs like aptX or AAC. They'll also default to SBC if you're trying to use a codec that isn't supported by both Bluetooth devices.

Typical SBC latency ranges from 150 to 250 ms.

PC Latency (aptX)

AptX is a proprietary codec by Qualcomm (previously CSR) that improves audio quality and bit rate efficiency. This means headphones with aptX sound a bit better and less compressed when playing audio wirelessly. They also have a bit less latency than SBC.

Typical aptX latency ranges from 50 to 150 ms.

PC Latency (aptX Adaptive (High Quality))

AptX Adaptive is a codec that automatically adjusts its bitrate between 279 and 420 kbps based on what you're playing. It's backward compatible with aptX and aptX HD in stereo headsets.

While you'll experience high audio quality via this codec, it also tends to have 200 to 300 ms of latency. This isn't really a problem if you're just streaming audio, though.

PC Latency (aptX Adaptive (Low Latency))

As previously mentioned, aptX Adaptive automatically adjusts its bitrate between 279 and 420 kbps based on what you're playing. When you're watching videos or doing something that requires less audio lag, the codec switches into low latency mode.

This codec tends to have 50 to 100 ms of latency. So while its latency isn't near zero like that of a wired analog connection, it's still low enough that you'll be able to enjoy your content without significant lip sync issues.
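One way to picture what these latency figures mean for lip sync is to convert them into video frames: at 30 fps, each frame lasts about 33 ms. The Python sketch below does this for the typical ranges quoted in the sections above; the function name is illustrative, and how many frames of offset you'll notice varies from person to person, so no threshold is implied.

```python
# Typical latency ranges (ms) as quoted in the sections above.
TYPICAL_RANGES_MS = {
    "SBC": (150, 250),
    "aptX": (50, 150),
    "aptX Adaptive (High Quality)": (200, 300),
    "aptX Adaptive (Low Latency)": (50, 100),
}

def latency_in_frames(latency_ms: float, fps: float) -> float:
    """How many video frames an audio delay spans at a given frame rate."""
    return latency_ms * fps / 1000

for codec, (low, high) in TYPICAL_RANGES_MS.items():
    print(f"{codec}: {latency_in_frames(low, 30):.1f} to "
          f"{latency_in_frames(high, 30):.1f} frames at 30 fps")
```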

PC Latency (LDAC)

LDAC is a proprietary codec created by Sony. It uses lossless and lossy compression for streaming high-resolution audio. While you'll find this most commonly in Sony headphones, there have been other adopters of this codec, like Anker, Audeze, and Shure. Usually, to play LDAC from your smartphone or other device, you'll need to check whether it can be enabled in your device's settings.

This codec has a variable bitrate with three settings: 330 kbps, 660 kbps, and 990 kbps (with an advertised maximum bit depth and sampling rate of 24-bit/96 kHz for high-quality audio). However, reaching the maximum bitrate depends on the stability of your connection, as the codec can adjust the bitrate based on the wireless connection. You're likely to start at 660 kbps rather than the highest setting, though.
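To illustrate what stepping between fixed settings looks like, here's a toy Python model that picks the highest of LDAC's three bitrates that the link can sustain with some safety margin. This is an assumption-laden sketch, not Sony's actual adaptation logic: the `available_kbps` throughput estimate and the 20% headroom are hypothetical parameters.

```python
# LDAC's three bitrate settings (kbps), as listed above.
LDAC_BITRATES_KBPS = (330, 660, 990)

def pick_ldac_bitrate(available_kbps: float, headroom: float = 1.2) -> int:
    """Toy model: the highest setting that fits the link with a safety margin.

    `available_kbps` (estimated link throughput) and `headroom` are hypothetical
    inputs; the real codec's adaptation logic is not modeled here.
    """
    fitting = [rate for rate in LDAC_BITRATES_KBPS if rate * headroom <= available_kbps]
    # If even the lowest setting doesn't fit, stay at the lowest one.
    return max(fitting, default=LDAC_BITRATES_KBPS[0])

for throughput in (1500, 1000, 300):
    print(throughput, "kbps link ->", pick_ldac_bitrate(throughput), "kbps LDAC")
```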

There are a couple of downsides to this codec. For instance, it's not supported on Apple devices. In addition, using LDAC can reduce your battery life. It also tends to have high latency, between 200 and 300 ms, but this won't really impact you if you're only streaming audio.

Recorded Latency

This track is the combined output of the reference audio track and the tested headphones' track. It allows you to hear the latency for yourself.

Recorded Latency Codec

This lets you know what codec we used for our recorded latency file. We select the codec that users are most likely to use.

AAC Support

AAC (Advanced Audio Coding) is the successor to MP3 and is the default codec for iOS devices, though other devices support it as well. Its latency is expected to be about as low as SBC's. However, we can't test AAC latency due to the complexity of testing it on our setup. You can read more about it in our R&D article.

We check for AAC support by pairing the headphones with a Mac computer and checking within the Console app.

What Isn't Included

- Evaluating each codec’s sound quality.

- AAC latency. This is a complicated issue, which you can read more about in our R&D article.

- Bluetooth LE (Low Energy) Audio. This is a standard for low-power audio transmission.

- LC3, LC3+, and proprietary codecs like Samsung's Seamless. Unfortunately, we don’t have the tools necessary to measure these codecs yet.

If you feel there's an item missing that should be included, please let us know in the discussions.

Conclusion

Bluetooth is a wireless standard that lets you connect your headphones to your mobile device without cables. Our Bluetooth score lets you know whether a pair of headphones supports Bluetooth and what kinds of Bluetooth features are offered. Each Bluetooth version has its own advantages with respect to latency and range, and some Bluetooth headphones can even connect to multiple devices or be quickly paired with your devices. If you want to listen to high-quality audio or keep latency down, you'll want to know which codecs the headphones support and their latency.

Comments

Bluetooth Connectivity Score and Tests: Headphones: Main Discussion

What do you think of our article? Let us know below.

Want to learn more? Check out our complete list of articles and tests on the R&D page.

- 21010

I just got this after I broke my Rotator Professional of 13 years. It feels like it glides more along the carpet compared to the old one. I don’t know if it’s a difference in the floorhead suction, or maybe the brush design? Any ideas?

- 21010

As someone who spent a lot of time researching a mattress two years ago, this is welcoming. Though I’ll be curious to see what objective tests you’ll develop for it. Hope you also get to the bottom of the materials used in each mattress, including type of foam, density, etc.

- 21010

I’ve read through the R&D article, and it doesn’t specifically mention pollen except in the graphic showing particle sizes. Is that something that is specifically tested? Or can it be assumed based on the smoke machine’s ability to simulate that size particle whether it does well removing pollen?

- 32120

Wish they would offer their high end models in 55". 65" is too large for my seating.

- 21010

The table says that the Bravia 7 replaces the X93L, but in the paragraph description it says the X90L. Does it replace both?

What made the local dimming on the Bravia 9 that much better than the 7? I thought they both used the new backlight master drive.

Interesting that the 55" Bravia 8 OLED is only $100 more than the Bravia 7.

- 21010

I think that only affected their 2023 models. And doesn’t it only kick in on dark HDR content? CNN being used in this test is SDR.

- 21010

I’ve seen a few between Reddit and AVS Forum. Yes, it’s rare. I’ve had an A90J since summer 2021 with no issue.

- 21010

A lot about professional calibration in this post. All TVs sold are professionally calibrated. You may not like the way the professionals employed by the TV manufacturers professionally calibrate the TVs. For most people the way the professional calibrators that work for LG, Sony, Samsung, etc. professionally calibrate their TVs is acceptable.

They’re calibrated to a standard, not each unit individually. Manufacturing tolerances with panels can make a difference. Not enough that the average person would see a difference 99% of the time, but in some cases you can such as the B9 discussed here.

- 21010

How prevalent is this issue? Thinking about getting this to pair with my Sony X950H since it’s on sale, but if there are issues with audio syncing I might pass for different one.

- 21010

I agree with this. The CX will start at $1500, but could see a small drop during the holidays. If you’re planning on getting a PS5 then, you could get that TV at the same time. Otherwise wait until next year, the only other TVs with HDMI 2.1 support right now are 55 inches and larger.

The CX actually starts at $1600 now.

- 21010

Can you provide an example of one of these TVs?

- 21010

Assuming you’re talking about color space, Auto is recommended (https://www.rtings.com/tv/reviews/samsung/q7fn-q7-q7f-qled-2018/settings).

Do you have color tone set to warm2?

- 21010

Waiting for it to be available in the States so I can go look at it. I’m still torn between this and the X950, mainly because it’s now a $600 price difference (they initially reported the CX retailing at $1500). But being an OLED and supporting HDMI 2.1 is sounding good.

- 21010

Probably the X900H. It retails at $2799.99 on Sony’s site (https://www.sony.com/electronics/televisions/xbr-x900h-series?showModelPrices=true) for the 85". Between that and the model numbers, it’s a safe bet.

- 21010

Oh, sorry for any confusion, that’s correct actually. Our post calibration scores are done via professional calibration. What I’m saying is that simply taking our recommended settings will get most TVs pretty close, and unless you really care about an accurate image, a professional calibration isn’t worth it for most people.

This is what I was getting at in https://www.rtings.com/tv/discussions/r2ZUwH7iUQ0Cjw7e/pre-calibration-score-more-severe. It sounds like even with the B9’s recommended settings, it still has poor out of box color accuracy, correct?

- 21010

Out of the box color accuracy was poor on your b9 unit. Other reviewers have reported poor out of the box accuracy with their b9 units. If you have to pay $300 to have the b9 calibrated is the b9 still $300 cheaper?

Agree with this. I suggested in the Test Methodology Discussion that the pre-calibration score should be more severe.

- 21010

The point is, I currently have an LG C8 OLED, and the TV is not that bright in HDR. On RTINGS it says the C8 is brighter than the CX, and there is a burn-in risk with pixels degrading over time. And the ABL is annoying in my opinion when you are in a very bright location in a video game. I don’t know what to choose between the CX and the Q90T! The CX isn’t really an upgrade over the C8, and it’s even less bright! My only problem right now is that the C8 doesn’t have HDMI 2.1; this is why I want to upgrade. But I’m sure I will most likely end up with the 4k flagship QLED this year!! Because 2000 nits of peak brightness is crazy, there’s no burn-in risk, and Samsung’s new local dimming algorithm this year makes blooming way less than on last year’s Q90R!! I guess I’m not yet sure what to go for. I will most likely wait a little bit, ask here and there, and pull the trigger later. I love my C8 OLED, but the ABL is ruining it for me, especially in very bright scenes in video games!

Yeah if it’s HDR brightness you’re after, then either the Q90 or the X900. The Q90 is on sale for $1700 ($100 off), and the X900 is $1000. Are you also looking for lowest possible input lag? The Q90 has lower lag in game mode, but likely at the cost of local dimming. You can turn local dimming on high again while in GM, but I don’t know how much that adds into input lag. This video compares the q80 and the x950, go to 9:51: https://youtu.be/2DaZE_VGytQ. Neither are the same models we’re discussing, but the algorithms are likely similar.

- 21010

Gaming only!!! PS4 Pro and PS5!!! 55 inch is what I want too. I want a TV with HDMI 2.1, too; the X950 doesn’t have it, and the X900H does, but it only has 32 dimming zones.

Right the X950H doesn’t have HDMI 2.1, but the review for it should give an idea of how local dimming will score with the X900H.

To me, the CX is the better value, despite HDR not getting as bright and the burn-in risk. There is another thread about the Q90 and game mode, since in the past local dimming has generally been greatly reduced to achieve low input lag. Apparently you can change local dimming in game mode, but I don’t know how much that increases input lag.

Tbh I would just go in a store that has them both on display and see what you like. Most Best Buys in the US do it by appointment right now.

- 32120

Sorry, but the settings are shown in a separate video. At about 9:40 he starts up a game, and you will see the settings. https://youtu.be/vkK0RAN_Yng Also, someone on Reddit sent me this link to a forum, and it has these pics. It’s post #516 https://www.avsforum.com/forum/166-lcd-flat-panel-displays/3125278-owner-s-thread-sony-x900h-no-price-talk.html#/topics/3138076?page=52

I’m not sure then. I can see that yeah, game mode goes on, but I can’t tell how it changed local dimming in that video or if he manually changed it to High beforehand.

Is that second link the right one? It goes to an owners thread for the X900H and no pics on 516.

- 32120

I have seen that video, but that is the Q80T. https://youtu.be/sMBdICrJx5k At 10:40 you can see the game section of the video. It looks like local dimming is working in this video and can be seen set to High when game mode is on. So I’m under the impression the Q80T and Q90T are different in game mode. I would want to know how well the dimming performs compared to game mode off, and whether setting it to High affects input lag.

Correct, it is the Q80, but the algorithm for game mode is the same as far as I know. Where do you see it set to High? It’s kind of hard to tell what effect it had in that video unless you turn game mode on and off to see the difference. But I’d imagine LD affects input lag if you override it, though someone at RTINGS would have to confirm.

I’m curious how dark the stone to the left of the door is around the 12:00 mark. That’s a pretty obvious darkness difference, so I wonder if that’s the CX raising black levels or the Q90T graying them out.

- 32120

It’s pretty significant, as seen here at 9:50: https://youtu.be/2DaZE_VGytQ. Blacks look more gray and you lose some HDR highlights.

- 21010

Read through this page: https://www.rtings.com/tv/reviews/by-size/size-to-distance-relationship

I would personally go with 55 from 8 feet away when half of the time you’re viewing 720 and 1080 content.

- 21010

Will you use it for other than gaming? What size are you looking to get?

I think the Q90T is a bit overpriced; both that and the CX are $1800, and the X900H will be $1000, all being the 55" models.

The q90t can get brighter HDR and won’t have burn-in risk compared to the CX, but obviously you lose out on perfect contrast and uniformity.

I really like Sony’s TVs and I’m curious how they score local dimming this year (should get a pretty good idea when the x950 review goes live). I expect it will have more blooming compared to Samsung, but fewer fluctuations in zones and better shadow detail. It will also be significantly cheaper.

- 21010

Why Medium over Warm2, as the B9 settings page here suggests?

- 21010

That’s very unfortunate. The 48" being the first of its size, I thought it would be the obvious choice if only one size were chosen. There were/are a lot of people, myself included, looking forward to the 48" review. It was/is highly anticipated for use as a gaming display for both PC and console. Perhaps you should reconsider your position in this matter; it is a completely different panel made in a different, new fabrication facility, after all.

It won’t be released until at least next month. abt had it on their website a few weeks ago, but it disappeared. When I asked about it, they said they don’t expect it until late summer. This TV was voted to be reviewed.

- 32120

It seems that with the V-Series, you may get one with either a VA or IPS panel. See CNET’s review (https://www.cnet.com/reviews/vizio-v505-g9-review/) that has a statement from Vizio. Their M-Series review does say “All of the sizes in the M-Series use VA panels, not the IPS panels found on some sizes in previous years”, but maybe at some point they did have to replace VA panels with IPS ones. As the person above said, try taking a closeup picture of the screen.

- 21010

Are you positive it’s the same model? The tag on the TV itself shows it’s an M437? Where did you buy it?

- 21010

Wait for the PS5 to release before purchasing a TV. Avoid the 49-inch version of the X95H altogether, because it does not have HDMI 2.1. As for the Samsung Q80T 49-inch, it has a single HDMI 2.1 port for gaming, although the panel is only 60Hz… not that great. In my opinion, I’d say wait for 2021, because Sony will release updated models that will take advantage of HDMI 2.1. The X95I could potentially feature a 49-inch variant with HDMI 2.1 as well as a 120Hz display, an excellent choice for gaming on a small TV. I say wait because these TVs cost quite a lot, and for your money, you’d want the best. Another great choice would be the 49-inch CX OLED from LG. It can potentially suffer from burn-in; however, they have marketed it as a gaming monitor, so I would assume they have implemented better burn-in prevention features, so burn-in won’t be an issue to worry about unless you play static games such as FIFA for 10 hours every day on maximum brightness, or leave the news on for 10 hours every day too…

I agree with this. The CX will start at $1500, but could see a small drop during the holidays. If you’re planning on getting a PS5 then, you could get that TV at the same time. Otherwise wait until next year, the only other TVs with HDMI 2.1 support right now are 55 inches and larger.

- 21010

I would add the Sony X950H to your list. It hasn’t been reviewed here yet, and despite running on Android TV, with some of the new features only available on the 55 inch and higher, Sony’s TVs have always been really good. My dad has the 850C from 2015, and the picture on it is gorgeous. It doesn’t support HDMI 2.1 though, so if you’re a gamer looking to take advantage of the Xbox Series X or PS5, I would look into one with 2.1 support.
