HDR content has been around for close to a decade now, and you can easily find a large selection of it on most streaming services and 4k Blu-rays, and a wide selection of video games support HDR as well. 4k and HDR are heavily tied to each other since there's barely any content in 4k that isn't also presented in HDR, and there's even less content in HDR that isn't in 4k. If you want to learn more about HDR's improvements over its predecessor, you can check out our article on HDR vs SDR.

After content is color-graded in HDR during the final steps of the post-production process, colorists have three primary HDR formats to choose from to deliver their final vision to consumers: HDR10, Dolby Vision, and HDR10+. Each of these formats offers tools to these content creators that can impact the image we see when using our TVs at home, but the main difference between the three formats comes down to metadata.

The way HDR content looks on your TV is dictated by the content itself and the capabilities of your display. For example, different movies in HDR have drastically different levels of peak brightness, and each movie has to communicate with your TV to tell it how bright it gets so the content is displayed as accurately as possible. This form of communication happens through the use of metadata. Metadata contains information such as the maximum and minimum luminance of the mastering monitor and the color space used in the content. All three HDR formats make use of metadata, but the way the content sends this information to your TV can come in two forms: static metadata and dynamic metadata.

HDR10: Static Metadata

HDR10 was the first widely supported HDR format and is still the most commonly used one today. A lot of HDR content is only delivered in HDR10. Even if certain content is available in HDR10+ or Dolby Vision, that content will typically also be available in HDR10.

With HDR10's static metadata, your display reads that metadata once and determines how it's supposed to display the content, and the resulting image can be drastically different depending on the capabilities of your TV. For high-end TVs like QD-OLEDs with low black levels and high peak brightness, this static metadata usually suffices since the capabilities of the display are close enough to the mastering monitor used by the colorist. However, things get more challenging on budget models like the Hisense A7N since the TV isn't bright enough to display most HDR content properly. When a TV can't do this, it relies on a process called tone mapping.

Tone Mapping

HDR content is typically mastered on 600-, 1000-, or 4000-nit mastering monitors. Even the best TVs on the market have to use HDR10 metadata to tone map the rare content mastered at 4000 nits, since no current models consistently get that bright in their most accurate picture mode. Essentially, tone mapping takes an image that can't properly be displayed on a TV and makes it fit within the display's parameters. Tone mapping affects how blacks, colors, and bright highlights look.

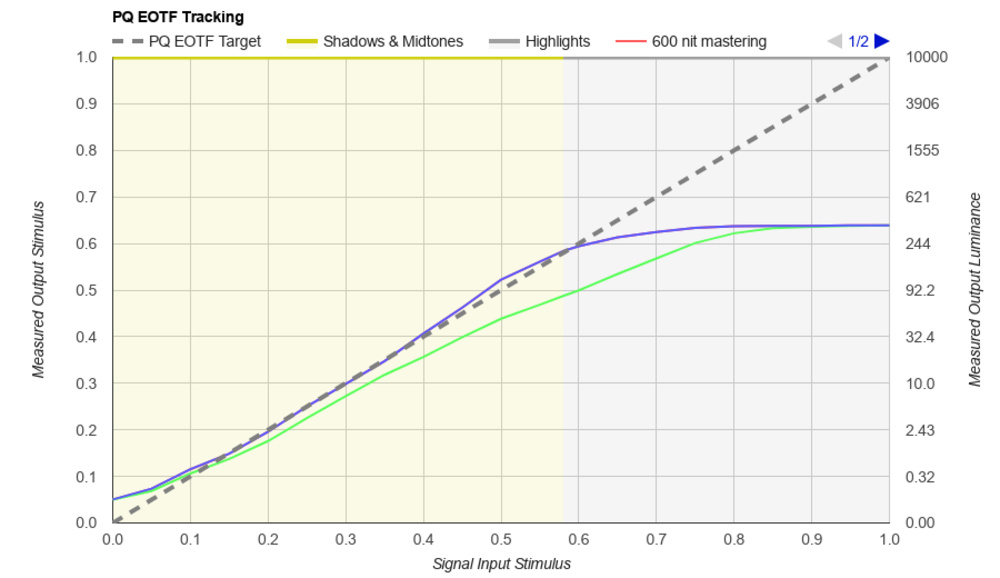

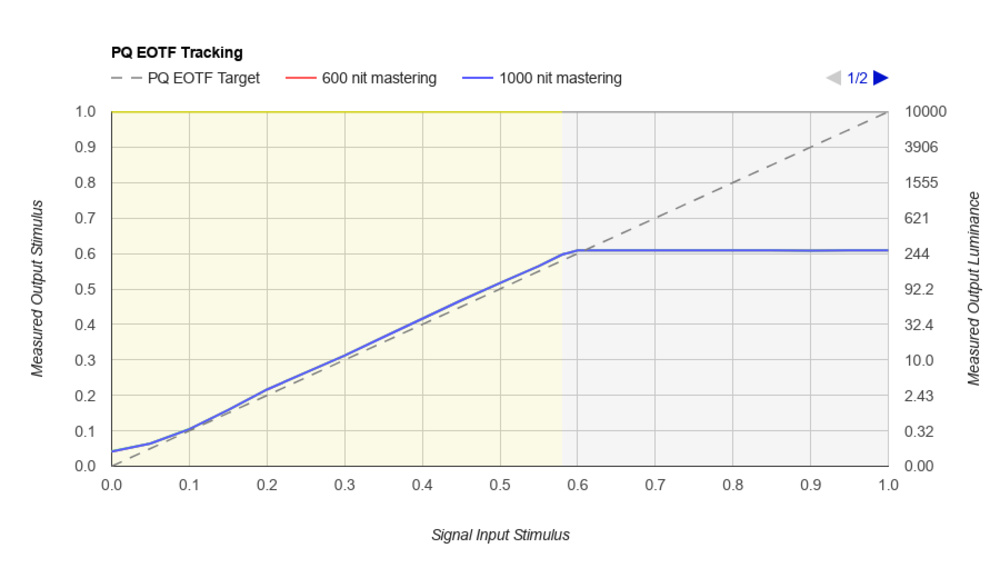

The main piece of metadata used to determine how a TV should tone map is the Maximum Mastering Display Luminance (MaxMDL). Above is a photo of the PQ EOTF graph for the Hisense A7N. The blue line shows how the TV handles content mastered at 1000 nits, and that roll-off near the TV's peak brightness shows tone mapping at play. By compressing the highlights, the viewer will still see some detail in the highlights since the TV is lowering the brightness on the upper end to retain detail. The green line represents content mastered at 4000 nits, and as you can see, the roll-off happens much sooner. Without this roll-off, highlights that are brighter than the TV's capabilities would all be shown at the TV's maximum brightness, which causes clipping and a loss of fine details. This can be seen below on the PQ EOTF graph for the Vizio V Series 2022.

By comparing how these two TVs tone map, it becomes clear that there's no standardized way this metadata is handled, and you can get wildly different results depending on the display.
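To make the difference between a roll-off and clipping more concrete, here is a minimal sketch in Python. It is not how any particular TV works: the `roll_off` function, its linear compression, and the `knee_fraction` value are all assumptions made for illustration, and real TVs use their own curves (often starting the roll-off earlier for content mastered at higher peaks).

```python
def hard_clip(nits, display_peak):
    """No tone mapping: anything brighter than the TV is shown at its peak."""
    return min(nits, display_peak)


def roll_off(nits, display_peak, content_peak, knee_fraction=0.75):
    """Compress highlights above a knee so they fit under display_peak.

    knee_fraction is a hypothetical tuning value, not taken from a real TV.
    """
    if content_peak <= display_peak:
        return min(nits, display_peak)  # content already fits, no mapping needed
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits  # shadows and midtones are left untouched
    # Linearly squeeze the range [knee, content_peak] into [knee, display_peak].
    t = (min(nits, content_peak) - knee) / (content_peak - knee)
    return knee + (display_peak - knee) * t


if __name__ == "__main__":
    # A hypothetical 400-nit TV showing content mastered at 1000 nits.
    for nits in (200, 650, 1000):
        print(nits, hard_clip(nits, 400), roll_off(nits, 400, 1000))
```

With clipping, a 650-nit highlight and a 1000-nit highlight both land on 400 nits, so the detail between them is lost. With the roll-off, they land on different values, so they stay distinguishable at the cost of being dimmer than intended.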

MaxFALL and MaxCLL

Since the metadata in HDR10 is static, this tone mapping curve is set once, which can lead to an undesirable image. Let's say we have a movie mastered at 4000 nits, but it only peaks at 4000 nits for a few frames, and the rest of the film is well under that. Since the metadata is static, the roll-off to retain detail in highlights will be active for the entire movie when it's really only needed for less than a second. This can make the image look too dim for the movie's entire runtime, giving you a duller image than the content creator intends. Furthermore, just because a film or TV show is mastered on a 4000-nit monitor, it doesn't actually mean that the colorist pushed the highlights that much, which can lead to a duller image if the TV only uses MaxMDL metadata to tone map.

Fortunately, some HDR10 content has metadata called Maximum Content Light Level (MaxCLL) and Maximum Frame Average Light Level (MaxFALL). If a TV utilizes this more precise metadata, you end up with an image closer to what the content creator intends. Unfortunately, not all HDR10 content includes this metadata, and some TVs completely ignore it. MaxCLL and MaxFALL metadata can help your TV determine if it needs to tone map and what tone mapping curve should be applied, but since it's still static metadata, it's not perfect.
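As a rough illustration of what these two values describe, the sketch below computes them for a tiny made-up "movie". It simplifies things by treating each pixel as a single light level in nits, and the `tone_map_target` helper is a hypothetical example of how a TV might prefer MaxCLL over MaxMDL when it's available, not a description of any real TV's logic.

```python
def max_cll(frames):
    """Brightest single pixel found anywhere in the content, in nits."""
    return max(max(frame) for frame in frames)


def max_fall(frames):
    """Highest frame-average light level across all frames, in nits."""
    return max(sum(frame) / len(frame) for frame in frames)


def tone_map_target(max_mdl, max_cll_value=None):
    """Pick the peak a TV could tone map against (hypothetical policy).

    A missing or zero MaxCLL is treated here as "not provided", in which
    case only the mastering display peak (MaxMDL) is available.
    """
    if max_cll_value:
        return min(max_cll_value, max_mdl)
    return max_mdl


if __name__ == "__main__":
    # Three tiny "frames" of three pixels each, in nits (made-up values).
    frames = [[100, 200, 300], [100, 100, 1000], [10, 20, 30]]
    print(max_cll(frames), max_fall(frames))
    # Mastered on a 4000-nit monitor, but the content only reaches 1000 nits.
    print(tone_map_target(4000, max_cll(frames)))
```

In this example, a TV that only looks at MaxMDL would tone map for 4000 nits, while one that reads MaxCLL would tone map for 1000 nits and keep more of the image at its intended brightness.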

Dolby Vision: Dynamic Metadata & Some Extras

Dolby Vision offers a few additional tools to colorists and content creators to ensure their vision is translated as well as possible to the at-home TV-watching experience. The main benefit of Dolby Vision content over its HDR10 counterpart is the use of dynamic metadata, referred to by Dolby as Reference Picture Unit (RPU). Unlike the static metadata of HDR10, which TVs use to determine how they should display content for the entire runtime of a movie or show, the dynamic metadata of Dolby Vision continuously sends information to your TV, as often as every frame. By doing so, the potential issues with static tone mapping outlined in the section above are largely avoided.

Using the previous example of a movie that only briefly peaks at 4000 nits but is mostly well under that, the dynamic metadata changes the way the TV tone maps based on how bright the content is at that very moment, so the roll-off near your TV's peak brightness is constantly changing. This can result in a brighter and punchier overall image for the rest of the movie since the unnecessary roll-off is no longer present. It can also lead to more detailed highlights in very bright scenes since the TV can be more aggressive with how it compresses the highlights during those brief moments.
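The difference can be sketched with a toy example in Python. This is only an illustration under stated assumptions: the `roll_off` curve and its `knee_fraction` are hypothetical, the metadata is reduced to a single peak value per scene, and actual Dolby Vision processing is proprietary and far more involved.

```python
def roll_off(nits, display_peak, content_peak, knee_fraction=0.75):
    """Hypothetical linear roll-off above a knee (not a real TV's curve)."""
    if content_peak <= display_peak:
        return min(nits, display_peak)  # content already fits
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    t = (min(nits, content_peak) - knee) / (content_peak - knee)
    return knee + (display_peak - knee) * t


def static_tone_map(scenes, display_peak, title_peak):
    """Static metadata: one peak value drives the curve for the whole title."""
    return [[roll_off(p, display_peak, title_peak) for p in scene]
            for scene in scenes]


def dynamic_tone_map(scenes, display_peak):
    """Dynamic metadata: each scene's own peak drives the curve."""
    return [[roll_off(p, display_peak, max(scene)) for p in scene]
            for scene in scenes]


if __name__ == "__main__":
    # Scene 1 is modest; scene 2 briefly peaks at 4000 nits (made-up values).
    scenes = [[50, 200, 350], [100, 1000, 4000]]
    print(static_tone_map(scenes, 400, 4000))
    print(dynamic_tone_map(scenes, 400))
```

On this hypothetical 400-nit TV, the static approach dims the 350-nit highlight in the first scene even though the scene never exceeds what the TV can show. The dynamic approach leaves that scene untouched and only compresses highlights in the scene that needs it.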

This dynamic metadata is automatically produced during the final steps of the coloring process, but the Dolby Vision tools allow colorists to manually go into the metadata to set certain parameters for how they want a TV to tone map based on its capabilities. By giving content creators more control over this metadata, the resulting image can have richer blacks, more vibrant colors, and more detailed highlights.

Profile 5 (Streaming) & Profile 7 (4k Blu-rays)

Dolby Vision doesn't stop there, though. Its content is broken down into different profiles and levels, and Dolby offers a wide variety of these to give content creators the tools needed for their projects. However, two main versions of Dolby Vision are used today. The most common version of Dolby Vision is the one found on streaming services. This version is referred to as Dolby Vision Profile 5, which utilizes Dolby’s proprietary IPT color space and RPU (dynamic metadata). As outlined above, this dynamic metadata is the most beneficial aspect of streaming Dolby Vision versus its HDR10 counterpart.

4k Blu-rays utilize Dolby Vision Profile 7, but there are two distinct types of layers that contain different data depending on the disc. The more basic version utilizes the Minimum Enhancement Layer (MEL), which works very similarly to Profile 5. The base layer contains the HDR10 version of the content, while the secondary layer contains the dynamic metadata to help with tone mapping.

The Full Enhancement Layer (FEL) also found on 4k Blu-rays offers a bit more. While it contains the same 10-bit HDR10 base layer, the secondary layer has the dynamic metadata as well as extra video data that produces a 12-bit video. Although modern TVs are limited to 10-bit, most can process a 12-bit video and downsample it to 10-bit. This can result in slightly smoother color gradients, even on a 10-bit display. The extra video data in the secondary layer can also help give you a somewhat cleaner-looking image than regular HDR10, since the additional data can increase bitrates and fidelity. Furthermore, it makes the format more future-proof, as 12-bit displays may be released sometime in the future.
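To put the 10-bit versus 12-bit difference into numbers, here is a small Python sketch. The helper names are made up for this example, signal values are treated as normalized from 0 to 1, and the simple rounding in `to_10bit` is an assumption; real TVs may use more sophisticated processing such as dithering.

```python
def code_values(bits):
    """Number of distinct levels available per color channel."""
    return 2 ** bits


def to_10bit(code12):
    """Downsample one 12-bit code to 10-bit with rounding (assumed method)."""
    return min((code12 + 2) >> 2, 1023)


def distinct_steps(lo, hi, bits, samples=1000):
    """Count the distinct codes a smooth ramp from lo to hi lands on."""
    top = 2 ** bits - 1
    return len({int((lo + (hi - lo) * i / (samples - 1)) * top + 0.5)
                for i in range(samples)})


if __name__ == "__main__":
    print(code_values(10), code_values(12))
    # A subtle gradient covering 1% of the signal range, like a dim sky.
    print(distinct_steps(0.50, 0.51, 10), distinct_steps(0.50, 0.51, 12))
    print(to_10bit(2048))
```

A 12-bit signal has four times as many levels per channel, so a subtle gradient is described with finer steps in the source before the TV converts it down to what its panel can show.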

HDR10+: Dynamic Metadata

HDR10+ isn't as common as Dolby Vision, but it's starting to catch on a lot more, with several streaming services supporting it. HDR10+ works similarly to Dolby Vision and utilizes dynamic metadata, so your TV can tone map more precisely. Like Dolby Vision, this dynamic metadata is automatically generated after a colorist finalizes their HDR color grade. However, you can't manually adjust the metadata, so it doesn't give content creators the same level of control as Dolby Vision, which can affect how HDR10+ looks versus Dolby Vision.

As mentioned in the previous section, Dolby Vision offers numerous profiles and levels depending on the needs of the colorist, but HDR10+ is a little more straightforward and is limited to 10-bit. You won't find different versions of HDR10+ like you do with Dolby Vision. Still, HDR10+ is a big improvement over regular HDR10 since the dynamic metadata embedded in HDR10+ and Dolby Vision content is what makes the biggest visual difference.

Conclusion

Although there are clear distinctions between how HDR10, HDR10+, and Dolby Vision function, the visible difference between the three formats heavily depends on the content and your display's capabilities. It's easy to say that Dolby Vision is the clear winner here since it offers colorists more tools that can lead to a better image when watching content at home, but not all HDR content is created equal. Oftentimes, the colors and highlights in HDR content aren't pushed very far, so there's barely any visible difference between the three formats when the same content is viewed on the same TV.

HDR10+ and Dolby Vision offer very similar advantages when streaming your favorite shows and movies since the dynamic metadata of those formats is the main component that sets them apart from regular HDR10. The impact of this dynamic metadata is greater on low-end displays since budget models don't come close to matching the capabilities of a mastering monitor, so the TV has to work harder to fit the content you're watching within its capabilities. With higher-end TVs that have capabilities similar to those of a mastering monitor, the dynamic metadata doesn't make nearly as big a difference. However, with content that pushes HDR to its fullest potential, you can start to see some of these visual differences on any television, since no TV perfectly displays all HDR content.