One of the biggest advances in TV technology in recent years is the development of High Dynamic Range (HDR) video formats. HDR enhances the picture quality of movies and shows by displaying a wider range of colors with brighter highlights than Standard Dynamic Range (SDR) content. However, a TV that accepts an HDR signal doesn't automatically make that content look good, as the TV still needs to display the image properly.

In this article, we'll look at a few aspects that make HDR content different from SDR.

What Is HDR?

HDR (High Dynamic Range), as the name suggests, introduces a wider range of colors and brightness levels compared to SDR (Standard Dynamic Range) signals. HDR signals carry metadata, which is a list of instructions that tells your TV how to display the content properly. The source tells the TV which exact color to display at which exact brightness level, whereas an SDR signal only describes colors and brightness relative to the range the TV can produce. As an analogy, an SDR car would be told to apply "full throttle" or "50% throttle," while an HDR car would be told to "go to 120 mph" or "go to 40 mph." Some vehicles would handle that task worse than others, and most might not even reach the target. In the case of TVs, SDR is a set power level, while HDR is a set goal.
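The throttle analogy can be sketched in a few lines of code. This is only an illustration under simplifying assumptions: SDR is modeled as a pure 2.4 gamma scaled to the TV's peak, HDR uses the PQ transfer function from SMPTE ST 2084 (the curve HDR10 and Dolby Vision are built on), and a TV that can't reach the target simply clips it, whereas real TVs use more sophisticated tone mapping. The function names and the example peak values are hypothetical.

```python
# Simplified sketch: relative SDR signal vs. absolute HDR (PQ) signal.
# PQ constants are from SMPTE ST 2084. The pure 2.4 gamma for SDR and the
# hard clip at the TV's peak are simplifying assumptions.

M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def sdr_to_nits(signal: float, tv_peak_nits: float) -> float:
    """SDR ("50% throttle"): the signal is a fraction of what the TV can do."""
    return tv_peak_nits * signal ** 2.4


def pq_to_nits(signal: float) -> float:
    """HDR ("go to 120 mph"): the signal encodes an absolute luminance target."""
    p = signal ** (1 / M2)
    return 10000 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def hdr_displayed_nits(signal: float, tv_peak_nits: float) -> float:
    """A TV can't exceed its own peak, so higher targets are clipped here."""
    return min(pq_to_nits(signal), tv_peak_nits)


if __name__ == "__main__":
    for tv_peak in (300.0, 1000.0):  # hypothetical dim and bright TVs
        print(
            f"TV peak {tv_peak:.0f} nits: "
            f"SDR signal 0.5 -> {sdr_to_nits(0.5, tv_peak):.0f} nits, "
            f"HDR signal 0.75 -> {hdr_displayed_nits(0.75, tv_peak):.0f} nits"
        )
```

The same SDR signal produces a different brightness on each TV, while the HDR signal asks for the same brightness on both, and the dimmer TV falls short of it.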

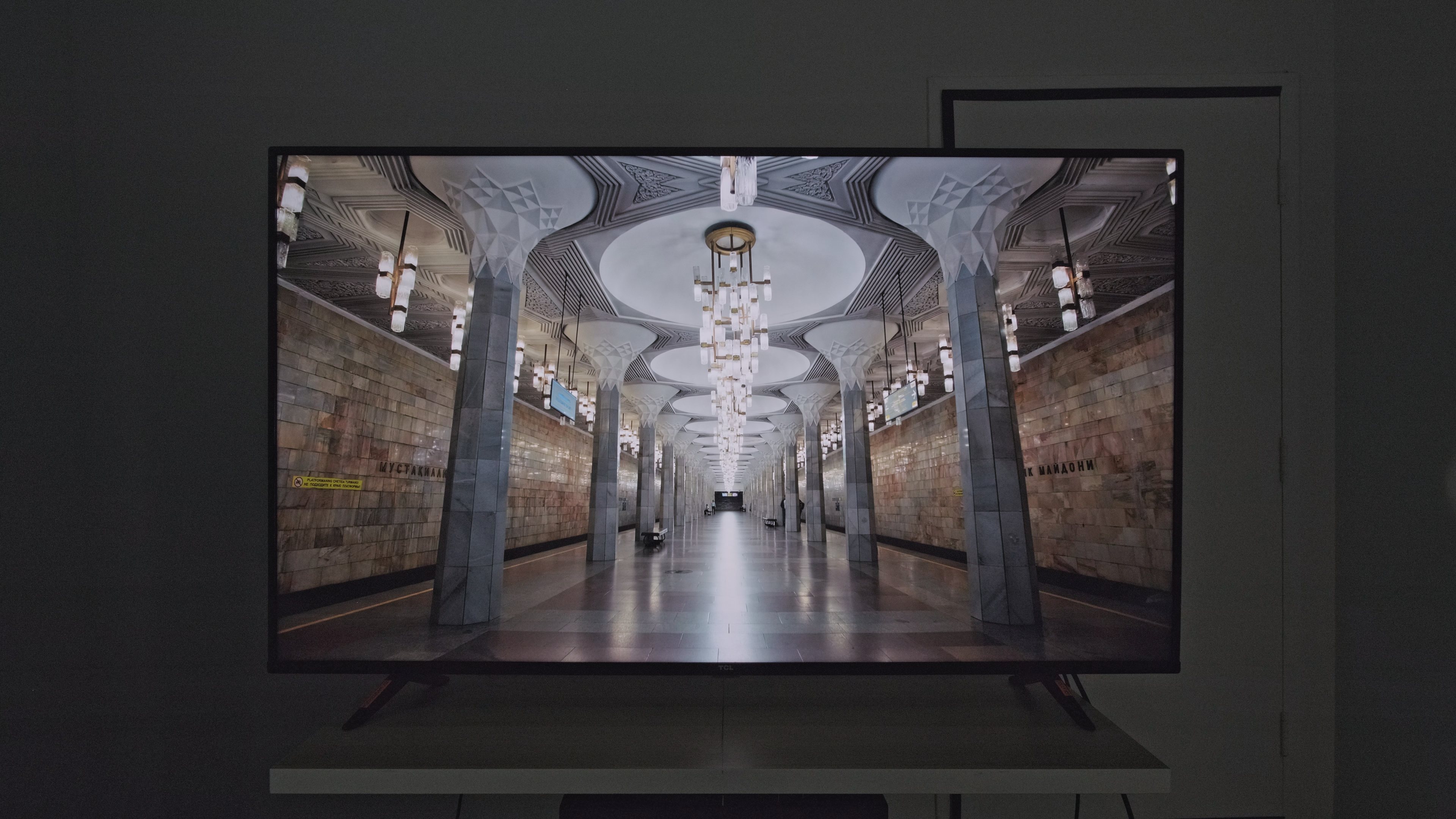

You can see an example above of what an HDR image looks like compared to an SDR image on the same TV. The HDR image appears more lifelike, and you can see more details in darker areas, like in the shadows of the cars. Having a TV with HDR support doesn't necessarily mean HDR content will look good, as you still need a TV that can display the wide range of colors and brightness required for good HDR performance.

Dynamic vs. Static Metadata

HDR signals come in different formats, and they use either static or dynamic metadata. Dynamic metadata changes on a scene-by-scene basis, while static metadata stays the same for the entire movie or show you're watching. This gives dynamic metadata a clear advantage: the content can tell the TV to target a lower peak brightness for a darker scene and a higher one for a brighter scene to make highlights pop. With static metadata, the TV gets a single set of brightness instructions for the whole title, meaning some scenes can be over-brightened or not dark enough.
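To make the difference concrete, here's a minimal sketch of how a TV might use the two kinds of metadata. It assumes a hypothetical 600-nit TV, two made-up scenes, and a deliberately crude tone mapping rule (scale everything down linearly when the source peak exceeds the TV's peak). Real TVs use proprietary roll-off curves, so treat the numbers as illustrative only.

```python
# Sketch: static vs. dynamic metadata with a crude linear tone mapper.
# The display peak, scene values, and mapping rule are all assumptions.

DISPLAY_PEAK_NITS = 600.0

SCENES = [
    {"name": "night street", "peak_nits": 300.0},
    {"name": "sunlit beach", "peak_nits": 4000.0},
]

# Static metadata: one peak value describes the whole title.
STATIC_PEAK_NITS = max(scene["peak_nits"] for scene in SCENES)


def tone_map(pixel_nits: float, source_peak_nits: float) -> float:
    """Scale a pixel down only when the source peak exceeds the TV's peak."""
    if source_peak_nits <= DISPLAY_PEAK_NITS:
        return pixel_nits
    return pixel_nits * DISPLAY_PEAK_NITS / source_peak_nits


def displayed_nits(pixel_nits: float, scene: dict, dynamic: bool) -> float:
    """Pick the peak from per-scene (dynamic) or whole-title (static) metadata."""
    source_peak = scene["peak_nits"] if dynamic else STATIC_PEAK_NITS
    return tone_map(pixel_nits, source_peak)


if __name__ == "__main__":
    night = SCENES[0]
    print("200-nit streetlight, static: ", displayed_nits(200.0, night, dynamic=False))
    print("200-nit streetlight, dynamic:", displayed_nits(200.0, night, dynamic=True))
```

With static metadata, the dark scene is compressed as if it contained 4,000-nit highlights, so the streetlight ends up far dimmer than intended. With dynamic metadata, the TV knows the scene tops out at 300 nits and can show it untouched.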

Whether the metadata is static or dynamic, HDR content gets brighter than SDR content, which is one of the main advantages of watching in HDR. Both HDR and SDR are mastered at a certain peak brightness, but HDR is mastered at a minimum of 400 nits, while SDR is mastered at 100 nits. Because every TV reaches 100 nits without issue, only brighter TVs can take full advantage of the increased peak brightness in HDR.

The two most common dynamic metadata formats are Dolby Vision and HDR10+, while HDR10 is a static format that forms the basis of HDR signals. Despite the similar names, HDR10 and HDR10+ are different formats. Some TVs support both dynamic formats, while others support one but not the other. Which format your TV supports is usually an afterthought, but it's important to consider depending on the content you watch. For example, if you constantly stream content from Netflix in Dolby Vision and get a TV that only supports HDR10+, you won't be watching that content to its full capabilities. The format itself doesn't affect the overall picture quality, but a TV that doesn't support a given format limits which HDR content you can watch in it.
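The format matching described above can be sketched as a simple lookup. This assumes that a title offered in a dynamic format also carries an HDR10 version to fall back on, which is commonly the case but not guaranteed, and the preference order is an arbitrary choice for illustration. The function and variable names are hypothetical.

```python
# Sketch: which HDR format ends up being used for a given title and TV.
# The preference order and the HDR10 fallback are assumptions.

PREFERENCE = ["Dolby Vision", "HDR10+", "HDR10"]


def pick_format(content_formats: set, tv_formats: set) -> str:
    """Return the first preferred format both sides support, else SDR."""
    for fmt in PREFERENCE:
        if fmt in content_formats and fmt in tv_formats:
            return fmt
    return "SDR"


if __name__ == "__main__":
    title = {"Dolby Vision", "HDR10"}   # hypothetical streaming title
    tv = {"HDR10+", "HDR10"}            # hypothetical TV without Dolby Vision
    print(pick_format(title, tv))       # falls back to the static format
```

In this example, the TV still shows the title in HDR, but only with HDR10's static metadata rather than the Dolby Vision version.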

When comparing HDR and SDR, it's important to remember that HDR content is sent with a specific set of instructions for how the TV should display it, while SDR content leaves more of the image up to the TV's processing. This is why not all TVs can display HDR content the way it should look.


Below, we'll discuss the three most important aspects of HDR content: the peak brightness, the color gamut, and the gradient handling.

HDR Peak Brightness

Peak brightness is probably one of the most important aspects of HDR. This is where high-end TVs have the biggest advantage, as HDR content makes use of their higher brightness capabilities to show lifelike highlights. If a TV has limited HDR peak brightness, it can't properly display all the highlights the content is supposed to show.

Having a high contrast ratio and a good local dimming feature is also important for delivering a good HDR experience because the TV can show bright highlights next to dark areas without losing details. However, contrast is independent of HDR, and you can still have deep blacks with SDR content.

Above, you can see what HDR looks like on a high-end TV like the Samsung QN90A QLED versus an entry-level TV like the TCL 4 Series/S446 2021 QLED. The Samsung has incredible contrast and high peak brightness, so it really makes the image pop, and you get a sense of how bright the hallway is. However, the TCL has low HDR peak brightness and low contrast, so it can't properly distinguish the bright and dark areas of the screen, and it doesn't even look like the lights are turned on. HDR is all about delivering a more impactful and vivid image, and the QN90A does exactly that.


Color Gamut & Volume

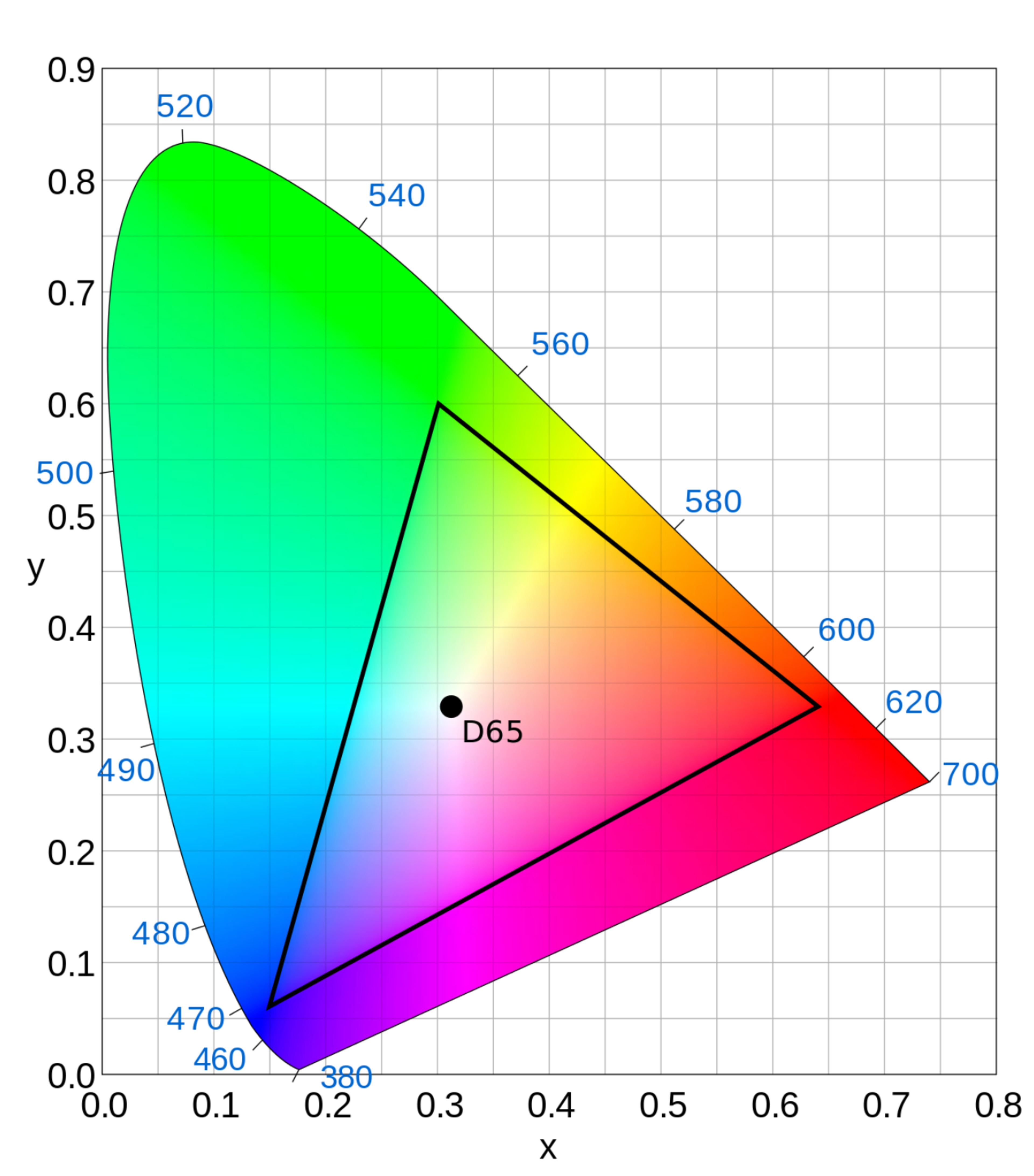

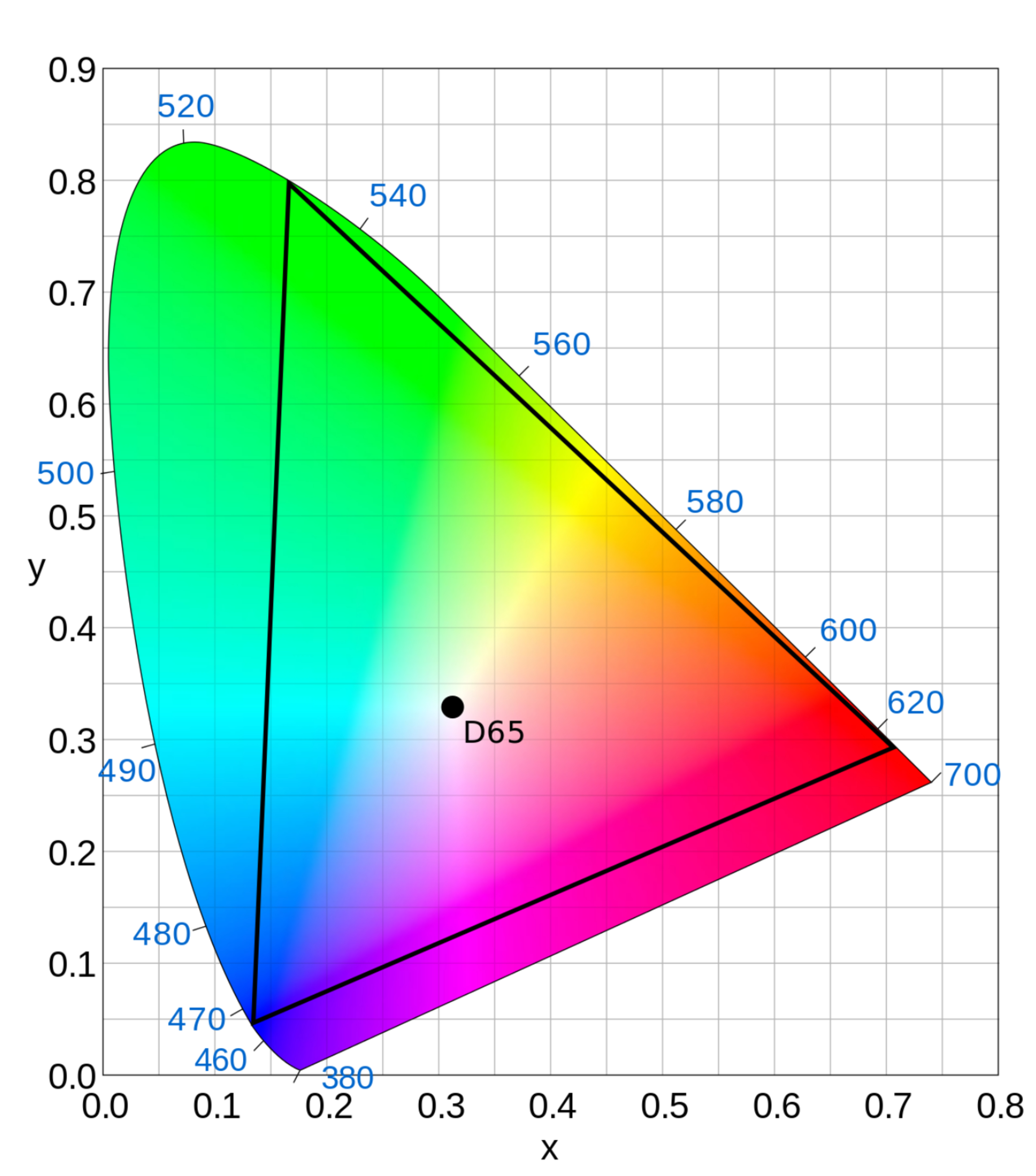

A TV's color gamut defines the range of colors it displays. There are two common color spaces used for HDR content: the DCI-P3 color space that's used in most HDR content and the Rec. 2020 color space that's slowly being included with more content. The difference between the two is simply the range of colors each one covers, as Rec. 2020 is wider. Both cover a wider range of colors than the Rec. 709 color space used in SDR. A TV needs a wide color gamut to show HDR content as intended, as colors that fall outside of what the TV can display end up looking less saturated than they should.

The color volume is the range of colors a TV displays at different luminance levels. This is where peak brightness and contrast come in, as a higher peak brightness helps the TV show bright shades, while a higher contrast helps it display darker colors.

Above, you can see two graphs of the Rec. 709 color space used in SDR content and the Rec. 2020 color space used in HDR. Rec. 2020 covers a lot more colors, which helps make images appear more lifelike. Most modern TVs don't have any problems displaying all the colors necessary for SDR, but coverage of the wider color spaces can be a struggle for some TVs.
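For a rough sense of how much wider these color spaces are, you can compare the areas of their triangles on the CIE 1931 xy chromaticity diagram. The sketch below uses the published red, green, and blue primaries for each standard. Treat the area ratio as a loose proxy only: the xy diagram isn't perceptually uniform, and this isn't how coverage is measured on an actual TV.

```python
# Sketch: compare color space sizes by triangle area in CIE 1931 xy.
# Primaries are the published (x, y) coordinates for each standard;
# using xy area as a measure of "size" is a simplification.

PRIMARIES = {
    "Rec. 709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points) -> float:
    """Area of a triangle from its three vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def relative_size(name: str, reference: str = "Rec. 2020") -> float:
    """Triangle area of one color space as a fraction of the reference."""
    return triangle_area(PRIMARIES[name]) / triangle_area(PRIMARIES[reference])


if __name__ == "__main__":
    for name in PRIMARIES:
        print(f"{name}: {relative_size(name):.1%} of the Rec. 2020 triangle")
```

By this measure, the SDR triangle is only about half the size of Rec. 2020, with DCI-P3 sitting in between.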


Gradient

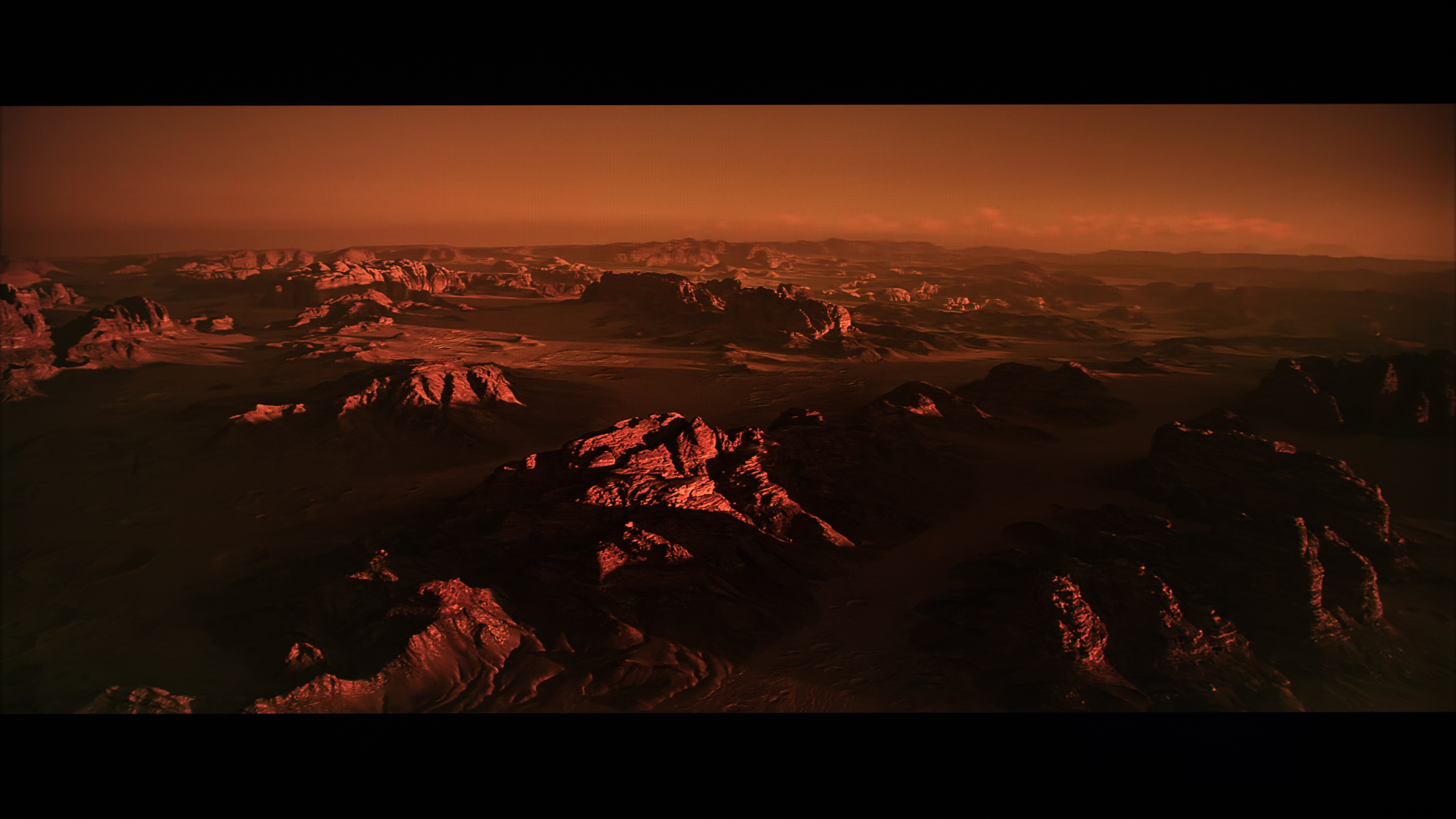

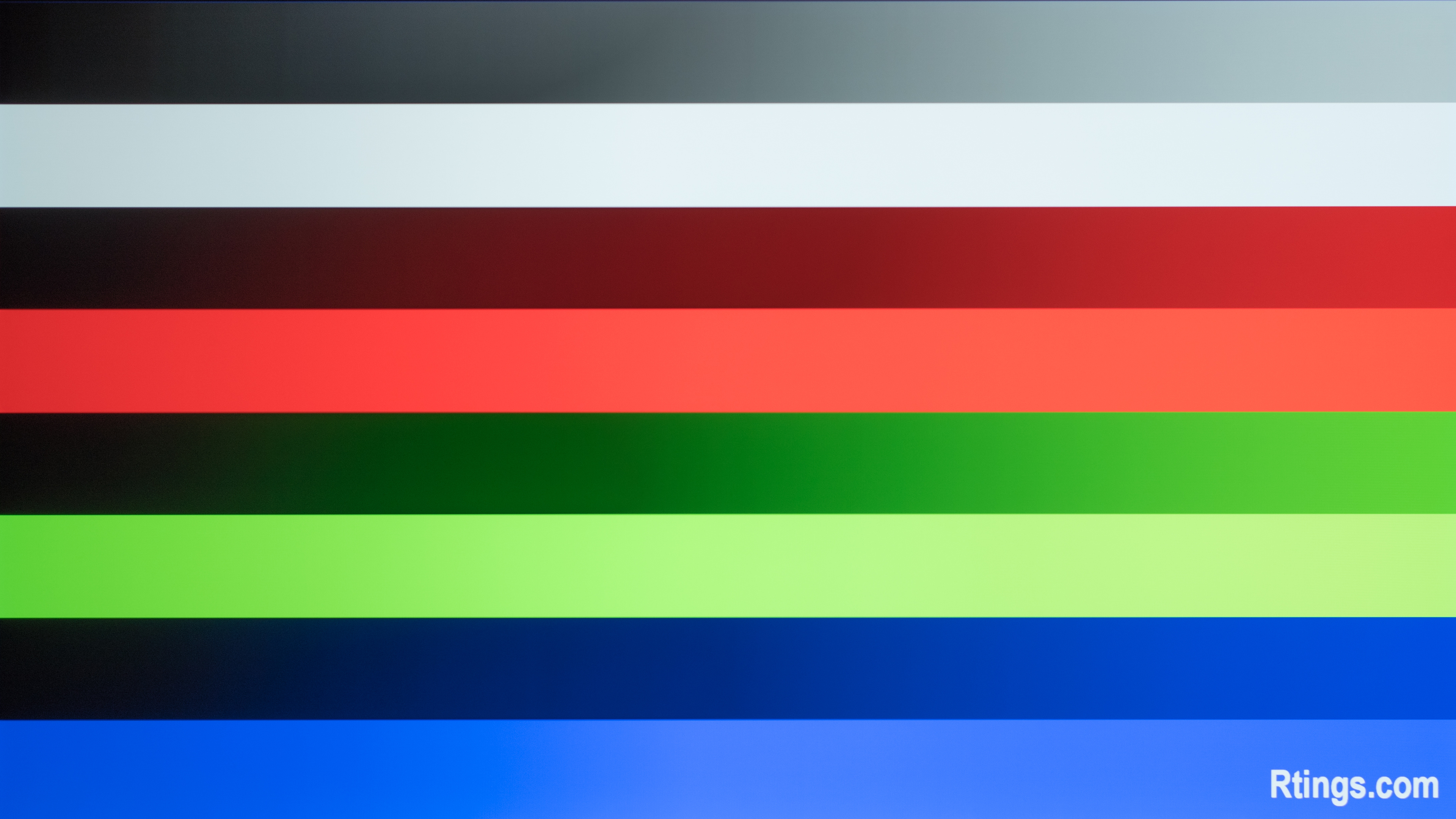

Gradient handling is a bit more technical than the peak brightness and color gamut. It defines how smoothly a TV displays transitions between similar shades of a color, which is important for scenes with a sky or sunset. If your TV has good gradient handling, the sky transitions smoothly between the different colors, but if it has bad gradient handling, you'll see banding that can be distracting.

Gradient handling is important for watching HDR content because HDR requires 10-bit color depth, and while most modern TVs can display a 10-bit signal, not all of them do it well. Color depth is the amount of information used to describe a color, so a higher color depth allows for finer steps between shades: 8-bit color has 256 levels per color channel, while 10-bit color has 1,024. It's also why HDR content uses 10-bit color, while SDR has 8-bit color depth. Keep in mind that not all TVs that accept 10-bit signals have a 10-bit panel, as some use 8-bit panels with dithering to help display a 10-bit signal, but this doesn't affect the overall picture quality much.

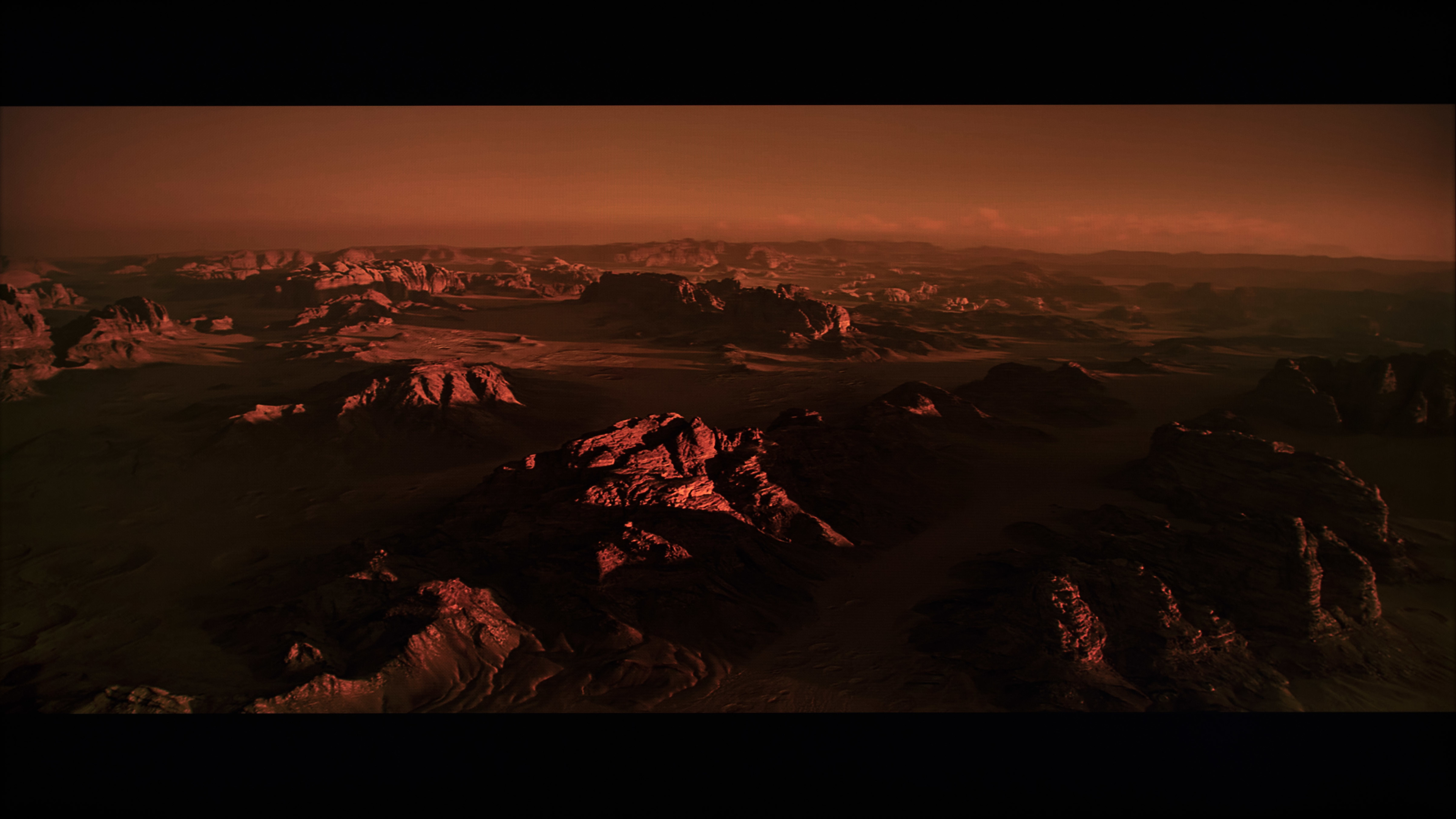

If you look at the images above, you can see how there's much more banding in the orange sky on an 8-bit image versus a 10-bit image on the same TV. It's because 8-bit color depth has less information, meaning it can't properly display those minor color changes. Most modern TVs have good enough gradient handling, but there are a few where the banding is obvious. Below, you can see two examples of what good and bad gradient handling look like on our gradient test pattern. There's clear banding on the right, especially in darker shades.
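The effect of bit depth on banding comes down to simple arithmetic, sketched below. The code counts how many distinct code values are available to draw a subtle gradient, such as a patch of sky that only spans a small part of the brightness range. The 40% to 45% range and the 1,920-pixel width are arbitrary values picked for illustration, and the sketch ignores dithering and any processing a TV applies.

```python
# Sketch: how many distinct steps a subtle gradient gets at 8-bit vs. 10-bit.
# The gradient range and width are arbitrary example values.


def levels(bits: int) -> int:
    """Number of code values per color channel at a given bit depth."""
    return 2 ** bits


def quantize(value: float, bits: int) -> int:
    """Map a 0.0-1.0 value to the nearest integer code at this bit depth."""
    return round(value * (levels(bits) - 1))


def distinct_steps(start: float, end: float, bits: int, width: int = 1920) -> int:
    """Count the distinct codes used by a horizontal ramp from start to end."""
    codes = {
        quantize(start + (end - start) * i / (width - 1), bits)
        for i in range(width)
    }
    return len(codes)


if __name__ == "__main__":
    for bits in (8, 10):
        steps = distinct_steps(0.40, 0.45, bits)
        print(
            f"{bits}-bit: {levels(bits)} levels per channel, "
            f"{levels(bits) ** 3:,} colors, {steps} steps in the gradient"
        )
```

With fewer steps to cover the same stretch of screen, each band in the 8-bit version is wider, and that's what makes the banding easier to see.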


Extra Information

HDR Gaming

Although HDR was primarily designed for movies, it has made its way into video games. Games aren't mastered the same way as movies for the highest brightness possible, so you won't get quite the same HDR picture quality as in movies, but HDR games still deliver a better viewing experience than SDR games. Modern TVs are designed to have low input lag in HDR Game Mode, so games feel as responsive as they do in SDR. There are still some limitations with HDR gaming, though, as some TVs can't display 4k @ 120Hz signals in Dolby Vision from the Xbox Series X, so you're limited to 60 fps in that case.

How To Know If You're Watching Content In SDR Or HDR

So, after all this, how do you know if you're watching content in HDR or SDR? The simple answer is to know which type of content you're watching and look for any symbols that pop up. If you're streaming content from Netflix, you'll see a symbol that tells you which HDR format it's in, like HDR10 or Dolby Vision. Another way is to look at the TV's settings menu and see if there's an HDR symbol in the picture settings.

If you see that the content you're watching is in SDR and want to change to HDR, first make sure the content is available in HDR before trying to switch. Check your TV's settings regarding the HDMI signal format or bandwidth (the exact name varies per brand), and make sure your TV is set to the highest signal format possible because HDR requires more bandwidth. If you're playing video games, you likely have to check the system or game settings to enable HDR. Of course, if your content doesn't support HDR, there's nothing you can do, and you're stuck in SDR.
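To see why HDR needs more bandwidth, you can estimate the raw data rate of the picture itself. The sketch below only counts active pixels with full RGB color. It ignores blanking intervals, chroma subsampling, compression, and HDMI's link encoding, so the real requirements on the cable and port differ from these figures.

```python
# Sketch: raw (uncompressed) video data rate for active pixels only.
# Ignores blanking, chroma subsampling, and HDMI encoding overhead.


def raw_video_gbps(width: int, height: int, fps: int,
                   bits_per_channel: int, channels: int = 3) -> float:
    """Active-pixel data rate in gigabits per second."""
    return width * height * fps * bits_per_channel * channels / 1e9


if __name__ == "__main__":
    modes = [
        ("4k @ 60Hz, 8-bit (SDR)", 60, 8),
        ("4k @ 60Hz, 10-bit (HDR)", 60, 10),
        ("4k @ 120Hz, 10-bit (HDR)", 120, 10),
    ]
    for label, fps, bits in modes:
        print(f"{label}: {raw_video_gbps(3840, 2160, fps, bits):.2f} Gbps")
```

Going from 8-bit to 10-bit adds 25% more data per frame, and doubling the frame rate doubles it again, which is why the TV's HDMI signal format setting matters.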

Conclusion

HDR is a video signal that improves the overall picture quality by introducing brighter highlights and a wider range of colors compared to SDR content. The peak brightness, color gamut, and color bit depth are all important to deliver an excellent HDR experience. HDR content is sent with metadata that tells the TV how to display it, and formats with dynamic metadata change that information on a scene-by-scene basis so that the TV displays each scene at its correct brightness level. Every modern 4k TV supports HDR, but just because a TV accepts an HDR signal doesn't mean HDR content looks good, as you need a bright TV with good contrast and a wide color gamut to deliver good HDR picture quality.