HDR Native Gradient

How We Measure Gradient Handling On Dozens Of TVs Each Year

Color gradients are shades of the same color, or very similar colors, displayed on the screen. Whether you're watching a movie or TV show or playing a video game, you'll encounter color gradients in certain scenes.

A sky is one of the most common color gradients across all forms of content. For example, sunsets have numerous shades of orange and yellow next to each other, since the bottom section of the sky is yellow, gradually transitions to orange in the middle, and is usually dark blue at the top. When you watch a real-life sunset in person, the complex color gradients are smooth. However, you may see lines when viewing a sunset on your TV, which are typically referred to as banding but are also known as posterization. Banding can show up in any scene with color gradients, but having a TV with good gradient handling is especially important for HDR content since HDR uses a wider range of colors and has more color depth.

Test Methodology Coverage

This test methodology has been updated for our 1.10 test bench and is also valid for 1.11. Gradient handling on TVs tested before 1.10 was measured in 1080p SDR using a different approach, so those results and scores aren't comparable with our newer HDR Native Gradient category. Learn how our test benches and scoring system work.

| Test | 1.6 | 1.7 | 1.8 | 1.9 | 1.10 | 1.11 |
|---|---|---|---|---|---|---|
| HDR Native Gradient | ❌ | ❌ | ❌ | ❌ | ✅ | ✅ |
| Gradient (SDR) | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ |

When It Matters

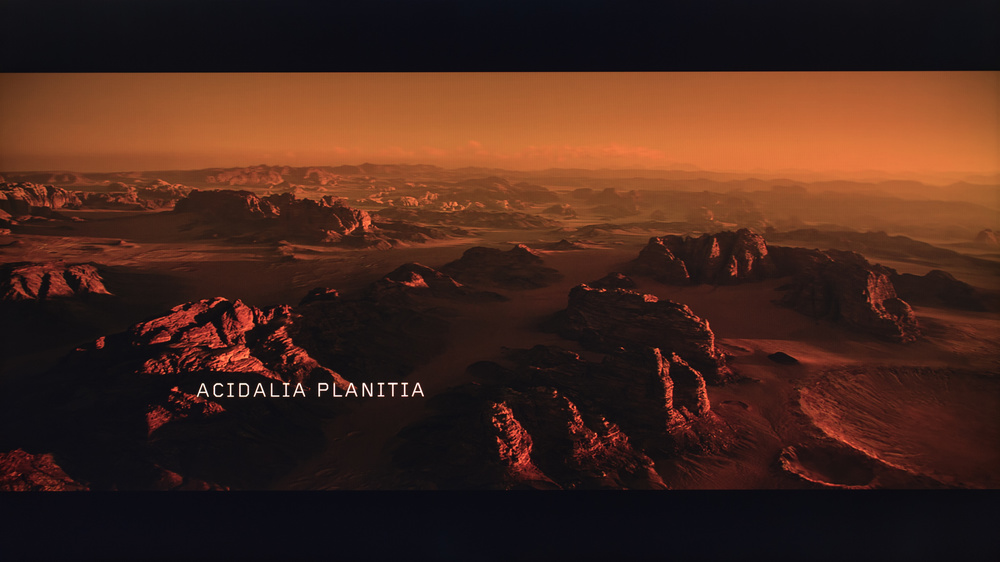

One of the primary benefits of HDR video is detailed and nuanced colors, so good gradient handling is important for seeing fine details in colors. A TV with good gradient handling displays smooth gradients, as seen in the photo above from Ridley Scott's "The Martian." There's no banding in the sky, and the shades of orange are smooth.

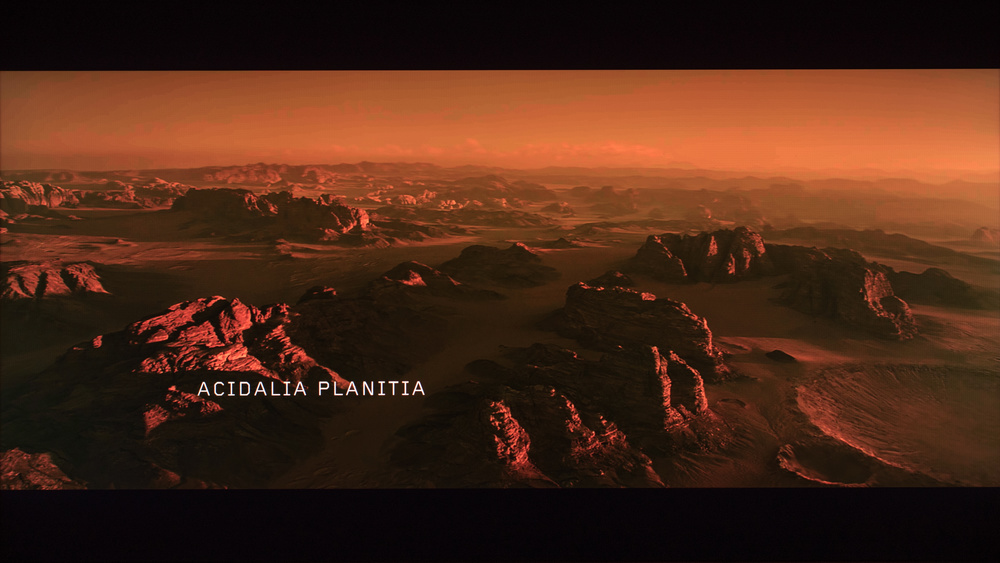

Above, you can see obvious banding in the same image since that TV's image processing doesn't handle color gradients well. Since HDR content is designed to provide a more realistic viewing experience, a TV with poor gradient handling can be distracting and break your immersion, although not everyone will be bothered by this.

Our Tests

Our HDR Native Gradient test is simple. Gradient performance with real content depends heavily on what the source does with the image, and we want to test the native capabilities of the display itself, so we use a source that delivers consistent results. We designed the test pattern in Photoshop at 4k (or 8k for 8k models) using the "Classic" gradation mode, as it delivers the most linear gradation. It was encoded with a 16-bit color depth and then converted to a 10-bit, HDR10, RGB full-range video. The pattern has eight separate lines, which are listed below.

- Line 1: 100% Black to 50% Gray

- Line 2: 50% Gray to 100% White

- Line 3: 100% Black to 50% Red

- Line 4: 50% Red to 100% Red

- Line 5: 100% Black to 50% Green

- Line 6: 50% Green to 100% Green

- Line 7: 100% Black to 50% Blue

- Line 8: 50% Blue to 100% Blue
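
As a rough illustration of the eight lines listed above, the sketch below builds each one as a linear ramp of 10-bit full-range RGB code values. It isn't the actual pattern file: the names `LINES`, `ramp`, and `build_pattern` are hypothetical, and it assumes that "50%" means half of the 10-bit code range, which may not match how the Photoshop source defines it.

```python
# Hypothetical sketch, not the actual test pattern file.
MAX_CODE = 1023  # largest 10-bit full-range code value

# (label, RGB channels driven, start level, end level). Levels are fractions
# of full scale; treating "50%" as half the code range is an assumption.
LINES = [
    ("100% Black to 50% Gray", (1, 1, 1), 0.0, 0.5),
    ("50% Gray to 100% White", (1, 1, 1), 0.5, 1.0),
    ("100% Black to 50% Red", (1, 0, 0), 0.0, 0.5),
    ("50% Red to 100% Red", (1, 0, 0), 0.5, 1.0),
    ("100% Black to 50% Green", (0, 1, 0), 0.0, 0.5),
    ("50% Green to 100% Green", (0, 1, 0), 0.5, 1.0),
    ("100% Black to 50% Blue", (0, 0, 1), 0.0, 0.5),
    ("50% Blue to 100% Blue", (0, 0, 1), 0.5, 1.0),
]


def ramp(channels, start, end, width):
    """Return `width` RGB tuples rising linearly from `start` to `end`."""
    pixels = []
    for x in range(width):
        t = x / (width - 1)  # 0.0 at the left edge, 1.0 at the right edge
        level = int((start + (end - start) * t) * MAX_CODE + 0.5)
        pixels.append(tuple(level * c for c in channels))
    return pixels


def build_pattern(width=3840):
    """Map each line label to one row of pixels, `width` pixels wide."""
    return {label: ramp(ch, s, e, width) for label, ch, s, e in LINES}
```

Under these assumptions, each half-range ramp spans only about 512 code values across 3840 pixels, so every step is several pixels wide even in a perfect 10-bit signal. That's why any extra banding introduced by the TV stands out on this kind of pattern.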

We then upload the test pattern to our Murideo Seven Signal Generator so we can directly control how the image is sent to the TVs we test. We ensure all the TVs have their gradient smoothing features disabled, hence the "Native" in our test name. We do this because these optional smoothing features remove fine details from the image, and we want to test the TV's ability to display gradients while leaving details intact. Once we see how much banding there is in each line, we subjectively assign a score to each one and then average the scores of all lines to come up with our final score.
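
The averaging step can be sketched as follows. This is only an illustration: the function name `final_score`, the equal weighting of all eight lines, the rounding to one decimal, and the example scores are all assumptions, since the exact scale and rounding aren't specified here.

```python
def final_score(line_scores):
    """Average the subjective per-line scores into a single result.

    Assumes one score per test-pattern line (eight in total) and an
    unweighted mean rounded to one decimal place.
    """
    if len(line_scores) != 8:
        raise ValueError("expected one score for each of the eight lines")
    return round(sum(line_scores) / len(line_scores), 1)


# Made-up scores for illustration only, not real test results.
example_scores = [9, 9, 8, 8, 7, 9, 9, 9]
print(final_score(example_scores))
```

Because the result is a plain average, one line with heavy banding lowers the final score without dominating it.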

Our Photos

Our test captures the appearance of gradients on a TV's screen. This is to give you an idea of how well the TV can display shades of similar color, with worse reproduction taking the form of bands of color in the image. Because this photo's appearance is limited by the color capabilities of your computer, screen, browser, the type of file used to save the image, and even how your display is calibrated, banding that is noticeable in person may not be as apparent in the image. You may also see banding in the photo that's caused by your own display and isn't actually present on the TV.

Above is a photo of the gradient handling on the Samsung S90D/S90DD OLED, which has the best gradient handling of any TV we've tested as of the date of this article's publication, with only some very minor banding in the '100% Black to 50% Green' line.

Using the same test pattern for the Sony A80J OLED (pictured above), you can see visible banding in almost all lines since that TV has the worst gradient handling of any TV we've tested as of the date of this article's publication. You can see what exceptional and poor gradient handling looks like by comparing the amount of banding in each photo.

Additional Information

Why is there banding in gradients?

Banding takes one of two forms: either two colors that are supposed to look nearly the same look very different, or two colors that are supposed to look different end up appearing the same. Both happen because the TV can't display the necessary color, so it groups it with another color that it can display.

Therefore, if you see lots of banding in a gradient, it means one of three things:

- The signal doesn't carry enough bits to differentiate lots of similar colors.

- The screen's bit depth is not high enough to follow the detailed instructions of a high-bit signal.

- The TV's processing is introducing color banding.

With a high bit-depth signal played on a TV that supports it, more information is used to determine which colors are displayed. This allows the TV to differentiate between similar colors more easily, minimizing banding.
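
To make the effect of bit depth concrete, here's a minimal sketch that quantizes an ideal linear ramp at two bit depths and counts how many distinct steps survive. The helper names `quantize` and `band_count` are hypothetical, and the model ignores the HDR transfer function and any TV processing, so treat it as an illustration rather than a model of a real display.

```python
def quantize(value, bits):
    """Map a 0.0-1.0 intensity to the nearest code value at `bits` of depth."""
    max_code = (1 << bits) - 1
    return int(value * max_code + 0.5)


def band_count(start, end, width, bits):
    """Count the distinct code values in a linear ramp `width` pixels wide."""
    levels = {
        quantize(start + (end - start) * x / (width - 1), bits)
        for x in range(width)
    }
    return len(levels)


# A black-to-50% ramp across a 3840-pixel-wide image:
print(band_count(0.0, 0.5, 3840, 8))   # 129 distinct steps
print(band_count(0.0, 0.5, 3840, 10))  # 513 distinct steps
```

With roughly four times as many steps over the same width, each step in the 10-bit ramp is about a quarter as wide and a quarter as large as in the 8-bit one, which makes the transitions much harder to see.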

How to get the best results

The TV needs to be able to accept true 10-bit signals from the source for more detailed color. For minimal banding, you must watch a 10-bit media source on a TV that can display 10-bit color. When watching or playing HDR media from an external device, like a UHD Blu-ray player or video game console, you should also ensure that the TV's enhanced signal format setting is enabled for the input in question. Leaving this disabled may result in banding.

If you've followed these steps and still see banding, try turning off any enabled processing features. Things like 'Dynamic Contrast' and white calibration settings can result in banding in the image. A lot of TVs have a gradient smoothing feature that can be enabled. These features can help reduce visible banding, but they also tend to remove fine details from the image, so a trade-off has to be made. If you still see banding after trying different settings, it means the TV has poor gradient handling, and there's not much you can do about it.

Color depth: 10-bit vs. 8-bit + FRC

Color depth is the number of bits of information used to tell a pixel which color to display. 10-bit color depth means a TV uses 10 bits for each of the three subpixels of a pixel (red, green, and blue), compared to the standard 8 bits. This allows a 10-bit TV to display many more colors: 1.07 billion versus 16.7 million on 8-bit TVs. Although most modern TVs display a 10-bit signal, the majority of them don't use native 10-bit panels. Instead, they use a form of temporal dithering known as Frame Rate Control (FRC), which is when a pixel quickly flashes between two different colors, making it look to viewers like it's displaying the average color. 8-bit TVs can use dithering to display an image that has a 10-bit color depth and can even have smoother gradients than a native 10-bit panel. We don't differentiate between a TV with a native 10-bit panel and one that uses 8-bit + FRC since we score the end result of how smooth the gradient is, not how the TV achieves it.
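
The color counts above follow directly from the bit depth, and the idea behind FRC can be shown with a simplified model. In the sketch below, `color_count` and `frc_frames` are hypothetical names, and the fixed four-frame cycle is an assumption made for illustration. Real panels use more elaborate spatial and temporal patterns that vary by manufacturer.

```python
def color_count(bits_per_channel):
    """Number of displayable colors with three subpixels per pixel."""
    return (2 ** bits_per_channel) ** 3


def frc_frames(target_10bit):
    """Approximate a 10-bit level on an 8-bit panel over four frames.

    Simplified model: one 8-bit step spans four 10-bit steps, so the panel
    shows the next-higher 8-bit level in `remainder` out of four frames.
    """
    low, remainder = divmod(target_10bit, 4)
    high = min(low + 1, 255)  # clamp at the top of the 8-bit range
    return [high if i < remainder else low for i in range(4)]


print(color_count(8))   # 16777216, about 16.7 million
print(color_count(10))  # 1073741824, about 1.07 billion

frames = frc_frames(514)  # a 10-bit level that falls between two 8-bit levels
print(frames)                     # [129, 129, 128, 128]
print(sum(frames) / len(frames))  # 128.5, which is 514 / 4
```

In this model, the time-averaged level lands between the two 8-bit levels the panel can physically show, which is how an 8-bit + FRC panel can approximate the in-between shades of a 10-bit signal.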

Does Resolution Have an Effect?

The resolution doesn't affect gradient handling. Resolution defines the number of pixels, while color depth is the number of colors each pixel displays, so having more pixels doesn't change anything. An 8k TV won't inherently have better gradient handling than a 4k TV just because it has a higher resolution.

Gradient enhancement settings

Most modern TVs have settings specifically meant to improve gradient handling. Enabling these can help smooth out gradients, but it comes at the cost of losing fine details. The setting names for each brand are listed below:

- Hisense: Smooth Gradient

- LG: Smooth Gradation

- Samsung: Noise Reduction

- Sony: Smooth Gradation

- TCL: Gradation Clear

- Vizio: Contour Smoothing

Different Testing Between Monitors and TVs

We also test for gradient handling on monitors, but we do so differently than on TVs. Because of this, you can't compare the gradient scores between the two products.

Learn more about gradient handling on monitors.

Conclusion

Gradient handling defines how well a TV displays shades of similar colors. A TV with good gradient handling can display an image with shades of very similar colors well, without any visible banding. Good gradient handling is especially important for HDR content since HDR content displays more colors than SDR content.