Over the last ten years, we've brought you more than 450 unbiased, trustworthy, and user-centric TV reviews. We buy and test more than 40 TVs each year, completely independently and with no cherry-picked manufacturer samples. From product selection to our user-centric, transparent approach to testing, it's not just a question of watching The Office reruns as a group and making up the scores as we go. A lot goes into testing a TV, from specialized equipment like colorimeters and spectroradiometers that measure picture quality to custom-built tools that measure input lag and response times to help you find the best gaming TV for your needs. A finished TV review contains over 350 individual test results and thousands of measurements, and the final written review is, on average, about 5,000 words.

If you've ever wondered what goes into producing our TV reviews, you've come to the right place. Below, we break down each step behind the objective, user-centric reviews you've come to expect.

Product Selection

Before we get into the nitty-gritty of our testing process, let's take a quick look at everything that needs to happen before we can start testing.

The first step in our review process is deciding which TVs we buy and test. If you include all budget brands, there are over 100 TVs released each year. We can't test them all, so we have to choose which ones make the cut. Like many of you, we're avid TV enthusiasts, and we follow the news closely, so we usually know which products are coming to the market long before they're released. After over ten years of reviewing TVs, we also know which ones you, our readers, tend to care the most about. We've also started sending teams to events like CES 2024 and the Sony 2024 TV Event to better understand what manufacturers are focusing on, which helps us deliver the highest-quality reviews possible.

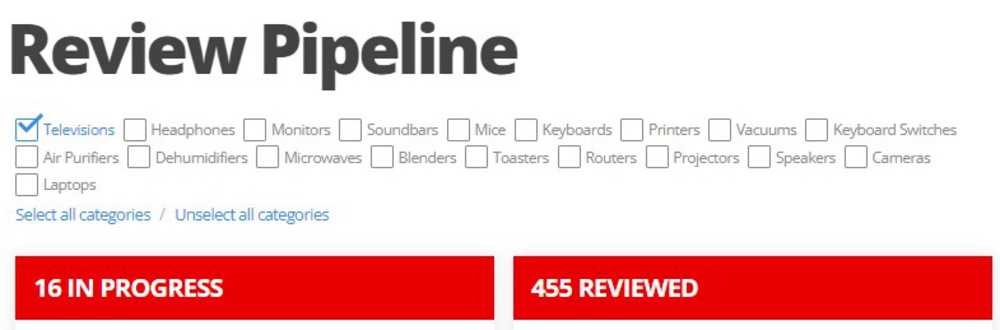

Most of our TVs are chosen based on how popular we expect them to be, and we usually buy the popular models as soon as they're released. That's not the only way we choose TVs, though. We also want to hear from you, so every 60 days, we buy a TV based on your votes. Your votes matter, so make sure you vote on the TVs you want us to purchase and test. Between launch purchases and voted picks, we often have a lot of TVs in the pipeline at once. The review pipeline page is your one-stop shop for keeping track of the reviews you care about and voting on the ones you want us to buy.

Unlike most websites, we don't get our review units directly from the manufacturers. Instead, we buy all of the review products ourselves. We buy them just like you would, from Amazon.com, BestBuy.com, Walmart, and other retailers, mostly in the United States. This means the manufacturers don't handpick our units; they represent what you would buy yourself. It also means that we usually get our TVs later than other reviewers.

Testing

Before we get into the fine details of our testing process, let's examine our overall philosophy and why we test TVs the way we do.

Philosophy

Our reviews are heavily built around standardized testing; that is to say, we use the exact same test patterns and materials for every TV we test. You might be wondering why we do this. Does it make sense to test a $5,000 high-end Sony TV with the same materials used to test a $300 Fire TV? There's a very clear advantage to this approach. Using the same materials provides a level playing field. Since everything is tested the same way, you can directly compare the Sony TV and the Fire TV (see an example of how these compare) and know exactly how much better the Sony model is. It's also important to note that price is not a factor in our scores.

This standardized testing should be seen as a common baseline, but it's not necessarily the final result. Before starting any TV review, one of our first steps is to read about the product in places like Reddit and AVS Forum, so we also know what everyone else is saying about the TV. This allows us to run extra tests to ensure we're testing everything you care about. We can't test every possible combination of settings and external devices, though, so if there's something you really care about that you want us to test, make sure you reach out on our forums or various social platforms and let us know!

Once we've unpacked and verified that a new TV is ready for testing, what happens next? The first step is for one of our two professional photographers to photograph the TV's physical aspects and overall design. Like our test results, our photos are designed to give you a consistent way to compare the various characteristics of a TV. Our consistent photo methodology ensures that you can directly compare the back photos of different TVs and look at things like each TV's cable management. Once the photos are done and a TV is scheduled for testing, the TV is assigned to one of our few highly trained testers. That one tester will be responsible for testing every aspect of that TV's performance from start to finish, which usually takes about six working days to complete.

Testing Process

When testing a TV, some tests rely on an accurate calibration, and some don't. Therefore, we break down our testing into two steps: first, we run a gamut of tests that aren't impacted by calibration, like input lag, VRR support, response time, and supported resolutions. Then, we calibrate the TV in SDR and do a partial calibration in HDR, and we run the rest of the tests that have to be done with the TV in its calibrated state. Some individual tests are still done without our calibrated settings. However, we don't break down the results into calibrated vs. uncalibrated modes.

Our testing process is divided into six categories: design, picture quality, motion, inputs, sound, and smart features. You can see each of these with their respective tests below, and you can click the links for more in-depth articles on how we perform each test. Below, we'll provide a brief overview of each category. Each section is presented in the order in which it appears in the final published review, not the order in which we test it.

| Design | Picture Quality | Picture Quality (cont.) | Motion | Inputs | Other |
|---|---|---|---|---|---|
| Style | Contrast | Gray Uniformity | Response Time | Input Lag | Frequency Response |
| Accelerated Longevity Test | Local Dimming | Black Uniformity | Flicker-Free | Supported Resolutions | Distortion |
| Stand | HDR Brightness | Viewing Angle | Black Frame Insertion (BFI) | PS5 Compatibility | Interface |
| Back | PQ EOTF Tracking | Reflections | Motion Interpolation | Xbox Series X\|S Compatibility | Ad-Free |
| Borders | SDR Brightness | HDR Native Gradient | Stutter | Inputs Specifications | Apps and Features |
| Thickness | Color Gamut | Low-Quality Content Smoothing | 24p Judder | Total Inputs | Remote |
| Build Quality | Color Volume | Upscaling: Sharpness Processing | Variable Refresh Rate | Audio Passthrough | TV Controls |
| | Pre/Post Calibration | Pixels | | | In The Box |
| | | | | | Misc |

Testing conditions are also a very important consideration. With very few exceptions, almost all of our tests are done in a dark, light-controlled room, as we don't want stray ambient light impacting our results. Our audio testing is done in a different room that's treated for ambient sound instead of light (although it can also be used as a dark room if we need it). Like your car in the winter, TVs also need time to warm up, so we also make sure to properly warm up the TV before any test that this might impact.

Finally, our testing uses a mixture of test slides and real content. We have access to a lot of different sources, including streaming video services, test PCs, a Murideo Seven-G signal generator, and 4k UHD Blu-ray players like the Panasonic UB-820. We use test patterns for most of our objective testing and measurements, but most people don't watch test patterns all day, so it's important that we understand what you'll see in real life. We'll often use real content to confirm what we're seeing on test patterns to ensure that our results represent what you'll see at home.

Throughout the review process, you can track the status of your favorite products. Our testing status tracker will show you exactly how many tests have been completed out of 367 total tests as of TV methodology version 1.11. Not all tests are created equal, though; some consist of thousands of individual measurements, so they can be time-consuming!

Design

You might be wondering how exactly we can test a TV's design. Well, we don't really. Most of this section is just measurements of different aspects of the TV's design so you can decide if the TV fits well in your setup. Unlike most of our testing, these results are only valid for the specific size we bought since a TV of a different size will obviously have different measurements. We measure the stand and look for alternate stand positions, as these aren't always advertised by the manufacturer, and measure those as well. We also check the design of the back, looking at things like the VESA mounting standard, cable management options, and how accessible the ports are if you decide to wall-mount the TV.

Picture Quality

Picture quality is one of the most important aspects of a TV's performance. After all, you're not buying an expensive speaker; you're buying a TV, and you want it to look good. It goes without saying that our picture quality testing is extensive and takes up the bulk of our testing time.

We use two main tools for our testing: a Colorimetry Research CR-100 colorimeter and a CR-250 spectroradiometer. To start testing the picture quality, we first have to calibrate our CR-100 to the TV we're testing. To do that, we use the CR-250 to measure the specific light spectrum on the TV, creating a calibration profile unique to that TV. We load that profile into the CR-100, and that's used for all measurements going forward. We have to do this because TVs don't all produce light the same way; there are many different types of TV panels, and even between similar models, there are slight differences in the light they produce. Two colors that look similar to our eyes can actually measure differently depending on the exact spectra of light used to produce them. This is known as metamerism. Creating a profile for each TV we test ensures a higher degree of accuracy in our measurements.
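As a rough illustration of what a per-TV profile can look like, the sketch below builds a 3x3 correction matrix from red, green, and blue patch readings taken with both meters, then applies it to a colorimeter reading. This is one common way to profile a colorimeter against a spectroradiometer, not necessarily what the CR-100 and its software do internally. All function names and XYZ values here are hypothetical.

```python
# Sketch of a matrix-based colorimeter correction (an assumed approach;
# every reading below is a made-up XYZ value, not a real measurement).

def inverse_3x3(m):
    """Invert a 3x3 matrix using the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("patch readings are not linearly independent")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]

def matmul_3x3(x, y):
    """Multiply two 3x3 matrices."""
    return [[sum(x[r][k] * y[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]

def columns(patches):
    """Arrange per-patch XYZ triples as the columns of a 3x3 matrix."""
    return [list(row) for row in zip(*patches)]

def build_profile(reference_patches, colorimeter_patches):
    """Return M so that M applied to each colorimeter patch gives the reference patch."""
    return matmul_3x3(columns(reference_patches),
                      inverse_3x3(columns(colorimeter_patches)))

def apply_profile(profile, xyz):
    """Correct a single colorimeter XYZ reading with the profile matrix."""
    return [sum(profile[r][k] * xyz[k] for k in range(3)) for r in range(3)]

# Hypothetical XYZ readings of red, green, and blue patches on the same TV.
reference = [(41.2, 21.3, 1.9), (35.8, 71.5, 11.9), (18.0, 7.2, 95.0)]    # spectroradiometer
colorimeter = [(40.0, 20.5, 2.1), (36.5, 73.0, 12.5), (17.2, 6.9, 91.0)]  # colorimeter

profile = build_profile(reference, colorimeter)
corrected_red = apply_profile(profile, colorimeter[0])
print([round(v, 2) for v in corrected_red])
```

By construction, the corrected primaries land on the reference readings, and other colors on that panel are corrected by the same matrix.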

Once we've calibrated our instruments, it's time to calibrate the TV. Using our CR-100 colorimeter and CalMAN software, we manually calibrate the TV using the 2- and 10/20-point calibration settings. We're aiming for the best results given a relatively short time limit. It's possible to achieve better results on most TVs than what we post on our settings pages, but they provide a good starting point and help us evaluate how easy it is to calibrate any given TV.

We won't get into the specifics of each picture quality test here since there are a lot of them. If you'd like to learn more about the testing methodology for a specific picture quality test, check out our TV articles or click "Learn about _____" on any review page. Most of our tests are done under very similar conditions: a completely dark room, with the calibrated settings above, and using the CR-100 and CR-250 meters mentioned above. Some tests, like gray/black uniformity, are done with a camera and photo processing software. We still take most of those in a completely dark room, and the camera settings are adjusted to ensure the picture in the review is as close as possible to what we see when we're using the TV in our testing rooms.

Motion

Motion testing doesn't require the TV to be in its calibrated mode; it just requires the most accurate picture settings available before calibration. The first step in running our gamut of motion tests is to measure the TV's response time using our custom-built internal input lag and response time tool. This tool allows us to measure how quickly the TV can change from one frame to the next. This test is highly sensitive to the temperature of the TV, so, as we mentioned above, we need to warm up the TV properly before running these tests. We use the response time results to calculate the amount of stutter on the TV.
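To make the link between response time and stutter concrete, here's a minimal sketch. It assumes stutter can be approximated by how long each frame sits static on screen, which is the frame duration minus the time spent transitioning. The function name and the sample response times are hypothetical, and the exact formula behind the published stutter results may differ.

```python
def frame_hold_time_ms(fps: float, response_time_ms: float) -> float:
    """Approximate time a frame stays static: frame duration minus transition time."""
    frame_time_ms = 1000.0 / fps
    # A transition longer than the frame itself leaves no static time at all.
    return max(frame_time_ms - response_time_ms, 0.0)

# Hypothetical response times for two panels showing a 24 fps movie.
for name, response_ms in [("fast panel", 0.3), ("slow panel", 15.0)]:
    hold = frame_hold_time_ms(24, response_ms)
    print(f"{name}: each frame is static for about {hold:.1f} ms")
```

Under this assumption, a faster panel holds each frame still for longer, so low frame rate content looks more stuttery on it than on a slower panel.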

For the other related motion tests, we use a variety of different tools to check for things like VRR support and judder removal and to measure the TV's backlight flicker frequency. Flicker is a very important factor for many people, so we check this with various settings, as some TVs change their backlight flicker depending on the selected picture mode.

Inputs

Like the motion testing above, most of the input tests are done using our custom-built internal input lag and response time tool. As the name suggests, this tool enables us to measure a TV's precise input lag and overall response time. With the help of dedicated test PCs, we measure the input lag across a wide range of supported resolutions and refresh rates. That same PC is then used to determine which resolutions the TV supports and check for VRR support across multiple resolutions and refresh rates. We back up these results by also testing the TV with an Xbox Series X and PS5, as there are occasional differences in the formats supported from a PC vs. those supported from a console.

We also look at the TV's physical inputs, and using the Murideo Seven-G signal generator, we evaluate a few things, including the maximum bandwidth supported by the HDMI ports. That same signal generator is then used to test for the various audio passthrough formats to make sure you can enjoy your favorite movies to their fullest, with high-quality audio to match the improved visuals you'll get from a new TV.
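To show why HDMI bandwidth matters, the sketch below estimates a lower bound on the data rate of an uncompressed video signal from its resolution, refresh rate, and bit depth. It's a back-of-the-envelope calculation with a hypothetical function name, not how the signal generator measures bandwidth, and it ignores blanking intervals and link encoding overhead.

```python
# Rough lower bound on the data rate of an uncompressed RGB / 4:4:4 signal.
# Blanking and link encoding overhead are ignored, so the real requirement
# on the HDMI link is higher than these figures.

def active_video_gbps(width, height, refresh_hz, bits_per_channel, channels=3):
    """Return the active-pixel data rate in gigabits per second."""
    bits_per_second = width * height * refresh_hz * bits_per_channel * channels
    return bits_per_second / 1e9

signals = [
    ("4k @ 60Hz, 8-bit", 3840, 2160, 60, 8),
    ("4k @ 120Hz, 10-bit", 3840, 2160, 120, 10),
]
for label, width, height, hz, depth in signals:
    rate = active_video_gbps(width, height, hz, depth)
    print(f"{label}: at least {rate:.2f} Gbps")
```

The active pixel data of the second signal alone exceeds the 18 Gbps maximum of HDMI 2.0, which is why 4k @ 120Hz without chroma subsampling or compression needs a higher-bandwidth HDMI 2.1 port.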

Sound

Our sound testing is fairly simple at the moment. Playing audio from a PC with a Focusrite Scarlett 2i2 external sound card, we use a single Dayton Audio EMM-6 Electret Measurement microphone to measure the frequency response of the TV with various sound sweeps. Picture quality doesn't matter here, so we can do this test with or without our calibrated settings, but we turn off all sound post-processing features on the TV. For TVs with a room correction feature, we enable it and place it with the microphone.

Smart Features

The vast majority of TVs sold these days have a built-in smart system. Our testers spend over a week with each TV, and during that time, they spend considerable time just interacting with the system. That hands-on experience helps us rate the overall smart features. We also run a few dedicated tests, like timing how long it takes to switch to YouTube, making sure certain apps are supported, and checking that HDR is working properly. We check the TV for ads, which almost all TVs have these days, and look for any settings that could reduce the number or type of ads we see. Finally, we rate the remote on its ease of use and check for things like voice control on both the remote and the TV.

Writing

Once the testers are done testing a TV, a peer-review process with the writers and testers takes place to validate the results. Transparency is key for us, and if we make a bold claim about a product, we want to ensure we have the data to back it up. We look at other similar models, including predecessors, to make sure we've dotted our 'i's and crossed our 't's. If any unusual results come up, we'll perform additional testing, including comparing TVs side-by-side, to make sure that our objective measurements match what we see with our own eyes and, more importantly, that they match what you'll experience at home. We're uniquely positioned to do this, as unlike most reviewers, we buy every product we review ourselves, so we still have almost every TV we've tested over the last few years.

Once the team has validated the results, early access is published to the website for our insiders, and the writing process begins. It takes about three days on average to convert the nearly 400 individual test results and thousands of measurements in a TV review into the finished product you see on the website. The writing process culminates in an extensive peer-review process before going off to our fabulous content editors to make sure everything is hunky-dory before publishing. Finally, once the entire review has been checked, rechecked, and checked again, the final review is published for the entire world to enjoy. All said and done, the entire TV review process takes about two weeks from unpacking to publishing, and although the majority of the work is done by three people (photographer, tester, and writer), it takes the combined team effort of about a dozen people to make each review possible.

Recommendations

After we've tested and published our findings, we also take a step back and look at our recommendation articles. These curated best-of articles are updated frequently, but sometimes a TV comes along that just can't wait. We look at the overall market position of each product, how it performs in our scoring, and its overall impact on the consumer. We want to make sure you get your money's worth, so these articles don't just look at the score; they take other factors like pricing and availability into account.

The recommendation articles are written by the same team of dedicated TV writers who know the ins and outs of the TV market and exactly where a product might fit. Since the same people work on the reviews and the recommendations, they also know what our scoring doesn't catch and make sure to take those extra factors into account when deciding which products are worth recommending and which ones you should stay away from.

All the data and results that go into making our recommendations are also available for you to parse and make your own decisions. With tools like our table tool, recommendation tool, and compare tool, you can easily compare hundreds of TVs across dozens of metrics and make your own picks.

Retests, Burn-In, And Longevity

Unlike most reviewers, we buy everything we test and keep it after testing. This provides us with a unique advantage: we can go back and retest things. We do this often for various reasons. Our test methodology system enables us to retest previously reviewed products under a new methodology so you can see how new TVs perform and how they compare to previous generations. We can also go back and retest TVs if they receive firmware updates. Buying everything has also allowed us to run not one, not two, but three real-life burn-in and longevity tests to directly study how long a new TV should last.

Conclusion

If you want to learn more about our overall pipeline process, from purchasing to unpacking, testing, writing, and finally publishing, check out our overview video here. As for testing, the above was just a brief overview of the work that goes into testing a TV. If you'd like to learn more about our specific testing methods, check out our TV articles or click "Learn about _____" on any review page.