How We Test Mice

Transforming Our Data Into Comparable Reviews

We test dozens of mice annually, staying completely independent and unbiased by purchasing each one ourselves. This way, we can be sure we're not testing cherry-picked review units. But we don't just play a few rounds of Fortnite with each mouse and give our vibe-based feelings. We test each mouse on the same standardized test bench to ensure you can compare one mouse to another.

Our pipeline relies on a team of experts and probably has more moving parts than you'd expect. We want to show you how it works so you know you can trust our results. We'll examine how we buy our products, conduct our tests, and write our reviews. Producing a review from start to finish can take days or even multiple weeks.

Product Selection

With hundreds of mice hitting the market every year, we can't test them all. Our selection process largely hinges on a mouse's current or anticipated popularity. We analyze search trends and closely monitor which products people are talking about online. While we aim to purchase major releases as soon as they drop, we also rely on input from you and the rest of the enthusiast community to guide our choices.

We love hearing from you in our forums, but the most direct way you contribute is by voting for which mouse we'll review next. We restart our polls every 60 days and buy the product that receives the most votes within each cycle.

Have your say on which mice we test on our Voting page.

Philosophy

We purchase our own products and put them under the same test bench so you can easily compare the results. No cherry-picked units sent by brands. No ads. Only real tests.

That's our slogan, but it's not just an elevator pitch. We hope it highlights the underlying motivations behind how and why we test the way we do.

How we buy products

We buy our test units just like you would. We don't accept review samples from brands and do our best to purchase anonymously from major retailers. This way, we avoid hand-picked units that could skew our results. The trade-off is that we don't get products as quickly as other reviewers who receive early samples and often publish their reviews the moment a mouse hits the shelves.

Standardized tests

We use a standardized test bench, which is the key to making our reviews useful when you're deciding what to buy. Testing every product the same way means you can use our specialized tools to compare products side-by-side on an even playing field and determine which mouse best suits your needs.

We also have a powerful custom table tool that lets you view all the mice we've tested with adjustable filters. You can choose to display all the mice we've tested by button count, sensor model, or even every mouse under a certain weight or within a certain price range, just to give a few examples.
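As a rough illustration of the kind of filtering described above, here is a minimal Python sketch. The `Mouse` record, its field names, the `filter_mice` helper, and the sample entries are all hypothetical placeholders for illustration; they aren't the table tool's actual schema, and the numbers aren't real measurements.

```python
from dataclasses import dataclass


@dataclass
class Mouse:
    # Hypothetical record; field names are assumptions for illustration.
    name: str
    weight_g: float
    price_usd: float
    button_count: int


def filter_mice(mice, max_weight_g=None, price_range=None, button_count=None):
    """Return the mice that satisfy every filter that was supplied."""
    results = []
    for m in mice:
        if max_weight_g is not None and m.weight_g > max_weight_g:
            continue
        if price_range is not None and not (price_range[0] <= m.price_usd <= price_range[1]):
            continue
        if button_count is not None and m.button_count != button_count:
            continue
        results.append(m)
    return results


# Made-up sample entries, not real products or measurements.
SAMPLE = [
    Mouse("Mouse A", 58.0, 149.99, 5),
    Mouse("Mouse B", 95.0, 49.99, 8),
    Mouse("Mouse C", 62.5, 79.99, 5),
]

# Every mouse under 65 g that also falls within a 50 to 100 USD price range.
light_and_affordable = filter_mice(SAMPLE, max_weight_g=65, price_range=(50, 100))
print([m.name for m in light_and_affordable])
```

Leaving a filter unset skips that check, which mirrors how adjustable filters typically narrow a table only along the dimensions you choose.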

We're also constantly working to improve our test benches. We adapt our tests to new technology and to feedback from the community. You can track all the changes we introduce to our test bench in our changelogs. However, this approach also means that when we update our methodology, some older, less relevant products are left behind on older test benches. Over time, depending on how future test benches change, comparisons to those older products may become less applicable, but this remains an important part of how we improve.

Testing

Our mouse reviews are made up of three sections: Design, Control, and Operating System and Software. The following is a quick overview of each section that makes up our testing suite. In some cases, we have dedicated pages with much more precise detail about our methodology, particularly for our most sensitive and important performance tests: Click Latency, Sensor Latency, and CPI. If you're curious, see a list of all our mouse articles.

Design

The first section of our review covers a lot of ground. It has basic information like a mouse's dimensions and weight that we measure with calipers and an electronic scale.

It also covers more subjective elements, like how the mouse actually looks, whether it suffers from any build quality issues, and how suitable it is for different hand sizes and grip types.

This section is also home to our 3D Shape Compare Tool. We produce a detailed, full 3D scan of each mouse we test using an ARTEC Space Spider 3D scanner. Our tool allows you to explore the scans of any mice in our database. Align them perfectly to compare their sizes, rotate them, or even overlap them to see exactly which curves are different and where some of the biggest shape differences are. This can be an especially powerful tool for comparing shapes you may already be familiar with. With hundreds of models scanned in our database, it's fairly likely that you can compare the mouse you're currently using to one you may be interested in buying.

Control

In this section, we evaluate performance data. We test main button switch actuation, the number of programmable inputs, and all sensor specifications. This is also where we test raw gaming potential across three major tests: CPI, click latency, and sensor latency.

We always test mice under optimal latency conditions. This includes setting debounce as low as possible, enabling any low-latency or competition modes, disabling RGB effects, and using the maximum polling rate. Evaluating each mouse at its peak performance is critical for making results fairly comparable.

In order to provide a well-rounded understanding of these products, we often include supplementary measurements at lower polling rates or less optimized settings to see how much performance you leave on the table, especially for wireless mice where you may decide to use lower settings to conserve battery.
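One reason polling rate matters here is simple arithmetic: the polling rate sets how often the mouse reports to the computer, so a lower rate means a longer gap between reports. The short Python sketch below only shows that conversion. It isn't part of our test procedure, and the function name and the list of rates are illustrative assumptions.

```python
def report_interval_ms(polling_rate_hz: int) -> float:
    """Time between consecutive reports, in milliseconds, at a given polling rate."""
    if polling_rate_hz <= 0:
        raise ValueError("polling rate must be positive")
    return 1000.0 / polling_rate_hz


# Illustrative set of polling rates; not a list of settings we test.
for rate in (125, 500, 1000, 4000, 8000):
    print(f"{rate:>5} Hz -> one report every {report_interval_ms(rate):.3f} ms")
```

For example, dropping from 1000 Hz to 125 Hz stretches the gap between reports from 1 ms to 8 ms, which is the kind of trade-off you accept when lowering settings to save battery.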

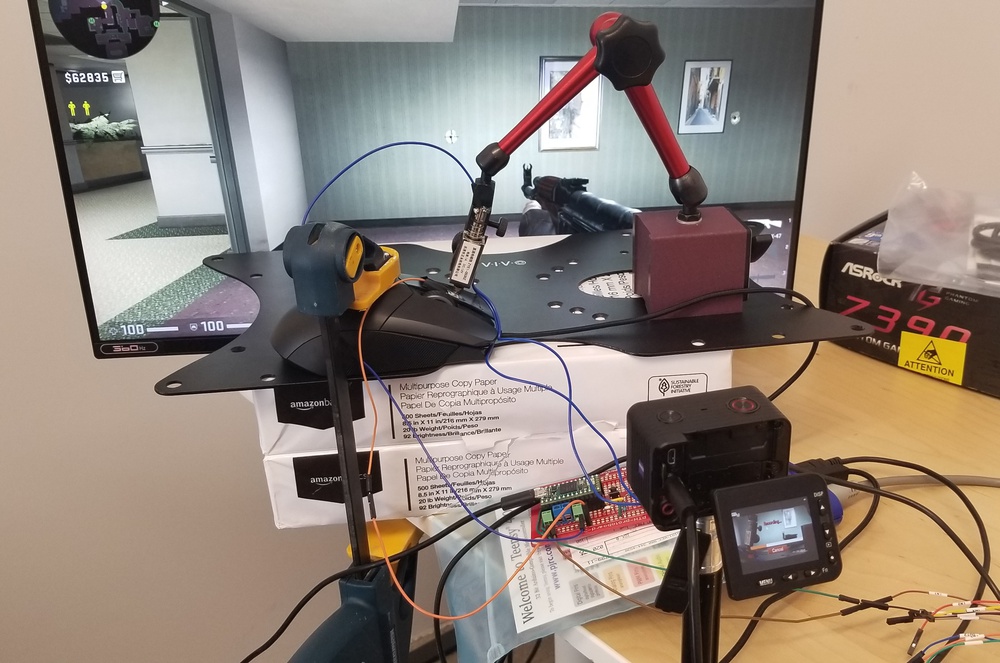

We also film each test with an associated in-game video. This video element only serves as a way for you to validate that we properly ran our tests, but the video itself isn't used for measuring. This is important because we're not testing end-to-end latency. We intercept input information directly from the mouse using a Beagle 480 USB analyzer.

Operating System and Software

This final section is relatively straightforward. We assess a mouse's compatibility across different operating systems and evaluate any software options. We'll often comment on how easy its software is to use and whether it has any new or unusual features. In some cases, we'll even comment on how well the software is received within the wider community to give you advance warning of issues or complaints people may be having.

Writing

Once testers finish testing a mouse, we begin the first of two peer review processes between writers and testers to validate the results. Although this step is invisible in the final review, it's a crucial quality check. We cross-reference results against similar models and leverage expert knowledge alongside our own expectations and community feedback. We identify discrepancies, ask questions, and run retests as needed. This process can take anywhere from twenty minutes to several days, depending on the complexity of the findings. Once writers and testers agree on the results, we publish early access results for our insiders and begin writing the review.

The actual text in our reviews is wide-reaching. It includes simple elements like an introduction, which is a brief overview of some of the standout aspects and advertised features. We also produce more targeted blurbs about how the mouse performs for specific use cases. Additional sections include more free-form boxes where we compare size and model variants within the same lineups as well as comparisons to other similar mice in the marketplace.

While we expect our test results to speak for themselves for a knowledgeable audience, many of our tests have supporting text to provide extra context if you're unsure how to interpret the results. This text will often also offer comparisons to other products and occasionally explore unusual results or exceptions in more technical detail.

Once the writing is finished, a second round of peer review begins. A different writer reads through the review and may pose questions, make corrections, or suggest changes for readability and comprehension. Afterward, the tester who performed all the tests on this mouse then validates all writing for technical accuracy.

Once the validation ends, the review is sent to our editing team, who do an enormous amount of work behind the scenes to make sure the final review is properly formatted, consistent, and error-free. They also make sure each review adheres to our internal style guidelines and are ultimately responsible for maintaining a high standard of quality for our readers.

Recommendations

Our mouse recommendations fall outside the scope of how we test and publish a single review, but they're closely related. These recommendations are curated lists designed to help people decide what mouse to buy, which we, as writers, think about constantly and update frequently. When preparing these recommendations, we're not just looking at which products score the best. We take other factors into account, like pricing and availability, and occasionally elements like the buying experience and customer support.

It's important to remember that, at the end of the day, our recommendations are only recommendations. They aren't intended to serve as definitive tier rankings for advanced hobbyists. Rather, we want them to be most helpful for a non-expert audience or for those who haven't purchased a mouse in a while and aren't sure where to start.

Retests

We keep the products we test for as long as they're relevant and widely available for purchase, and often even longer. At regular intervals, our writing, testing, and test development teams collaborate to decide which products to keep and which we can safely resell. We typically hold on to products featured in our recommendation articles, those expected to remain popular despite their age, and occasionally, noteworthy products we may need to use as references when updating our test bench.

Keeping a large number of mice in-house lets us retest them whenever needed. We might retest a mouse for various reasons, such as responding to community reports of build quality or performance issues that show up over time. One of the most common reasons we retest is when manufacturers release major firmware updates, which often promise better sensor performance, new features, or higher maximum polling rates.

This process resembles our review testing but on a smaller scale. Testers perform the retests, and then writers and testers collaborate to validate them. Writers then take over once more and update the text with context on our new results. After our editing team validates the work of our writers, we publish a new version of our review. For full transparency, we leave a public message explaining what changes we made, why we made them, and which tests were affected.

Videos and Further Reading

If you're curious about watching our review pipeline in action, check out this video below.

We also produce in-depth video reviews and recommendations using a similar pipeline of writers, editors, and videographers with multiple rounds of validating for accuracy at every turn. For mouse, keyboard, and monitor reviews in video form, see our dedicated RTINGS.com Computer YouTube channel.

For all other pages on specific tests, test bench version changelogs, or R&D articles, you can browse all our mouse articles.

How to Contact Us

Constant improvement is key to our continued success, and we rely on feedback to help us. We'd encourage you to send us your questions, criticisms, or suggestions anytime. You can reach us in the comments section of this article, anywhere on our forums, on Discord, or by emailing feedback@rtings.com.