The Dreame L10s Ultra is a premium robot vacuum. It boasts an RGB-color camera and a structured light sensor for real-time hazard avoidance. It also includes a lifting mopping system that allows it to vacuum carpets and clean bare floors without getting the former wet, as well as a multi-function dock that not only empties the vacuum's dustbin but also refills its water tank and washes and dries its mopping pads.

Our Verdict

The Dreame L10s Ultra does a reasonable job overall in a household with multiple surfaces. It's good on bare floors but sub-par on carpet, where it struggles to suck up fine debris and does a poor job of picking up pet hair. On a positive note, its obstacle handling is very good. It will also automatically raise its mop heads when transitioning from bare floor to carpet. Its multifunction dock takes care of menial maintenance tasks like emptying the dustbin, refilling the mopping solution, and cleaning and drying the mop pads. Though the mopping system is effective on stains, it needs a detergent solution to work well. This adds to the high ongoing maintenance costs, along with the frequent inspection and cleaning of its many parts.

- Advanced multifunction docking station.

- Lifting mop system.

- Great performance on bare floors.

- Effective hazard recognition system.

- Poor pet hair pickup on carpets.

- Mopping system performs poorly without the addition of detergent.

- Struggles with fine debris on carpet.

- High number of parts to be inspected and cleaned periodically.

The Dreame L10s Ultra is an alright option for pet owners. Its pet hair cleaning performance is pretty poor on carpeted floors, and it does a bad job of sealing in fine allergens as it cleans. It'll also need to make an extra pass to fully clear away any dried-on stains, and that's if you decide to mix detergent into its clean water tank; its stain-clearing performance is quite poor if the system uses only water. That said, this vacuum operates very quietly, so it won't be too much of a disturbance to pets that are easily startled. Thanks to the built-in RGB camera, you can also use it to keep an eye on your pets while you're away from home, and it does a very good job of steering around things like pet waste, so you won't have to worry about any nasty surprises if you leave it running unattended.

- Advanced multifunction docking station.

- Can be used as a roving security camera to check in on pets or children.

- Effective hazard recognition system.

- Poor pet hair pickup on carpets.

- Middling filtration performance.

- Mopping system performs poorly without the addition of detergent.

- High number of parts to be inspected and cleaned periodically.

The Dreame L10s Ultra delivers good debris pickup on bare floors. It's quite effective in handling large and medium-sized material, but you might need to have it do another pass to clear away finer material. It can also leave behind a bit of debris that's been piled along walls and in corners.

- Great performance on bare floors.

The Dreame L10s Ultra's debris pickup performance on carpets is middling. While it'll clear relatively large amounts of bulky and medium-sized debris within a pass or two, it really struggles with finer debris, even when it's only been lightly pressed into carpet fibers.

- Poor pet hair pickup on carpets.

- Struggles with fine debris on carpet.

The Dreame L10s Ultra does a poor job of dealing with pet hair on surfaces like low-pile carpeting. It simply doesn't provide enough surface agitation to lift away hair from carpet fibers and can drag lighter strands around instead of sucking them up.

- Poor pet hair pickup on carpets.

The Dreame L10s Ultra has very good obstacle-handling performance. Its pathing is thorough and efficient, with the vacuum cleaning pretty close to furniture like table and chair legs. Its hazard avoidance system, which uses a 3D structured light sensor and a camera to spot obstacles, is very effective, allowing it to run in cluttered areas without getting stuck or making a bigger mess.

- Effective hazard recognition system.

- 6.8 Multi-Surface Household

- 6.7 Pets

Performance Usages

- 7.5 Debris Pickup: Hard Floor

- 5.6 Debris Pickup: Carpet

- 5.0 Debris Pickup: Pet Hair

- 7.9 Obstacle Handling

Changelog

- Updated Apr 16, 2025: We've added text to this review for the new tests added in Test Bench 1.0.

- Updated Apr 16, 2025: We've converted this review to Test Bench 1.0, which updates our performance testing to include new tests to evaluate Obstacle Adaptability, Obstacle Avoidance, Height Clearance, and Threshold Clearance. We've also introduced new performance usages. For more information, see our changelog.

- Updated Feb 12, 2025: We've lowered the score in Build Quality to better align with other vacuums that meet a similar standard of build quality. The text in that section of the review has also been updated.

- Updated Nov 04, 2024: We've added a link to the Roborock Qrevo S in the Stains section of the review to provide an alternative that's more efficient in scrubbing away stains.

- Updated Oct 11, 2024: We've added a link to the Roborock Qrevo S in the 'Hard Floor Pick-Up' section of the review to provide an alternative that's more effective on bare flooring.

Differences Between Sizes And Variants

The Dreame L10s Ultra is only available in a single color scheme: 'White.' You can see the label for the unit we tested here.

Let us know in the comments if you come across another variant of this vacuum.

Compared To Other Robot Vacuums

The Dreame L10s Ultra is a high-end robot vacuum. It's quite feature-packed for something in this price bracket, with an RGB-color camera for real-time hazard identification and avoidance, a dynamic mop system that it can raise on carpeted floors, and its multifunction dock, which empties the vacuum's dustbin, refills its internal water tank, and washes and dries its mopping pads. While it isn't the most powerful machine on the market nor the most effective in terms of debris pickup, it does offer a wide array of features that make for a hands-off user experience.

If you're looking for alternatives, look at our list of recommendations for the best robot vacuums, the best robot vacuums for hardwood floors, and the best robot vacuums for carpet.

While the Dreame L40 Ultra is the higher-end alternative to the Dreame L10s Ultra, the L10s Ultra offers superior performance on every surface type. This upset is attributable to the L40 Ultra's pathing, which yields inconsistent results and hurts debris clearing by dragging debris around and covering surfaces insufficiently. That said, if you want a robot vacuum that offers all the bells and whistles and granular customization options, the L40 Ultra is the vacuum for you.

The Roborock Qrevo S is slightly better than the Dreame L10s Ultra. The Roborock's multifunction dock uses larger 2.5L dirtbags in comparison to the Dreame dock's 2.15L bags. The Roborock also has a considerably longer max battery life, and its mopping system is a little more effective in scrubbing away stains. On the other hand, the Dreame's hazard avoidance system is far more effective overall, making it a better option for cluttered environments.

The Dreame L10s Ultra is better than the eufy X10 Pro Omni. They're similar in terms of features. Both are hybrid vacuum/mops bundled with multifunction self-empty docks that refill their respective water tanks and wash and dry their mop pads. That said, the Dreame is more effective in dealing with debris on most floor types, and it's less prone to getting stuck compared to the eufy. The Dreamehome app also has a slightly more comprehensive suite of features than the eufy Clean app.

The Roborock S8 Pro Ultra is a slightly more capable robot vacuum than the Dreame L10s Ultra. The Roborock's twin-roller head is better at dealing with pet hair, and its Reactive 3D hazard detection system is better at spotting obstacles, making it less likely to get stuck on obstacles than the Dreame. Its brushroll lifting system also prevents the rollers from getting dirty as it mops. However, it's worth noting that the Dreame's multi-function dock, which has similar capabilities to the Roborock's RockDock, is taller and narrower, making it a little easier to fit in tight spaces.

The eufy Omni C20 and the Dreame L10s Ultra are both robot vacuums with mopping capabilities. While they perform similarly, the C20 comes out ahead overall despite not having as many bells and whistles. It delivers better debris pick-up performance across surface types, although the L10s Ultra's mopping system does a much better job. Another place where the L10s Ultra has the edge is maneuverability. It has real-time obstacle avoidance sensors that work fairly well, while the eufy struggles with common obstacles and tends to get stuck on certain carpeting and tassels.

The Roborock Qrevo Pro and the Dreame L10s Ultra are ultimately very close in overall performance and share very similar feature sets. Both vacuums are bundled with multi-function docks and share similar motor and battery specifications. Their hazard-avoidance systems have a major difference: the Dreame has an RGB camera and structured light sensor, whereas the Roborock has only the latter. This gives the Dreame superior obstacle avoidance performance and allows you to use it as a roving security camera. On the other hand, despite sharing similar mopping systems, the Roborock is much more effective in dealing with stains, and its FlexiArm design allows it to clean thoroughly along walls and in corners.

The Dreame L10s Ultra is a more feature-packed vacuum than the Roborock S8+/S8, but the latter is simply a stronger overall performer. The Dreame is bundled with a more advanced multifunction dock that not only empties its dustbin but also refills its water tank and cleans and dries its mopping pads; you'll need to upgrade to the more expensive Roborock S8 Pro Ultra to get these features. The Dreame vacuum also has a more advanced hazard avoidance system than the regular S8, with the addition of a color-capable camera. That said, the Roborock clears away more debris on carpeted floors, and its twin roller head is far more effective in cleaning pet hair. Its mopping system is also far more effective; the Roborock vacuum's scrubbing does a better job of dealing with stains with just water compared to the Dreame's system, which performs best with the addition of detergent.

The Dreame L10s Ultra and the Narwal Freo X Ultra each have their own strengths, though ultimately, the Dreame is the slightly more well-rounded option. The Dreame incurs fewer maintenance requirements, does a much better job of navigating around obstacles, and has a more effective mopping system. Its companion app is also better overall, with an even bigger feature set and a more intuitive interface. Meanwhile, the Dreame charges faster while offering a longer battery life. It also incurs a slightly lower cost of ownership.

The Roborock Qrevo and the Dreame L10s Ultra each have their own strengths and weaknesses. They're very close in general specifications, which isn't overly surprising considering both brands used to share Xiaomi as a parent company. Both vacuums are bundled with multi-function docks and share similar motor and battery specifications. That said, their hazard-avoidance systems have a major difference: the Dreame has an RGB camera and structured light sensor, whereas the Roborock has only the latter. In practice, this makes the Dreame faster and more accurate in identifying obstacles and allows you to use it as a roving security camera. On the other hand, despite also sharing pretty similarly designed mopping systems, the Roborock is much more effective in dealing with stains.

The Dreame L10s Ultra and the Roborock S7 MaxV are both hybrid vacuum mops with relatively advanced real-time hazard avoidance systems. That said, you'll need to upgrade to the pricey Roborock S7 MaxV Ultra to get something that's bundled with a multifunction docking station. Meanwhile, the Roborock does deliver superior debris-pickup performance on most surface types, and its mopping system does a better job of scrubbing away stains.

Test Results

The Dreame L10s Ultra feels impressively well-built. It's made mostly from high-grade plastic with a mix of glossy and matte finishes and has a dense, solid feel. There are a few obvious weak points, though: the dustbin and the trim piece covering the front-facing camera are made of thin plastic that feels rather brittle.

The vacuum itself is also quite easy to take apart and put back together; the side brush, dustbin, and brushroll are all simple to remove and reinstall.

This vacuum has quite a few parts that you need to clean regularly, though thankfully, everything is pretty easy to access.

- Dirt compartment: Since this vacuum has a self-emptying function, in which debris is sucked from its internal dustbin into a dirtbag inside its dock, you actually won't have to empty its dustbin manually all that often. That said, the manufacturer does recommend rinsing it under water every two weeks. Thankfully, removing it is easy. All you need to do is lift the top cover of the vacuum and pull it free.

- Base station dirtbag: The base station dirtbag is held in place with a plastic collar, so it's pretty easy to slide out after you've opened the dock's dirtbag compartment door. The bag self-seals after you've removed it, preventing dust and debris from spilling out during disposal. It has an advertised capacity of six to eight weeks' worth of debris, though it may fill sooner than that if your floors gather a lot of dirt, dust, and pet hair.

- Vacuum filter: The filter is held in a slot at the top of the vacuum's dirt compartment and can be unclipped very easily. You should pull out any stuck-on solid debris whenever necessary, and the manufacturer recommends that you rinse it under water every two weeks.

- Brushroll: After popping off the brush guard, you can essentially just pull the brushroll out of its slot. The roller is somewhat tangle-prone, especially compared to alternatives like the Roborock S8, which features twin rubber rollers. Thankfully, this unit does come with a cleaning tool that allows you to cut away hair that's tangled in the roller. You should clean the roller twice a week.

- Side brush: You can just pull the side brush out of its slot. You should clear away any stuck-on debris every two weeks, either by hand or with the provided cleaning tool.

- Omni-directional wheel: You should remove the front wheel and rinse it every month to clear out any dirt and grime.

- Sensors/Charging contacts: Once a month, you'll have to wipe off the RGB camera cover at the front of the vacuum, the metal charging contacts underneath the vacuum and at the front of the dock, and the auto-empty inlet with a clean, dry cloth.

The Dreame L10s Ultra's mopping system also has its own fair share of required upkeep.

- Mop pads: The mopping pads are velcroed onto their base plates, which in turn are attached to twin magnetic pegs under the vacuum. You can simply pull these base plates off. No specific maintenance interval is given, but since this unit's multi-function dock washes its pads with warm water and dries the mop pads automatically, you won't have to clean them yourself all that often.

- Multi-function dock clean water tank: You can simply lift the clean water tank upwards from its slot in the dock. You should refill this tank as necessary. The manufacturer recommends rinsing it out every two weeks.

- Multi-function dock dirty water tank: As with the clean water tank, you can just lift the compartment from its slot. You should empty it and clean it every two weeks with the provided cleaning brush.

- Multi-function dock washboard/Base plate: You can slide out the base plate from the base of the station. You should rinse it with cool water whenever necessary.

Any component washed under water should dry for at least 24 hours before being reinserted in the vacuum.

The Dreame L10s Ultra incurs high recurring costs.

- Brushroll: You'll need to replace the brushroll after 300 hours of use or every six to 12 months. Replacements can be purchased here.

- Side brush: The side brush has a service life of 200 hours or approximately three to six months of use. You can buy a two-pack of replacements from the company's website here.

- Filter: The filter needs replacing following 150 hours, according to the maintenance tab of the companion app, or every three to six months, according to the manual. You can buy replacements in packs of two here.

- Mop pads: You should replace the mop pads every 80 hours or one to three months. Replacements can be purchased here.

- Multi-function dock dirtbag: The dirtbag has an advertised capacity of 2.7L, enough for seven weeks' worth of debris. Replacements can be purchased here.
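To get a rough sense of how these service lives translate into yearly replacements, the arithmetic can be sketched as below. This is only an illustration: the hour figures come from the list above, while the weekly runtime passed to the function is a hypothetical value you would swap for your own usage, and `replacements_per_year` is a made-up helper name rather than anything from the Dreamehome app.

```python
# Service life in hours, taken from the replacement intervals listed above.
SERVICE_LIFE_HOURS = {
    "brushroll": 300,
    "side brush": 200,
    "filter": 150,
    "mop pads": 80,
}


def replacements_per_year(hours_per_week: float) -> dict[str, float]:
    """Estimate yearly replacements for each part.

    hours_per_week is an assumed usage figure, not a measured value.
    """
    yearly_hours = hours_per_week * 52
    return {
        part: yearly_hours / life
        for part, life in SERVICE_LIFE_HOURS.items()
    }


if __name__ == "__main__":
    # Hypothetical usage: five hours of cleaning per week.
    for part, count in replacements_per_year(5).items():
        print(f"{part}: roughly {count:.1f} replacements per year")
```

The estimate ignores the calendar-based limits (for example, replacing mop pads every one to three months regardless of hours), so treat it as a lower bound for light users.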

You can also purchase an Accessory Kit bundle, which comes with:

- 2x Dirtbags

- 2x Side brushes

- 1x Brushroll

- 2x Filters

- 6x Mop pads

You can also buy a cleaning detergent, which is injected into the clean water refill tank in a precise quantity. You can add it to the clean water supply by pouring it into a slot at the back of the dock. You can buy a three-pack of 10.1 oz (300 mL) bottles here.

The Dreame L10s Ultra has a relatively large footprint. That isn't down to the vacuum itself, which is similar in shape and size to most other models on the market, but rather its multifunction dock, which is much larger than more conventional self-empty docks due to the addition of two separate water tanks.

The Dreame L10s Ultra's internal dustbin has a capacity of 0.35L, which is actually fairly spacious as far as robot vacuums go. However, it'll still need to return to its docking station pretty often. There's no actual fill sensor within its dustbin; instead, you'll receive reminders to check it through its companion app.

This vacuum has a multifunction docking station. It empties the dustbin into a dirtbag, refills the vacuum's water reservoir for mopping, and washes its mop pads before drying them with a flow of warm air. According to the manufacturer, its dirtbag has sufficient capacity for 60 days' worth of periodic cleaning sessions.

- Dreame L10s Ultra vacuum

- Dirt compartment

- 1x Vacuum filter

- 1x Brushroll

- Brushroll guard

- 2x Mopping pads

- 2x Mopping pad mounting plates

- Multifunctional Dock

- Multifunctional Dock Extension Plate

- Washboard

- Clean Water Tank

- Used Water Tank

- Multifunction dock power cable

- 2x Dust Collection bags

- Dust Cabinet cover

- Cleaning brush

- 1x 10.1 oz (300 mL) Multi-Surface Floor Cleaner bottle

- User Manual

The Dreame L10s Ultra has excellent battery performance. It uses a 5200 mAh lithium-ion pack, the same capacity as the pack found in the Roborock Qrevo, but it has a shorter overall runtime. It can run for just under an hour in its 'Turbo' setting. Setting it to the low-power 'Quiet' mode extends its battery life to a little under three hours. This is still plenty of time to deal with lightweight debris but considerably less than the four-hour-plus max runtime from the Roborock.

The Dreame L10s is a feature-packed robot vacuum. Like the Roborock Qrevo, it has an Auto-Mop Lift feature, which lifts the mopping pads when the vacuum is moving on a carpeted surface or when it's returning to its dock, so it doesn't soil the freshly cleaned surface. There are four different suction power modes: the energy-efficient 'Quiet' mode, the default 'Standard' mode, 'Strong,' and 'Turbo,' which is meant for cleaning extremely stubborn debris.

There are various mopping options, too. You can choose between three levels of dampness for its mopping pads: 'Slightly Dry,' 'Moist,' and 'Wet'.

The Dreame features a real-time hazard avoidance system different from the Roborock Qrevo. The Dreame has a structured light sensor and an RGB-color camera, allowing you to use the vacuum as a roving security camera. In contrast, the Roborock has only a structured light sensor.

This vacuum does a good job of cleaning hard floors. Its suction inlet is positioned high enough to easily pass over bulky and medium-sized debris like cereal or rice so that it can suck them up. That said, it does struggle a little bit with fine material like sand, especially when it's been piled up against walls or pushed deep into corners. Check out the Roborock Qrevo S if you want something that performs a little better on this surface type.

This vacuum's performance on high-pile carpet is okay. It can clear large and medium-sized debris like cereal or rice pretty efficiently, but it has a hard time with finer debris that's been pressed into carpet fibers and will likely need another run to collect everything. Check out the Roborock Qrevo Pro if you want a robot vacuum that performs better on this surface type.

Note: The vacuum's cliff sensors can trigger on dark-colored surfaces, resulting in navigational issues. We got around this issue in testing by covering the cliff sensors in white gaffer tape, but you likely won't do the same if you plan to use this vacuum at home.

This vacuum delivers inadequate performance on low-pile carpet. It's quite good at dealing with bulky debris, but it may leave behind some smaller solid debris along walls and in corners. Unfortunately, it struggles quite a bit with fine debris that's been pressed into carpet fibers. Check out the Roborock S8 Pro Ultra if you want a robot vacuum that performs better on this surface type.

Note: The vacuum's cliff sensors can trigger on dark-colored surfaces, resulting in navigational issues. We got around this issue in testing by covering the cliff sensors in white gaffer tape, but you likely won't do the same if you plan to use this vacuum at home.

This unit has okay airflow performance for a robot vacuum. It falls a little short of flagship models like the Roborock S8+/S8, but it still isn't bad in this regard.

This vacuum is remarkably quiet in normal operation, and you won't have any trouble hearing nearby conversations even when the vacuum is running in the same room in its 'Standard' setting. However, you can expect it to be a little louder if you have a lot of rugs and carpeted floors or simply need to use a higher power level: noise levels can reach as high as 68.8 dBA when it's set to its 'Turbo' suction mode. In contrast, noise levels drop to as little as 58.1 dBA when the vacuum is in its low-power 'Quiet' setting on bare floors. Its multi-function dock produces a peak volume of 70.2 dBA during the self-empty process, which, while louder than the vacuum, is still far from unbearable.
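For a sense of scale, decibels are logarithmic, so the gap between the 'Quiet' and 'Turbo' readings is larger than the raw numbers suggest. The sketch below converts a difference in decibels into a ratio of sound power using the standard relation ratio = 10^(ΔdB / 10). The function name is made up for illustration, and since the readings are A-weighted, the result is best read as a rough comparison rather than a statement about perceived loudness.

```python
# Noise readings quoted in this review, in dBA.
QUIET_DBA = 58.1
TURBO_DBA = 68.8
DOCK_DBA = 70.2


def power_ratio(db_high: float, db_low: float) -> float:
    """Ratio of sound power between two levels given in decibels."""
    return 10 ** ((db_high - db_low) / 10)


if __name__ == "__main__":
    print(f"Turbo vs Quiet: {power_ratio(TURBO_DBA, QUIET_DBA):.1f}x the sound power")
    print(f"Dock vs Turbo: {power_ratio(DOCK_DBA, TURBO_DBA):.1f}x the sound power")
```

As a common rule of thumb, an increase of about 10 dB is often described as sounding roughly twice as loud, which fits the noticeable step up between the two suction modes.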

The Dreame L10s Ultra does a great job of adapting to different obstacles. Its LIDAR sensor maps out rooms quickly, but while it's quick to clean and pretty thorough, it occasionally moves in a sporadic, unpredictable fashion. It's thorough in cleaning under and around furniture like coat racks or table and chair legs, though it might bump into them a little bit. Mirrors pose a minor problem, as the LIDAR sensor's beam can bounce off the reflective surface and register a nonexistent space to clean. You're also better off removing any lightweight tasseled rugs before leaving it to run, as these items can get stuck in its suction inlet.

This vacuum's obstacle avoidance performance is great. Its AI Action hazard recognition system is very effective in spotting obstacles like socks, shoes, and even pet waste on bare floors. While the system's performance degrades slightly on carpets, it's not to the point where you'll have to constantly babysit the machine to make sure it doesn't make a huge mess in a slightly cluttered room.

This vacuum is somewhat tall. Its LIDAR sensor tower is marginally shorter than that of the Dreame L40 Ultra, so it'll have a slightly easier time cleaning under things like TV stands or couches.

This vacuum's threshold-clearing performance is decent. While it might struggle to clear incredibly thick marble thresholds, it won't have too much trouble negotiating most laminate or wood room dividers.

This vacuum does a poor job of sealing in fine particles. Fine allergens bypass its exhaust filter and are blown straight out of its exhaust port and LIDAR sensor.

This vacuum has poor crack-cleaning performance. It struggles with drawing up fine debris wedged deep within crevices, though at the very least, its side brush doesn't fling much debris outwards. Running a second pass in the 'Turbo' suction mode makes little to no difference in terms of performance in this regard, with the final results of said test seen here.

This vacuum's stain-clearing performance is decent with the mop dampness level set to 'Wet,' the mop mode set to 'Highest,' and with detergent poured into the clean water tank. A single pass isn't enough to scrub away a dried-on stain, but a second pass really helps, as seen here.

Running a mopping cycle without detergent results in significantly worse stain-cleaning performance. You can see the vacuum mopping with just water here, and the aftermath of that test here.

After the vacuum has finished mopping, it'll park at the multifunction dock, where the pads press themselves into the textured washboard filled with water to dislodge any stuck-on debris before being dried with a flow of warm air. You can see how the pads looked before the automatic clean cycle here, and after the clean cycle here. This process does dirty the dock's washboard, as seen here.

Take a look at the Roborock Qrevo S if you want a hybrid vacuum/mop unit that does better with dried-on stains.

The Dreame L10s Ultra has a broad array of physical automation features. Most obviously, there's the multifunction dock, which empties its dustbin, refills its water tank, and washes and dries its mopping pads.

Overall functionality is limited without using the app. You can use the buttons on top of the vacuum to have it start a general-purpose cleaning cycle, spot-clean its immediate area, or send it back to its dock.

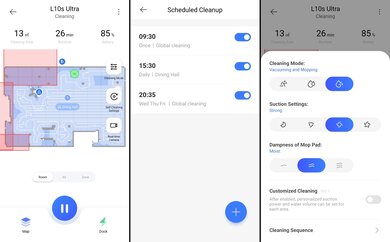

The Dreamehome companion app is very feature-rich. You can see a quick overview of the interface and features here.

Once the vacuum has generated a map of your home, you can access that through the app to set up no-go zones and virtual barriers, set up different cleaning areas and split rooms, label areas, and set floor materials so that you can set up fully custom cleaning modes for different rooms. Like most models in this price bracket, the Dreame supports multi-floor mapping, with memory for four different floors.

Like the Roborock S7 MaxV, you can use the Dreame as a roving security camera by looking through its front-facing camera. It also has a built-in mic and speakers, so you can talk to others through the vacuum and hear what they're saying.

You can use the app to enable 'Carpet' mode, which allows for automatic power adjustment on carpeted floors, 'Carpet Avoidance,' 'Low-Collision' mode, which prioritizes avoiding contact with walls and furniture at the expense of lower-precision scanning in tight spots, and 'Pet' mode, which boosts object detection performance for avoiding hazards like pet waste, but may degrade overall coverage.

Comments

Dreame L10s Ultra: Main Discussion

Let us know why you want us to review the product here, or encourage others to vote for this product.

- 21010

Hello there,

I may have framed my point poorly. What I meant to say was that I would like Rtings to measure and quantify the degree of raised black levels in QD-OLEDs (and other panels for that matter) so that I can better assess whether newer generations of the panels have improved to such a degree that I should be interested again.

I do see the value in QD-OLED technology. It’s just that - with the first generation of the technology - the degree of raised black levels outweigh the positives (for me personally) and I say that from personal experience with a QD-OLED TV.

The other point I was trying to make was that not testing for this technological limitation with QD-OLED could lead to consumers being disappointed with their purchase. It’s a new technology with a new type of image artifact, so it made sense to me that - as with other image artifacts that Rtings measure - it should be taken into consideration when scoring a product.

Edited 1 year ago: clearer wording - 21010

Hi there, I just wanted to add an example of why the degree of raised black levels is important to me, as news has just come out of an upcoming monitor that looks to be the ideal monitor for me (and many others, I’m sure).

Asus has just announced the PG32UCDM - a 32" 4K 240hz QD-OLED monitor set to release in early 2024: https://www.techpowerup.com/312693/asus-unveils-32-inch-4k-oled-rog-swift-pg32ucdm-gaming-monitor

The monitor uses a second generation QD-OLED panel, which Samsung claims to have reduced the raised black levels on, but I would like to know by how much before considering a purchase, and I think others would too.

I hope this use case makes sense to you, and that you will consider making some kind of test specifically to assess this type of panel issue, as it’s impossible to judge the raised black levels on video or still images (unless you make a highly controlled side-by-side comparison).

Thank you.

- 43230

Thanks for the feedback. I made a judgement call when writing the S95C to not mention it because I felt that it wasn’t as much of an issue as it was when the S95B came out. With the S95B, this was a big unexpected thing with the first QD-OLEDs, but whether or not it was even an issue proved to be highly subjective. Yes, the blacks are raised in a bright room when the TV is off, but this is also true of most TVs to a certain degree. Even internally, only about 50% of our team noticed it or was bothered by it. Given that community sentiment seems to agree that, for the most part, this isn’t really an issue, I decided not to mention it. If more users like you write in and feel that I’ve made a mistake on this, then we’ll definitely go in and update the review to mention it.

Hi Adam and Pascal,

Thank you for replying. I definitely think the issue with raised blacks should have a clear spot in QD-OLED reviews, for TVs as well as monitors.

I have an old LG C8 TV and have been very happy with it, and I’ve been very interested in the promises of QD-OLED (for both TV and monitor replacements) but having seen them in stores now, I’m completely uninterested due to the raised blacks.

In my mind, they negate the strongest selling point of OLEDs (especially in the monitor market) when viewed in a bright room, which is going to account for much of the time they are used, even if it isn’t the “ideal” cinematic scenario. To be a bit blunt, I think it almost turns an OLED panel into an IPS one, with enough light in the room.

Samsung has also said they are working on improving the effect, and once sufficiently improved, I would be interested again. It’s just like how ATW filters are an improvement on IPS technology, or how HDR brightness improves over time with all OLEDs. It’s important information for buyers.

For these reasons, I would be very interested to see how QD-OLEDs improve over time, and I think not putting more focus on it is keeping important information away from buyers, especially the less tech savvy ones, who may still want true blacks in bright rooms that don’t have direct reflections.

Edited 1 year ago: Small addition to clarify a point. - 21010

Hey there! I just wanted to start by thanking you for taking the time to share such a detailed message with us. It’s clear you put a lot of though into this and I really do appreciate it! Truthfully, I think you brought up a lot of very valid points, so I’m going to share this information with our test devs. We’ll definitely keep this in mind moving forward! :)

Thanks a lot! Glad to hear it :) Have a great day.

- 32120

If an 8 bit panel with dithering up to 10 bit can display gradients as smoothly as a native 10 bit panel, then you wouldn’t be able to tell them apart. Dithering implementations are pretty good nowadays. Even though they achieve this dithering by flickering, it’s at a really high frequency, it’s a really small range of flicker (between two very similar colors, not like between black and white), and it’s on a sub-pixel basis (so it’s not like the whole screen is flickering), so it isn’t noticeable.
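
To make the flickering idea concrete, here is a minimal sketch of temporal dithering. It is only an illustration under simple assumptions (a fixed 4-frame cycle and a made-up function name, `frc_frames`), not how any particular panel or vendor implements it; real implementations also spread the pattern spatially across neighbouring sub-pixels.

```python
def frc_frames(level_10bit):
    """Approximate one 10-bit level (0-1023) with four 8-bit frames.

    Hypothetical helper for illustration: the sub-pixel alternates between
    two adjacent 8-bit levels so that the average over the 4-frame cycle
    lands on the requested 10-bit value.
    """
    if not 0 <= level_10bit <= 1023:
        raise ValueError("level must be in the 10-bit range 0..1023")
    low, frac = divmod(level_10bit, 4)   # frac = how many frames show the brighter level
    high = min(low + 1, 255)             # clamp at the top of the 8-bit range
    return [high if i < frac else low for i in range(4)]


frames = frc_frames(513)
print(frames)                      # [129, 128, 128, 128]
print(sum(frames) / len(frames))   # 128.25, i.e. 513 / 4
```

The two levels shown are never more than one 8-bit step apart, which is why the flicker range is so small.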

Thanks for replying both times. It’s very enlightening, and a little embarrassing that I’ve been misled for so long about the value of 10bit panels.

- 21010

A good quality 8 bit panel with good dithering can look better than a bad 10 bit panel. If you think of it like a car, a good V6 engine with a turbo can outperform a bad V8 engine.

Oh! I didn’t know that. That’s very surprising to me. And a good analogy too lol. I guess most 10bit gaming panels are actually pretty suboptimal when this one outperforms them.

But just to be absolutely certain - you’re saying that I wouldn’t actually be able to tell this one apart from a 10bit gaming panel with equal color gamut and volume results, even if I tried HDR content? ‘Cause that would mean I’ve fallen victim to marketing bs, along with many others.

Would there even be a difference if you were to create content in a 10bit environment? If not, then 10bit seems entirely pointless as a specification alone.

- 21010

Hey! Thanks for catching this! 10 bit was only possible at 120Hz over DisplayPort and this was just a writing mistake. We will get the review updated as soon as we can!

Ah alright. Wouldn’t it be able to run 10bit at 140hz with a custom resolution though? That should be within DP1.2 bandwidth limitations, but I don’t know if something specific to this monitor affects that.

- 21010

Hi! So for our testing, we only look at the bit depth supported by the display, not the native bit depth of the panel. So even if it’s an 8 bit panel, if it can display 10 bit gradients, we list it as 10 bit.

If it’s only got an 8 bit panel, then how come it displays 10bit color so well? The results are better than a lot of 10bit gaming monitors.

- 21010

Vincent Teoh (HDTVtest), the TV god, has reported that LG Display confirmed this issue: the panel is optimised for 120hz, so when VRR lowers the refresh rate, the sub-pixels are overcharged because they are programmed for 120hz. Vincent believes this is not fixable with firmware and would only be fixed in hardware, e.g. next year’s TVs. Disappointing that RTINGS didn’t pick this up, or the fact that the Samsung QLEDs’ HDR performance drops in gaming mode (less dimming and color volume), all things picked up by Vincent. I guess the CX 48 isn’t the ultimate PC monitor; I’ll wait for next year’s.

Yep, even though there’s no official word on whether a fix is coming, it does seem pretty likely now that a firmware fix will be impossible.

I would very much appreciate it if Rtings could take some photos of the raised black levels, and attempt to adjust the picture to represent what it actually looks like in person. Maybe a comparative image would work, so you could see for yourself how big the black difference is and judge whether it’s important enough for you.

I just wanna know if it brings perfect blacks to LCD levels and ruins the point of getting an OLED (or of having VRR turned on). I hope it only raises blacks slightly and still remains much darker than LED panels, but I just don’t know.

I’ve looked all over for evidence of how it looks, and all I’ve found is a grainy video on YouTube that only proves the issue exists, but doesn’t attempt to show what it looks like in person.

Edited 4 years ago: corrections - 21010

Some retailers list the 48"CX’s weight without the stand at 32.8 lb, but finding single arm mounts that can hold over 30 lb is pretty tough unless you spend ~$350+. So how dumb is this idea I just had: I could attach BOTH arms of a more reasonably priced dual-monitor mount. I’d probably need some kind of transfer/connector plate to keep the 2x 100x100mm arms together at the TV’s 300x200 but that shouldn’t be too bad, right?

As long as the total weight capacity of a dual monitor arm is enough, I guess you’d be fine in that sense. But I think it would be a real pain to get set up properly. Remember that both arms would be moving simultaneously while constricted by the attachment to the other arm, which they are not designed for. Some movements might be ok, while others could be hard to make with two arms moving in slight opposition to each other.

Comparing prices between the Ergotron LX dual arm setup (up to 40lb) with the HX single arm (up to 42lb) the dual arm setup is actually slightly more expensive. Ergotron is expensive, but the same relative difference might also be there for cheaper brands.

- 21010

I don’t know about third party stands, but monitor arms should solve your problem. It’s a heavy monitor though, so options will be limited.

But I’ve found one that can do the job, the Ergotron HX Desk Monitor Arm: https://www.ergotron.com/en-us/products/product-details/45-475#?color=white&buynow=0

It needs an Ergotron adapter though, which has part number 97-759.

- 43230

I wouldn’t recommend it if photo editing is your primary concern. As an OLED it’s amazing for content consumption, but not great for content creation.

For content consumption the colors will look great, but compared to IPS monitors that are made for color accuracy, it’s far off the mark. Also, given that it’s an OLED monitor, you would risk burn-in from the static elements of the photo editing software’s interface. I’m sure it would be great as a secondary monitor to view whatever you edited, but as a monitor to edit on, it just wouldn’t be suitable.

I just looked up the other monitor you mentioned, and that’s a “proper” editing monitor. It’s a far better option for photo editing.

- 21010

I have this TV and I am using it exclusively as a monitor for both work and PC gaming. I have not seen any raised black levels when G-Sync is engaged. In fact, the blacks are amazing. My biggest problem is figuring out how I want to play the game. For example, I was playing Resident Evil 3 in 4K 120hz and it looked amazing, but I switched over to 1440p 120hz with HDR and it looked amazing, and now I can’t decide what I want more: the extra resolution or HDR. Either way, black levels were great. The only thing about this monitor that drives me nuts is the fluctuating brightness. It makes calibration very difficult.

Hi there, I can’t find any information stating that it can’t do 4K 120hz HDR, so I’m guessing the only reason you’re limited is due to not having an HDMI 2.1 source for the monitor, right? With the new RTX 30 series it should be possible at least.

- 21010

Don’t bother with G-Sync Ultimate. It’s absolutely better, but not in a way I think you would care about if you are considering this monitor. It means the monitor has to have proper 1000 nits HDR, which is great, but comes at a very high price. Again, it’s definitely better, but if this LG monitor is around your budget, then a G-Sync Ultimate monitor won’t be. The Acer X35 is one of those monitors, and it’s $1800 on Amazon.

Here’s how to set up VRR best: https://blurbusters.com/gsync/gsync101-input-lag-tests-and-settings/14/

Regarding VRR below 48hz - I’m actually not sure, but I don’t think you should worry about it with a 160hz monitor. Windows will stay at your max refresh rate, and if your games drop below 48 fps when you’ve got a 160hz monitor, I think you would find a way to increase fps not because of VRR support, but because of how crappy <60 fps would feel to you after you’ve gotten used to higher framerates.
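
For what it’s worth, one common way of handling frame rates below the VRR floor is frame multiplication, often called low framerate compensation: each frame is shown more than once so the effective refresh rate lands back inside the supported range. The sketch below only illustrates that idea; the 48-160hz range and the function name `lfc_refresh` are assumptions for the example, and whether a given monitor and GPU combination actually does this is not something I can confirm here.

```python
def lfc_refresh(fps, vrr_min=48, vrr_max=160):
    """Return (multiplier, refresh_hz) that brings fps inside the VRR range.

    Hypothetical illustration of frame multiplication: repeat each frame
    `multiplier` times so that fps * multiplier falls within
    [vrr_min, vrr_max]. Returns None if no multiplier fits.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > vrr_max:
        return None
    multiplier = 1
    while fps * multiplier < vrr_min:
        multiplier += 1
    refresh = fps * multiplier
    return (multiplier, refresh) if refresh <= vrr_max else None


print(lfc_refresh(30))   # (2, 60): each frame shown twice
print(lfc_refresh(100))  # (1, 100): already inside the range
```

With a range as wide as 48-160hz, a multiplier always exists for any frame rate below 48 fps, which is part of why the lower limit matters less on a high refresh rate monitor.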

Edited 4 years ago: additional info - 21010

Wouldn’t the monitor be able to display 10bit 158hz 4:4:4 with a custom resolution set in nvidia control panel? That would only be a mere 2 fps less than ideal. I’ve read about bandwidth limitations on DP1.4, and from the calculations I’ve made, 10bit 158hz should be possible at this resolution, but I’m not an expert on the subject, so I don’t know if anything would get in the way of it.

I used this calculator: https://linustechtips.com/main/topic/729232-guide-to-display-cables-adapters-v2/?section=calc
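
A rough version of that calculation can be sketched as follows. The link figures are the usual effective data rates for four lanes after 8b/10b encoding (17.28 Gbit/s for HBR2 on DP1.2, 25.92 Gbit/s for HBR3 on DP1.4). Everything else is an assumption for illustration: the flat 10% blanking overhead stands in for real reduced-blanking timings, the function names are made up, and the resolutions in the usage lines are placeholders rather than this monitor’s actual mode.

```python
# Effective data rates in Gbit/s (4 lanes, after 8b/10b encoding overhead).
DP_EFFECTIVE_GBPS = {"DP1.2": 17.28, "DP1.4": 25.92}


def required_gbps(width, height, refresh_hz, bits_per_channel,
                  blanking_overhead=0.10):
    """Estimate the uncompressed RGB / 4:4:4 data rate of a video mode.

    blanking_overhead is an assumed flat fraction; exact timings
    (e.g. CVT reduced blanking) would give a more precise figure.
    """
    bits_per_pixel = 3 * bits_per_channel
    active_bits_per_second = width * height * refresh_hz * bits_per_pixel
    return active_bits_per_second * (1 + blanking_overhead) / 1e9


def fits(link, width, height, refresh_hz, bits_per_channel,
         blanking_overhead=0.10):
    """True if the estimated rate is within the link's effective data rate."""
    needed = required_gbps(width, height, refresh_hz, bits_per_channel,
                           blanking_overhead)
    return needed <= DP_EFFECTIVE_GBPS[link]


# Placeholder modes, not taken from any specific review:
print(fits("DP1.2", 1920, 1080, 60, 8))     # True
print(fits("DP1.4", 3840, 2160, 144, 10))   # False without compression
```

Because results near the limit depend heavily on the real blanking intervals, a borderline answer from an estimate like this should be checked against exact timings.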

- 21010

Hey Schmidt89 …

Thank you for the great response :) I may have come across pretty dissatisfied with Rtings in my original post, but I very much appreciate all you do, and every time I write feedback, I get a good, thoughtful response.

I didn’t actually realize the monitor also scored highest for mixed usage. That’s a surprise to be sure, considering 1080p. I had seen the scoring tool before but never used it, because I thought it was a way for me to rate things based on my own observations or opinions, and not as a way to adjust the Rtings score based on my own weighting. What it really is, however, is brilliant! :D I’ll have to start using it for sure. I’m not sure how I’ve found it before though, because it isn’t listed under Tools. Correction: I’ve just found it under the normal score summary as “create your own”. Maybe it should be put under the Tools menu somehow? Just to make it more visible.

I hadn’t looked well enough into the refresh rate scoring, so it’s good to hear it’s already a non-linear scoring. It also looks very fair to be honest. I guess my issue comes from the way it’s weighted then.

Regarding overdrive - I didn’t know there was a difference in general behavior between G-Sync and Freesync. I guess my LG monitor is one of the few with Freesync that is consistent. For me, consistent performance is important, as VRR support is a top priority to me, given that games perform differently, and I like tear-free cinematic games without normal V-sync lag. I think a lot of “cinematic” gamers feel the same way.

I’m glad to hear minimum brightness will be looked at :) And I do hope you end up changing contrast scoring somehow. I was just so surprised at how little the difference was between my LG 27GL850-B at 735:1 and my brother’s Samsung CHG70 at 2925:1. We tested them side-by-side in a blacked-out room at the luminance settings you calibrated to at ~100 cd/m2. We used the game GTFO (a worst-case game that’s mostly black) with duplicate output from the same PC. The difference was there, but it was small. I’d think of it as something like a 1.0 difference, from ~6.0 to ~7.0. In reality though, they are rated 5.5 and 7.8 for contrast, respectively. I guess I’m kinda repeating myself, but I just wanted to give more info on my experience :)

The gaming sub-categories sound like a good idea to me, so I’d definitely support that. The HDR gaming category is a bit confusing to me, but I think it’s a great idea to have it. It’s probably only relevant to console- and cinematic gaming.
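
To put numbers on that comparison, here is a small sketch that converts the two contrast ratios into black levels at ~100 cd/m2 and expresses the gap in stops (doublings). Using a log2 scale as a rough stand-in for how large the difference looks is my own assumption, not how RTINGS scores contrast, and the function names are made up for the example.

```python
import math


def black_level(white_cd_m2, contrast_ratio):
    """Black luminance in cd/m2 for a given white level and contrast ratio."""
    return white_cd_m2 / contrast_ratio


def contrast_gap_in_stops(ratio_a, ratio_b):
    """Difference between two contrast ratios in stops (factors of two)."""
    return abs(math.log2(ratio_a / ratio_b))


lg = black_level(100, 735)     # about 0.136 cd/m2
chg = black_level(100, 2925)   # about 0.034 cd/m2
print(round(lg, 3), round(chg, 3))
print(round(contrast_gap_in_stops(2925, 735), 2))  # just under 2 stops
```

So the roughly 4x difference in contrast ratio is a gap of about two stops in black level, both of which are already quite dark in absolute terms.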

But I think HDR gaming is in a weird spot right now. Compared to TVs, there are hardly any monitors with FALD, and the ones that are supposedly good are all too expensive for reviews. I’ve played a lot of HDR gaming with my OLED TV, and I love it, but later when I tried it with my LG 27GL850-B, I had a hard time seeing any value in it and turned it off (even though it has an HDR gaming score of 6.8). I think it’s due to the fact that my monitor can’t do true blacks with FALD, and I think that even if a monitor has FALD but can’t do true blacks (like the Acer Predator X27 that you reviewed), it would still be kind of meaningless. Especially compared to the “real” HDR effect you get with TVs.

I just checked your TV reviews for the best HDR Movie score of a non-OLED panel, and it’s the Samsung Q9FN. Without local dimming, it has a contrast of ~6,000:1, and with local dimming it’s ~19,000:1. I’ve got an LG C8 OLED myself and have viewed recent top-of-the-line Samsung TVs in person, and as far as blacks go, it’s what I’d call “true black” (although I didn’t view it in a blacked-out room, so I may think slightly differently under such conditions). I think that since my monitor got an HDR Gaming score of 6.8 while (in my opinion) being completely useless, the scoring has to change in order to be useful to the reader, as 6.8 sounds like it would be decent. I don’t have a lot of knowledge on the subject, but I would suggest rating HDR gaming in a very non-linear way. Monitors like mine with HDR support, but without meaningful implementation, should get something like a 1.0 (?) to simply tell that it’s supported but not in a usable way (honestly, I was very surprised by how inconsequential my monitor’s HDR effect was). When HDR monitors arrive with FALD that provide similar contrast to the TV I mentioned, they could get scored in the higher end of the scale.

I just feel like the difference between how my TV does HDR gaming (scored at 8.0) and how my monitor does it (scored 6.8) is far bigger than the scores suggest. I know that TV and Monitor ratings are on completely different scales and can’t be compared the way I just did, but they’re on the same review site, so I think they should be comparable, leading me to think that monitor HDR scores should be rated on a much harsher scale, now that FALD and OLED monitors exist, next to the TV market which has had great HDR for a long time. TVs and monitors don’t exist in a vacuum after all, so I think their scores should both be relative to what’s achievable in gaming overall, regardless of what type of screen you use.

Thanks for reading and have a great day :)

- 2102-1

You plainly didn’t read the post. I’m guessing you only skimmed some of it and understood my point as being “higher refresh rate isn’t important” which isn’t the case. It’s certainly a long read, but I tried explaining the “why” to all my opinions and statements. I explained all I wanted in the first post, so I won’t repeat it. I guess if you think refresh rate is all that matters to gaming monitors, then yes, this is the best monitor out there, until it’s beaten by one with a higher number on it.

- 21010

Awesome, thanks! It’s listed under the “Sizes and Variants” section of the XV273 X review, and I couldn’t add it as a review suggestion myself, so that’s why I thought the way I did.

- 32120

I agree. This is something I’ve often wondered about when reading these reviews - how much emphasis should I put on the image examples of black uniformity (and motion blur for that matter) compared to the actual numbers.

An example would be comparing the LG 27GL83A-B with the LG 27GL850-B. The 83A has a much nicer black uniformity image than the 850, but it’s actually slightly worse by the numbers. Now compare those monitors with the ViewSonic VX2758-2KP-MHD (which has amazing black uniformity) and the 83A clearly looks closer to it than the 850, even though it has a worse score.

I think it would be great if the review had a small note on how much emphasis you should put on the picture compared to the number, and how much the contrast ratio plays a role regarding this (something that also leaves me wondering).

- 32120

I was thinking the same. The VG27AQ specifically has higher scores in all main categories than the AD27QD, so it should win that comparison by default.