Since 2017, we've published over 350 unbiased, trustworthy, and straight-to-the-point monitor reviews. We buy and test more than 30 monitors each year, and every unit is one we purchase ourselves, never a cherry-picked sample. We put a lot into each review, and the process from purchasing to publishing involves multiple teams and people. We do more than just use the monitor for a week; we use specialized and custom tools to measure various aspects of the monitor and produce objective, data-based results.

Each monitor review includes nearly 400 individual tests and around 3,000 words of text, and takes nearly two work weeks in total to produce. If you've ever wondered what goes into producing our monitor reviews, then you've come to the right place. Below, we'll break down what goes into delivering the objective and trustworthy reviews that you've come to expect.

Product Selection

Before we can test any monitor, we first have to buy it. All kinds of monitors are released yearly, including models from lesser-known brands, so we can't buy all of them. We only purchase and test monitors available in the United States, though we don't buy all monitors available in the country, either.

There are different ways for us to buy a monitor. The first and most common is buying popular monitors when they're released or buying other models that have gained popularity over time. We also consider our recommendations, so we might buy a batch of monitors that we need to test for a certain recommendation, like what we did before publishing Best Portable Monitors.

The other way we buy monitors is based on our Voting Tool. You can vote for which monitor you want us to purchase and test. You get one vote every 60 days (or 10 for Insiders), and we buy the winning monitor at the end of the voting cycle as long as it has received at least 25 votes.
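As a rough illustration of the voting rule above, the sketch below tallies votes and returns the winner only if it reaches the 25-vote figure, which is treated here as a minimum. The function name, the input format, and the way ties are resolved (the monitor that appears first in the input wins) are assumptions made for this example, not a description of how the Voting Tool is actually built.

```python
from collections import Counter
from typing import Iterable, Optional

MIN_VOTES_TO_BUY = 25  # threshold mentioned in the text


def pick_winner(votes: Iterable[str], min_votes: int = MIN_VOTES_TO_BUY) -> Optional[str]:
    """Return the most-voted monitor if it reached the threshold, else None.

    `votes` is a hypothetical flat list of monitor names, one entry per vote.
    """
    tally = Counter(votes)
    if not tally:
        return None  # nobody voted this cycle
    monitor, count = tally.most_common(1)[0]
    return monitor if count >= min_votes else None
```

With 26 votes for one monitor and 10 for another, the first is returned; if the leader only has 24 votes, the function returns `None` and nothing is bought for that cycle.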

Keep in mind that we buy each monitor on our own, the same way you would. We don't accept review samples from brands, nor do we buy monitors after a brand tells us to do so. We buy them from Amazon, Best Buy, Newegg, B&H, and other retailers, mainly from the United States. Once we receive the monitor in our lab in Montreal, we unpack and photograph it before it goes to the testing team.

Philosophy

First, let's examine the details of our testing processes and our philosophy for designing these tests.

Our motto is: "We purchase our own products and put them under the same test bench so you can easily compare the results. No cherry-picked units sent by brands. Only real tests." This isn't just a slogan; it's a summary of how we test every monitor. Whether it's a gaming or work monitor, each one goes through the same rigorous testing process, so you can compare the results of any two monitors on the same test bench. We also don't take price or expected performance into consideration, so if an expensive monitor performs the same as a cheaper monitor, it receives the same score.

We regularly update our test methodology to remain at the forefront of the monitor testing industry. Whenever we update our test bench, we retest monitors according to the new methodology. When that happens, the scores and our testing process change, so results between monitors on different test benches aren't comparable.

You can see all our Test Benches below:

- Test Bench 2.1: June 2025

- Test Bench 2.0.1: February 2025

- Test Bench 2.0: April 2024

- Test Bench 1.2: April 2022

- Test Bench 1.1: February 2020

- Test Bench 1.0 (Initial): October 2017
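
The comparability rule above can be sketched in a few lines: two sets of results are only comparable when they come from the same test bench version. The `Review` class and its field names are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    """Hypothetical record of a review; field names are assumptions."""
    monitor: str
    test_bench: str  # version string from the list above, e.g. "2.1"


def comparable(a: Review, b: Review) -> bool:
    """Results are comparable only when both reviews use the same test bench."""
    return a.test_bench == b.test_bench
```

Under this sketch, a review on Test Bench 2.1 and one on Test Bench 2.0.1 would not be treated as comparable until the older one is retested on the newer bench.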

While our methodology gives us a baseline of tests that you can filter by in our table tool, our testers also spend some time researching the product before testing to see if any additional tests are needed. For example, a monitor like the ASUS ROG Swift OLED PG27AQDP has various HDR picture modes, which we tested in addition to the regular HDR brightness testing. These results aren't scored, but they're useful if you want to read more results in the text.

Testing

Before we run through our tests, we ensure the monitor is properly warmed up by playing our four-minute warm-up video of gaming clips for at least 30 minutes. A 'cold' monitor can negatively impact the test results. Then, one of our skilled and highly trained testers goes through our series of tests, which often takes up to a week of work. They start by completing tests that don't need calibration in SDR, like HDR color gamut, HDR brightness, and pre-calibration accuracy. After calibrating the monitor, they complete the rest of the tests.

Once the tester has finished testing, there's a peer review process with another tester to validate all the results. To maintain high quality and standards, the original tester often has to recheck certain results before handing the review to the writers.

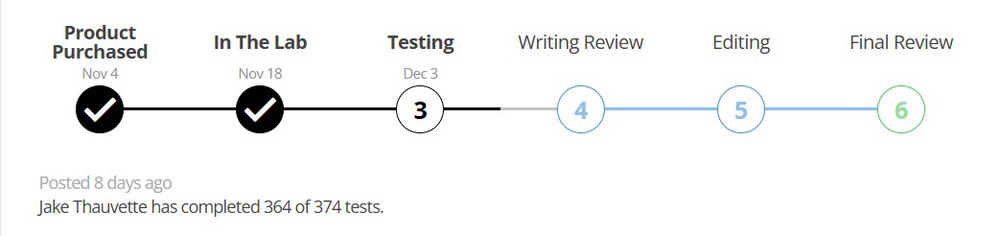

Our Test Bench 2.1 consists of 375 individual tests, but remember that not all tests take the same amount of time: some are quick, while others can take up to an hour. Because of this, we have a review progress tracker to help you see how many tests we've done and understand where we are in the review process. You can also follow each monitor's discussion page to get notifications about its review status.

We split our results into five sections: Design, Picture Quality, Motion, Inputs, and Features. Below, you can see each of these with its respective tests, and you can click the links for more in-depth articles on how we perform each test. You can also see all our learn, test, and R&D articles to learn more.

| Design | Picture Quality | | Motion | | Inputs | Additional Features |
|---|---|---|---|---|---|---|
| Style | Contrast | Local Dimming | Refresh Rate | Variable Refresh Rate (VRR) | Input Lag | Additional Features |
| Build Quality | SDR Brightness | HDR Brightness | VRR Motion Performance | Refresh Rate Compliance | Resolution | |
| Ergonomics | Horizontal Viewing Angle | Vertical Viewing Angle | CAD/Response Time @ Max Refresh Rate | | PS5 and Xbox Series X\|S Compatibility | |
| Stand | Gray Uniformity | Black Uniformity | CAD/Response Time @ 120Hz | | | |
| Display | Color Accuracy (Pre-Calibration) | Color Accuracy (Post-Calibration) | CAD/Response Time @ 60Hz | | Inputs | On-Screen Display (OSD) |
| Controls | SDR Color Gamut | HDR Color Gamut | Backlight Strobing (BFI) | | USB | |
| In The Box | HDR Color Volume | Text Clarity | VRR Flicker | | macOS Compatibility | |
| | Direct Reflections | Ambient Black Level Raise | Image Flicker | | | |
| | Total Reflected Light | Gradient | | | | |

Design

The design section has two main goals. The first is to show you what the monitor looks like from various angles, so our professional photographers take multiple pictures of the product. They take the same photos of every monitor, so comparing two monitors is easy. They may also take additional photos that we include in the text, such as when the monitor has something unique.

The second goal of this section is to show how big the monitor is so you can make sure it will fit in your setup. Our testers take precise measurements of different aspects of the display, and we present the data in both imperial and metric units, so it's easy to understand no matter where you're from.
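
Presenting one measurement in both unit systems is a simple conversion. The sketch below is only an illustration: the function names and the output format are assumptions, and the one fixed fact it relies on is that an inch is defined as exactly 2.54 cm.

```python
CM_PER_INCH = 2.54  # exact by definition


def inches_to_cm(inches: float) -> float:
    """Convert a length in inches to centimeters."""
    return inches * CM_PER_INCH


def format_dimension(inches: float) -> str:
    """Format a measurement in both imperial and metric units (hypothetical layout)."""
    return f'{inches:.1f}" ({inches_to_cm(inches):.1f} cm)'
```

For example, `format_dimension(24.0)` gives `24.0" (61.0 cm)`.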

Our testers also evaluate the monitor's build quality and pay attention to any issues that may arise, such as wobbling or noticeable fan noise. They note these down for our writers, who then add them to the review.

Picture Quality

Most of our picture quality tests are done in a dark, light-controlled testing room, and we use various tools to take our precise and accurate measurements. Most of the tests are done using a PC over a DisplayPort connection, except for some HDR brightness tests that use a Blu-ray player and an HDMI connection. We calibrate the monitor using a Colorimetry Research CR-100 colorimeter and the CalMan 5 for Business software, and most of the tests are done after a full calibration.

We calibrate the monitor using the picture mode and settings that are the most accurate and brightest. Most other settings are left at their default, and we list the settings tested in the Brightness and Color Accuracy test sections. However, these are just the tested settings and not our recommended ones, so you should choose the ones you prefer most. You can also learn how to adjust your monitor's settings.

Some of our picture quality tests require photos that we take with a Nikon D750 camera. In keeping with our philosophy, we use the same camera settings on every monitor to ensure comparable images. We also take pictures of the monitor at a brightness of 100 cd/m² to ensure that the image isn't too bright for the camera's sensor.

Motion

Our motion section is the most data-filled section of the review, and our custom-built response time tool helps us compile all kinds of data. We place the tool on the screen and let our program measure the thousands of data points in this section. If you love data, you'll love this section.

We have interactive graphs alongside linked heatmaps, charts, and pursuit photos so you can see all this data. While most of our motion testing focuses on gamma-corrected Cumulative Absolute Deviation (CAD) to represent how much motion blur there is, we also provide typical response time data.
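
The exact formulation behind our CAD figures isn't covered in this article. As a rough illustration only, the sketch below assumes CAD accumulates the absolute difference between a measured pixel transition and an ideal, instantaneous one over the sampled time window. The function name, the sample values, the sampling interval, and the lack of any normalization are all assumptions, and the inputs are assumed to be gamma-corrected already.

```python
from typing import Sequence


def cumulative_absolute_deviation(
    measured: Sequence[float],
    target: float,
    dt_ms: float,
) -> float:
    """Accumulate |measured - target| over time using a simple rectangle rule.

    measured: gamma-corrected levels sampled every `dt_ms` milliseconds
              (hypothetical data, not real measurements).
    target:   the level an ideal, instantaneous transition would show.
    """
    return sum(abs(level - target) for level in measured) * dt_ms


# Two made-up transitions toward a target level of 1.0, sampled every 1 ms.
fast = [0.0, 0.5, 0.9, 1.0, 1.0]
slow = [0.0, 0.2, 0.4, 0.6, 0.8]
fast_cad = cumulative_absolute_deviation(fast, target=1.0, dt_ms=1.0)
slow_cad = cumulative_absolute_deviation(slow, target=1.0, dt_ms=1.0)
```

In this sketch, the slower transition spends more time away from its target, so it accumulates a larger value, which matches the idea that a higher CAD represents more motion blur.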

We use the same post-calibration settings as with our picture quality tests, but we measure motion with the various overdrive settings that the monitor may have. We choose only one as our recommended setting, which is what the score is based on, but we still include the same data for the other overdrive settings. Unless otherwise mentioned, all motion testing is done over a DisplayPort connection with VRR enabled, and we repeat the testing at various refresh rates.

On top of our already Everest-sized mountain of data, we also take pursuit photos with a moving camera to give you a better understanding of the blur you see on the monitor. The photographed image contains many different elements and colors to represent the variety that you may see on your screen in action-packed content. We also measure both backlight flicker and VRR flicker, which are two different types of flicker.

Inputs

Our inputs section also contains useful data for gaming, just not as much as the motion testing. Most testing here consists of measuring the input lag using our specialized photodiode tool, which, like response time testing, we measure at various refresh rates. However, we do this with VRR disabled, as enabling VRR causes testing issues and rarely has a significant impact on input lag.

The rest of the section contains useful data if you want to connect various devices, like consoles or a macOS computer. We also list which types of ports it has and, if necessary, measure the bandwidth, speed, or power of such ports. Our writers provide any extra info in the text. However, we don't test for everything, so if you're curious about a monitor's capabilities or settings, it's better to read the user manual or contact the manufacturer.

Features

The last section is for any additional features or info that aren't covered in the rest of the review. We list whether the monitor has speakers, RGB illumination, Picture-in-Picture/Picture-by-Picture modes, and a KVM switch. Our testers also write down other important features, which the writers add to the review. We don't normally cover every feature, only the more important ones.

Writing

Testing aims to measure objective, scientific data and evaluate subjective elements. Writing, by contrast, seeks to convey that data in ways most people understand and to explain how the results impact your experience using the monitor. Once the tester is done with the monitor, the writer goes over the data to ensure everything is high-quality. If there are concerns, the writer contacts the tester to check something. Once the results have been approved, the writer publishes the review for Early Access so that Insiders who support us can see the data without any text.

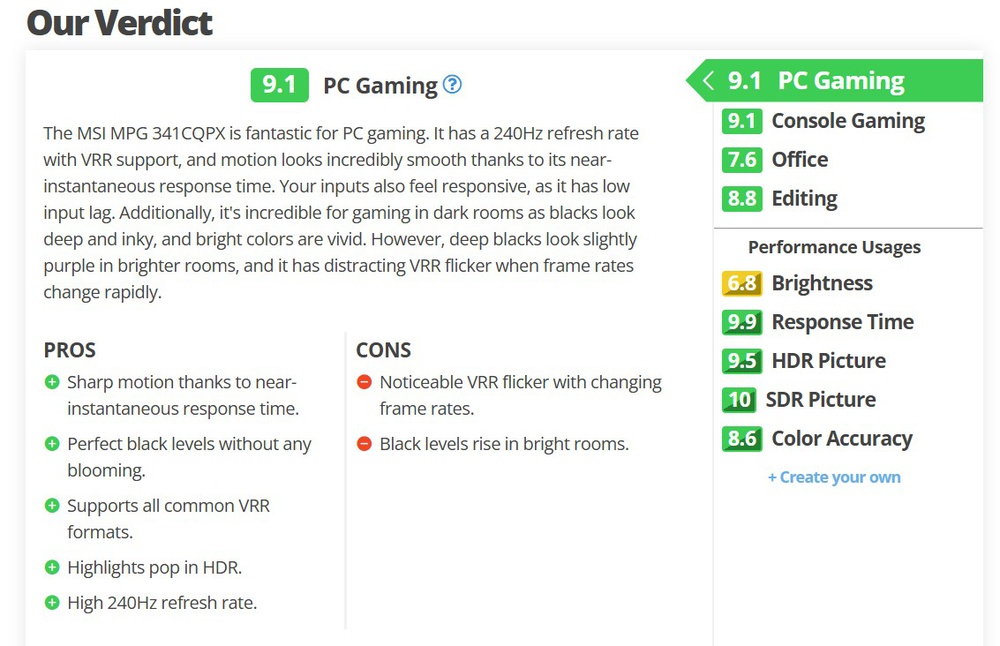

The writer then uses the data, as well as any additional testers' notes, to write each box of the review. The writer is also responsible for writing the Intro, Verdict, Variants, Compared, and Side-by-Side sections of the review. Because of this, the writer must also do some research beforehand to see if there are other variants and to understand the monitor's position in the market and which models it competes against.

Writing normally takes 1-2 business days, with an additional day for the peer-review process with another writer and the tester who tested the product. This process aims to ensure that the writing is correct and error-free and that the data makes sense. It's a lengthy process, but we're committed to providing high-quality results.

Once the two writers have gone through that process, the review is sent to a member of our editing team, who proofreads it and checks it for inconsistencies before publishing it. Nearly ten people are involved in the review process for a single monitor, from ordering and unpacking to taking photos, testing, writing, and editing.

Rewrites and Updates

Publishing the review doesn't mean we get rid of the monitor or forget about it. We keep the monitor until it's no longer useful, whether because it's discontinued or no longer popular, and then we sell it locally in Montreal. But what do we do with the monitor while we have it? We store it in our lab in case we need to run any retests, like when there are firmware updates or community requests to look into something.

When we perform a retest, we don't retest everything; rather, we measure only the necessary aspects. For example, if a monitor receives a firmware update that's supposed to increase the brightness, we'll remeasure the SDR and HDR brightness. A retest goes through a similar but condensed process compared to a normal review, as a second tester validates the results, and then a writer updates the text before it goes to editing.

We also keep these monitors for any future test bench updates.

Recommendations

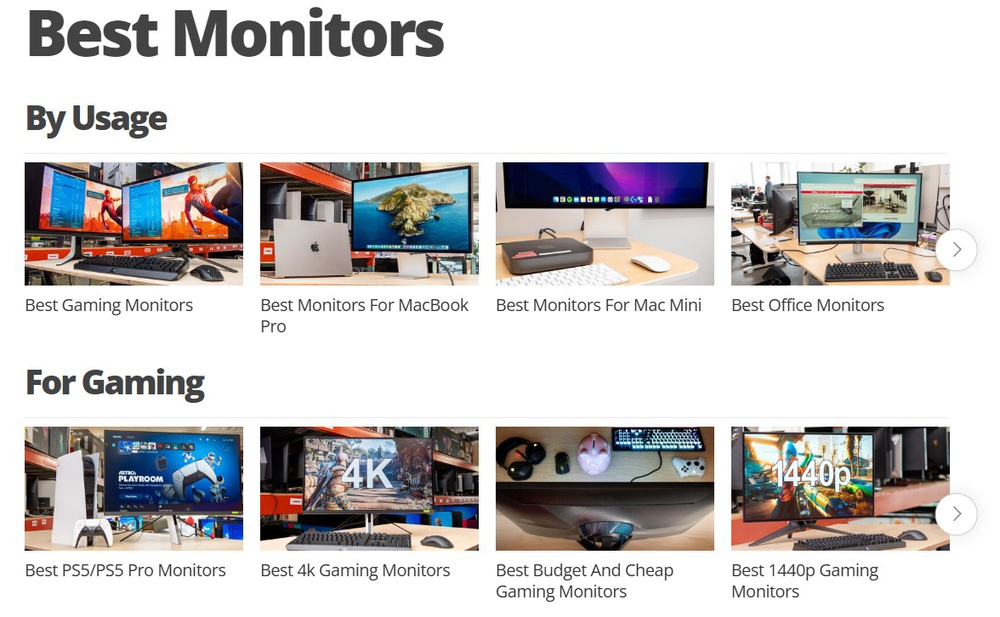

After reviewing the monitor, our writers also consider whether or not it should be included in our Monitor Recommendations. These are buying guides to help people decide which monitor best suits their needs, and we consider multiple factors when recommending monitors. Performance, price, and availability in the United States are the main factors we consider, as our monitor writers don't want to recommend the best monitors just by sorting the table tool. Instead, our writers use their expert knowledge to understand where a monitor stands in the market and recommend the best monitors for most people in each category. For example, if two monitors perform exactly the same, but one monitor is cheaper, then we'll recommend the lower-cost one.

We also consider any trade-offs between monitors as you move down price categories, and explain that in the text. If a monitor costs more than another and has extra features, we'll recommend it in a higher price category. For example, if a monitor costs more than our recommended budget monitor and has extra features that set it apart, like a higher resolution or Mini LED backlighting, we'll recommend it in the lower mid-range or mid-range price categories.

That said, these recommendations are just that: recommendations. They're not the best monitors for everyone, but for most people. If you feel like the recommended monitors aren't what you're looking for, you can use any of our tools to find the perfect monitor for your needs.

RTINGS.com Computer Channel

We also produce review and recommendation videos for our RTINGS.com Computer YouTube channel. A writer often writes the script for a video review after the regular review has been published, and it follows a similar process where multiple people are involved in the validation. The tester who tested the product, a second writer, a content editor, and a video team member all ensure that the script is high-quality and accurate.

Once the review script is validated, one of our video editors films with a host and then puts the video together. Once again, multiple people are involved in the video validation process, including making sure the data that appears on the screen is correct. In keeping with our philosophy, our video reviews aren't sponsored by monitor manufacturers, separating us from other reviewers. The total video process takes about a week of work, from scripting to editing.

We also have a video explaining the review process that our video team goes through.

Conclusion

A lot of work goes into the final, in-depth, detailed, and trustworthy reviews that you see on our website and on YouTube. Many people are involved in the review process to ensure each review is published to a very high standard, from unpacking to taking photos, to testing, to writing, and finally, to editing. We aim to ensure all our monitor reviews follow the same unbiased and class-leading process so that it's easy for you to compare monitors before making a buying decision.

Recent Updates

- July 2, 2025: We updated text throughout and added more information about our recent Test Bench Updates.